If you haven’t heard of dbatools, it’s an open source project out on GitHub dedicated to making life easier for Data Professionals. If you have an idea that you don’t see a command for, you can build it and contribute it to the project. Or you can ask if someone has time to write if for you.

Getting dbatools

PowerShell has come a long way over the last 10 years. You can now download the dbatools module from the PowerShell Gallery, right from inside of PowerShell itself. If you’re running PowerShell 5+, simply run this command from an elevated shell and you’ll be ready to go.

|

1 2 3 |

Install-Module dbatools |

If you’re stuck on PowerShell 3, you can use this command to download the latest release of the dbatools module from the GitHub repository.

|

1 2 3 |

Invoke-Expression (Invoke-WebRequest -UseBasicParsing https://dbatools.io/in) |

Once you have the dbatools module downloaded you can get a full list of the commands by running

Reading a backup file using Read-DbaBackupHeader

Today we’re going to take a look at a new command in the dbatools module called Read-DbaBackupHeader. This command will allow you to cross-reference backup files on your SQL Server’s local drive or network share with information from your SQL Server’s backup history. The great thing about having a command like this is that you probably don’t need to look at this kind of information very frequently, so having a command that already has the relationships worked out and has demos on the kind of data you can expect to retrieve with it is a huge help in letting you to get through some infrequent task and back to what you love doing the most.

Let’s start with looking at a single backup file with the Read-DbaBackupHeader command. To do that we can run

|

1 2 3 |

Read-DbaBackupHeader -SqlServer localhost -Path 'C:\Program Files\Microsoft SQL Server\MSSQL12.MSSQLSERVER\MSSQL\Backup\AdventureWorks2014.bak' |

This will give you in the neighborhood of 60+ different pieces of information (properties) about the backup file. Some of these properties will be useful to some people while other properties will be useful to others. Next I’d like to focus on two sets of properties I’ve found quite useful over the years.

Reading a backup file using the -FileList parameter

On a number of occasions, I have found myself trying to restore a backup that was too big for where it was trying to be restored to. Worse yet, is when you are trying to restore multiple databases and the first few succeed but then you run out of space and the last few end up failing. Wouldn’t it be great if you could know how big each data & log file inside the backup file are going to be once you restore them?

Read-DbaBackupHeader makes retrieving this information pretty simple with the -FileList parameter. This parameter is nice because the backup file contains multiple files, so when using this parameter, the command goes ahead and does the work of unwinding that array or properties into rows for you to work with.

|

1 2 3 |

Read-DbaBackupHeader -SqlServer localhost -Path 'C:\Program Files\Microsoft SQL Server\MSSQL12.MSSQLSERVER\MSSQL\Backup\AdventureWorks2014.bak' -FileList |

Using the -FileList parameter you will see another 20+ properties for each data & log file within the backup file. In this case, I’m really only interested in the file itself and its size so I will pipe the results out to the SELECT cmdlet and specify the columns I want.

|

1 2 3 4 5 6 |

Read-DbaBackupHeader -SqlServer localhost -Path 'C:\Program Files\Microsoft SQL Server\MSSQL12.MSSQLSERVER\MSSQL\Backup\AdventureWorks2014.bak' -FileList | SELECT LogicalName, Size, PhysicalName |

By default, the “Size” is reported in bytes. If you want to convert it to MB or GB that’s easy to do by adding some formatting syntax like this.

|

1 2 3 4 5 6 7 8 9 |

[string]$unit= "MB" $measure = "1$unit" Read-DbaBackupHeader -SqlServer localhost -Path 'C:\Program Files\Microsoft SQL Server\MSSQL12.MSSQLSERVER\MSSQL\Backup\AdventureWorks2014.bak' -FileList | SELECT LogicalName, @{Label="SizeIn$unit";Expression={"{0:n2}" -f($_.Size/$measure)}}, PhysicalName |

Looking at just the file name & size in a directory

That’s great information, but what if you needed to get this information for multiple backup files that you were restoring? It only takes a small adjustment to the code to make that happen. Instead of looking at a single file we will take the stem of that directory path and do a Dir on it.

|

1 2 3 4 |

DIR 'C:\Program Files\Microsoft SQL Server\MSSQL12.MSSQLSERVER\MSSQL\Backup\' | Read-DbaBackupHeader -SqlServer localhost |

We then pipe those results over to the Read-DbaBackupHeader command and presto, we have a list of all the data & log files and their sizes for every backup file in the directory.

|

1 2 3 4 5 6 7 |

DIR 'C:\Program Files\Microsoft SQL Server\MSSQL12.MSSQLSERVER\MSSQL\Backup\' | Read-DbaBackupHeader -SqlServer localhost -FileList | SELECT LogicalName, Size, PhysicalName |

Looking at the filename & size of just the backup files you care about

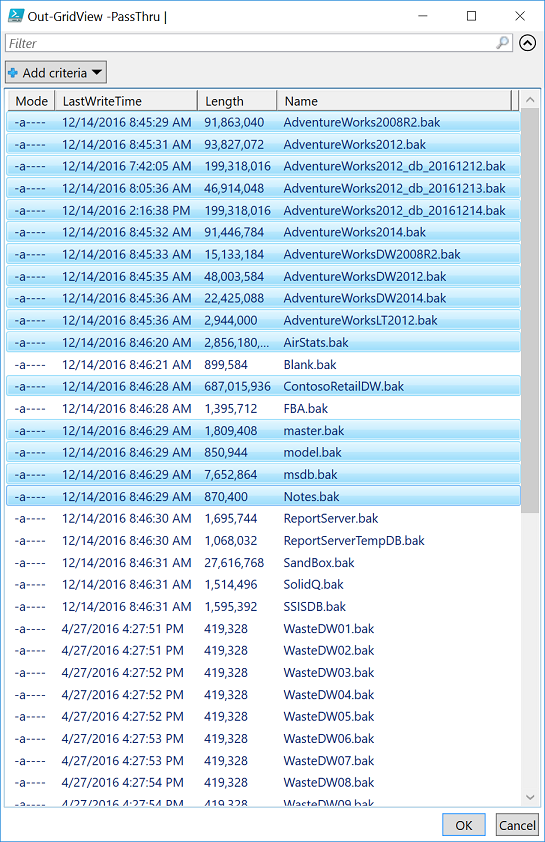

Of course, you may not be trying to restore every backup file in the directory, but maybe a handful. In this case we can use one of my favorite features of PowerShell, the Out-GridView cmdlet and its -PassThru parameter to thin down the list to only the backup files you care about, before sending the list on down to the Read-DbaBackupHeader command.

|

1 2 3 4 5 6 7 8 |

DIR 'C:\Program Files\Microsoft SQL Server\MSSQL12.MSSQLSERVER\MSSQL\Backup\' | Out-GridView -PassThru | Read-DbaBackupHeader -SqlServer localhost -FileList | SELECT LogicalName, Size, PhysicalName |

Backups, were they compressed or not?

Finally, let’s have a look at the backup files and whether they are compressed or not. You probably have your backup compression defaulted to 1 or True but even if you do, a process could still be backing up a database without backup compression turned on, costing you space.

To inspect the backup files to see if they are compressed or not, simply take the same command we were using above (remove the -FileList parameter) and change the properties you’re SELECT’ing to DatabaseName, Compressed, BackupPath.

|

1 2 3 4 |

DIR 'C:\Program Files\Microsoft SQL Server\MSSQL12.MSSQLSERVER\MSSQL\Backup\' | Read-DbaBackupHeader -SqlServer localhost | SELECT DatabaseName, Compressed, BackupPath |

At this point you could pipe the results to the Out-GridView cmdlet to filter and analyze them further. When you do that PowerShell will pop up a grid of results, similar to the Results to Grid setting in SSMS, except a little better. At the top of the window that pops up, there is a search box with the word “Filter” ghosted in it. If you type something in here it will filter every column on every row for that search term. Alternatively, you can click on the “Add Criteria” button and choose to filter the results in the grid based on the values in only a single column.

|

1 2 3 4 5 |

DIR 'C:\Program Files\Microsoft SQL Server\MSSQL12.MSSQLSERVER\MSSQL\Backup\' | Read-DbaBackupHeader -SqlServer localhost | SELECT DatabaseName, Compressed, BackupPath | OGV |

Adding the “Compressed” column and giving it a value of “0” will filter those results down to just the backup files that weren’t compressed when they were created. From here you can work on tracking down what process created those backup files and see about getting them converted to using backup compression.

Wrap up

Again, these are tasks that I would do very infrequently myself, so having a PowerShell command that can help me sort out the information quickly makes it so that I won’t have to remember much to get these tasks done.

Chrissy LeMaire, the leader of the dbatools project, shares many more of the backup/restore related commands in her blog post “dbatools to the backup/restore rescue”. Please join a PowerShell Virtual Chapter of PASS meeting if you can, we hold meetings at least once a month. You can find more information about reading the SQL Server backup header on MSDN: Restore.ReadBackupHeader Method (Server). And of course, you can keep up with the latest developments/improvements the SQL PowerShell is asking for on my blog

See more

Check out ApexSQL Plan, to view SQL execution plans, including comparing plans, stored procedure performance profiling, missing index details, lazy profiling, wait times, plan execution history and more

- Handy features of the dbatools Read-DbaBackupHeader command - December 21, 2016