Today’s businesses work with huge volumes of data. Sometimes, understanding the granularity of the data is helpful in data administration. Understanding the granularity poses unique challenges though and the database administrator needs to balance the key business and technical metrics of the environment. One of the key technical metrics is disk space estimation, which is vital to capacity planning and forecasting. The simplest way to get this information is by using the system stored procedure, called, sp_spaceused. The growth metrics can be captured periodically and stored in a central repository, a repository dedicated to helping with capacity planning and forecasting the disk requirements. This is a critical part of the day-to-day activities of a database administrator.

Introduction

The objective of this article is to look at the possible ways of capturing database usage trends using the most commonly available tools and techniques. The integration of SQL, the use of sp_spaceused, and the flexibility offered by PowerShell have been used to get the required database growth patterns; this data is combined into a single result. After the transformation is done, the data is stored in a dedicated repository.

Background

The output of the stored procedure, sp_spaceused, is a pair of result sets when the @objname parameter is omitted. The stored procedure accepts the @oneresultset parameter starting from SQL 2016, therefore, the result can be viewed in a single result pane. The simulation can be derived using DMVs but the result may vary in terms of numbers, sometimes, based on the use of complex design and data structures. This introduces a level of complexity in the process. To overcomes, this, we use PowerShell with SQL, in order to integrate the two result-sets into one and seamlessly executing the scripts across several servers. The generated stream of data is converted into JSON, the lightweight data interchange format. Using JSON helps simplify the integration of the output into a dedicated repository for data transformation.

Through this article, let’s learn how to integrate SQL and PowerShell to execute stored procedures, as well as the techniques to transform the output into the desired form which focuses on the database usage trends.

We shall discover:

- the workings of sp_spaceused,

- how the stored procedure is executed to transform and merge the result,

- loading of the JSON data,

- the available JSON constructs,

- and more…

Getting Started

The sp_spaceused stored procedure

sp_spaceused is the most common stored procedure used by any database administrator to check the database space usage details. It displays the number of rows, the disk space reserved, and the disk space used by a table, indexed view, or Service Broker queue in the current database, or displays the disk space reserved and used by the whole database.

If objname is omitted, the following result sets are returned with the current database size information.

| Name | Data type | Description |

| database_name | nvarchar(128) | Name of the database. |

| database_size | varchar(18) | Size of the database in MB. It includes both data and log files. |

| unallocated space | varchar(18) | Space available but yet to be reserved for database objects |

| Name | Data type | Description |

| reserved | varchar(18) | Total space allocated by database objects |

| data | varchar(18) | Total data space |

| index_size | varchar(18) | Total indexes space |

| unused | varchar(18) | Total space reserved but not yet used. |

Permissions

Permission to execute sp_spaceused is granted to the Public role. Only members of the db_owner fixed database role can specify the @updateusage parameter.

In SQL Server 2016, the @openresultset parameter made our life much simpler. By specifying the parameter, the result can be shown in one or more result sets.

For example, using sp_spaceused @oneresultset=1, summarizes the space usage details for the context of current database into a single result set.

|

1 2 3 4 5 |

USE ApexSQLBAckup GO EXEC sp_spaceused @oneresultset = 1 |

In the following example using sp_spaceused @oneresultset=0, shows the space usage details for the context of current database in two result sets; this is the default – if we omit the parameter, the result will be displayed in multiple result sets.

|

1 2 3 4 5 |

USE ApexSQLBAckup GO EXEC sp_spaceused @oneresultset = 0 |

Create the PowerShell script

Let us walk through the script, step-by-step, along with looking at the details of the setup and configuration of the script. The complete script can be found in Appendix (A)

Step 1: Declare the variable

Step 2: Declare connection object

- $DataSet1: To store sp_spaceused output of the first result pane

- $DataSet2: To store sp_spaceused output of the second result pane

Step 3: Build connection string to prepare database lists

Step 4: Loop through the listed databases

Step 5: Merge the datasets

Step 6: Conversion to JSON

In this step, we shall generate the data, and pipe that to ConvertTo-Json to get the output as a JSON file.

Step 7: Call the Get-SpaceUsed PowerShell function

Create a text file with the names of the database servers you’d like to get the information for. Let’s call this server.txt. We’ll call this file when calling the function.

Call the PowerShell function

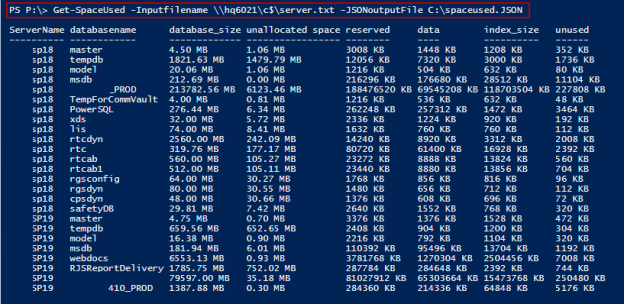

The output of the function is the two result sets merged into one.

PS P:\> Get-SpaceUsed -Inputfilename \\hqdef\c$\server.txt -JSONoutputFile C:\spaceused.JSON

Step 8: Transformation of the JSON into an SQL table

The aforementioned steps yield data in the JSON format, which can be used to generate a JSON file, As for the PowerShell script, it can be called using an SQL Server agent job or Windows Task Scheduler; the script would generate the JSON file. The subsequent step would be to load the data into SQL Table using JSON constructs. This is one of the most recommended ways to load data in JSON format, into an SQL table.

Let us now view the output JSON File

Let us now import the JSON data file into the SQL Server using JSON constructs. The output is joined with OpenRowSet bulk loading function that can read the data from the file. The function returns a single column that contains the contents of the file. The output is then joined using the OPENJSON function. The CROSS APPLY enables us to join the dynamically generated rows from the OPENROWSET function, which is a collection of JSON objects stored in a single column. You can further parse the ServerName as shown in the below query, to fetch the desired result using native JSON SQL constructs.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 |

SELECT t2.value ServerName, databasename , database_size, [unallocated space], reserved, data, index_size, unused FROM OPENROWSET(BULK N'\\hq6021\c$\spaceused.JSON', SINGLE_NCLOB) AS json CROSS APPLY OPENJSON(BulkColumn) WITH( ServerName nvarchar(MAX) as JSON, databasename NVARCHAR(20), database_size NVARCHAR(20), [unallocated space] NVARCHAR(60), reserved NVARCHAR(60), data NVARCHAR(60), index_size NVARCHAR(60), unused NVARCHAR(60) ) AS t CROSS APPLY OPENJSON(Servername) WITH(value nvarchar(100)) t2 |

After collecting the database usage stats over a period of time, we can project the growth rate by self-joining the tables with the previous day’s values. The trend can be plotted on all the columns of the sp_spaceused output columns, and the data can also be aggregated at the instance level.

Step 9: Cleansing SQL data

Now that we’ve polled our servers and written information to our repository, let’s now query it to help in capacity planning. In the following output, we can see the how much free space left in the database. The logdate column is used for log the specific calendar date that an entry was made into the dedicated table.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 |

SELECT t2.value ServerName, databasename , database_sizeMB = replace(database_size, 'MB', '') , unallocatedspaceMB = replace([unallocated space], 'MB', '') , [Free %]=cast(cast(replace([unallocated space], 'MB', '') as decimal(10,2))/cast(replace(database_size, 'MB', '') as decimal(10,2))*100 as decimal(10,2)), reservedKB= replace(reserved, 'KB', '') , dataKB= replace(data, 'KB', '') , index_sizeKB =replace(index_size, 'KB', '') , unusedKB=replace(unused, 'KB', ''), logdate=convert(varchar(20),getdate(),112) FROM OPENROWSET(BULK N'\\hq6021\c$\spaceused.JSON', SINGLE_NCLOB) AS json CROSS APPLY OPENJSON(BulkColumn) WITH( ServerName nvarchar(MAX) as JSON, databasename NVARCHAR(20), database_size NVARCHAR(20), [unallocated space] NVARCHAR(60), reserved NVARCHAR(60), data NVARCHAR(60), index_size NVARCHAR(60), unused NVARCHAR(60) ) AS t CROSS APPLY OPENJSON(Servername) WITH(value nvarchar(100)) t2 |

Wrap Up

In this article, we looked at modifying the output of sp_spaceused system stored procedure, and fetching the raw data that can be put to use after some transformations. We primarily looked at overcoming the challenge of combining the two result sets, using PowerShell to combine the data into a friendly JSON report, which can then be transformed into a SQL table, stored in a central repository; we can now use this repository as a source of raw data which can be used to generate meaningful reports. Also, we saw the integration with OPENROWSET (BULK) function to import JSON files into SQL Server using JSON constructs.

Fetching the raw data and storing is important. When processed, this data would be helpful in capacity planning and forecasting. We shall discuss on how to put this data to use in capacity planning, in a separate article.

Appendix (A)

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 |

<# .Synopsis The objective of the script is to simulate sp_spaceused stored procedure using PowerShell. .Description Many tools are available in the market to measure the database growth and provide accurate results for capacity planning and forecasting. There are customers who still don't own tools to perform this technique. The first question would be How to Forecast Database Disk Capacity If You Don’t Have a Monitoring Tool? .Parameter InputFile The path to the file where the input details are saved. This holds the list of servers where it require to measure database growth Example: c:\InputServer.txt .Parameter JSONoutputfile The path of output file to pull the requested data and save it in a JSON file for further processing .Link https://powershellsql.wordpress.com/ Jump #> Function Get-SpaceUsed{ # The addition of the below line cmdletbinding adds several capabilities such as additional parameter checking, and the ability to easily use the Write-Verbose cmdlet. [CmdletBinding()] Param( # The Mandatory argument indicates that the parameter is required. In this case, the input filename where it lists all the servers is required for further processing [Parameter(Mandatory=$true)] [String] $Inputfilename , [Parameter(Mandatory=$true)] [String] $JSONoutputFile ) #The variable to build connection, command, adapter and dataset #Defining the object to use Connection $SqlConn = New-Object System.Data.SqlClient.SqlConnection #Defining object to use sql commands $SqlCmd = New-Object System.Data.SqlClient.SqlCommand $SqlAdapter = New-Object System.Data.SqlClient.SqlDataAdapter #Dataset to store the adapter result $DataSet1 = New-Object System.Data.DataSet $DataSet2 = New-Object System.Data.DataSet # Store the output ofPSCustomObject to Hashtable $Object=@() # Loop through each server listed in inputfile foreach($srv in get-content $inputfilename) { #The dataset are reset using Reset() method $DataSet1.Reset() $DataSet2.Reset() #Build the connection string. The first and foremost thing is building the connection string. It’s been assigned to the variable for later usability #You can also build the connection string with uses Port, username and password. #$sqlconn = "data source=SQLServer,1433;Initial catalog=master;uid=test;pwd=test124;" $SqlConn.ConnectionString = "Server=$srv;Database=master;Integrated Security=True" # The below statement defines the query text executed across all the instances based on the connection string # The below SQL fetches the database name from sys.databases schema # You can ignore the system database by querying id column ie is select name from sys.databases where database_id>4 $SqlCmd.CommandText = "select name from master.sys.databases" #setup the sql connection using sqlclient namespace $SqlCmd.Connection = $SqlConn #The SqlAdapter will fill the datasets based on the data that we are extracted from the SQL server query $SqlAdapter.SelectCommand = $SqlCmd <#The SqlDataAdapter, serves as a bridge between a DataSet and SQL Server for retrieving and saving data. The SqlDataAdapter provides this bridge by mapping Fill, which changes the data in the DataSet to match the data in the data source, and Update, which changes the data in the data source to match the data in the DataSet, using the appropriate Transact-SQL statements against the data source #> $SqlAdapter.Fill($DataSet1)|out-null <# Now the $DataSet1 variable has the required data. To get to this information, you can access it by calling the dataset by referring the indicator starting at 0. Assigning the dataset to variable for later usability #> $dbs =$DataSet1.Tables[0] #Loop through the listed databases foreach ($db in $dbs) { # prepare the sql statement by appending the database name to system stored procedure sp_spaceused $SqlCmd.CommandText = $db.name+"..sp_spaceused " #setup the sql connection $SqlCmd.Connection = $SqlConnection #Assign the query to an interface to data retrieval $SqlAdapter.SelectCommand = $SqlCmd #fill dataset #SqlDataAdapter to create an in-memory cache of data table that can easily be manipulated. this is something to be keep in mind for larger resultsets. The bigger the result set requires large memory $SqlAdapter.Fill($DataSet2) |out-null } #closed the connection $SqlConnection.Close() <# The below code to merge the resultset of two datasets. The dataset1 holds the first three columns of the sp_spaceused system stored procedure and #second dataset holds the subset of sp_spaceused SP. The PowerShell string evaluation is calling ToString() on the DataSet. In order to evaluate any properties (or method calls), you have to force evaluation by enclosing the expression in $() #> for($i=0;$i -lt $DataSet2.Tables[0].Rows.Count;$i++) { $Object+=New-Object PSObject -Property @{ "ServerName" =$srv "databasename" = $($DataSet2.Tables[0].Rows[$i][0]) "database_size" = $($DataSet2.Tables[0].Rows[$i][1]) "unallocated space" = $($DataSet2.Tables[0].Rows[$i][2]) "reserved" = $($DataSet2.Tables[1].Rows[$i][0]) "data" = $($DataSet2.Tables[1].Rows[$i][1]) "index_size" = $($DataSet2.Tables[1].Rows[$i][2]) "unused" = $($DataSet2.Tables[1].Rows[$i][3])} } } #selecting the attributes to console $Object| Select-Object ServerName,databasename,database_size,"unallocated space",reserved, data,index_size,unused |ft -AutoSize $JSON=$Object| Select-Object ServerName,databasename,database_size,"unallocated space",reserved, data,index_size,unused |ConvertTo-Json #write the output to file $json |Out-File $JSONoutputFile #invoke to process to open JSON file invoke-item $JSONoutputFile } |

See more

Check out ApexSQL Plan, to view SQL execution plans, including comparing plans, stored procedure performance profiling, missing index details, lazy profiling, wait times, plan execution history and more

- Stairway to SQL essentials - April 7, 2021

- A quick overview of database audit in SQL - January 28, 2021

- How to set up Azure Data Sync between Azure SQL databases and on-premises SQL Server - January 20, 2021