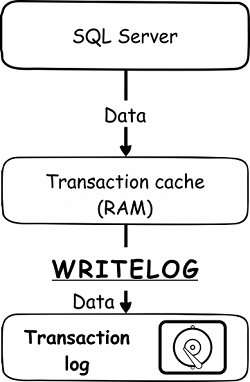

The WRITELOG wait type is seen frequently on SQL Server and can cause a lot of headaches for DBAs. WRITELOG wait time is the time that accumulates while waiting for the content of the transaction log cache to be flushed to the physical disk that stores the transaction log file. To understand the WRITELOG wait type better, some basics of how SQL Server stores data in the transaction log file have to be explained first.

When SQL Server has to store data in the transaction log file, it doesn’t write the data straight to the disk where the transaction log file is stored. Instead, all data is written serially to a Log cache (often referred to as a Log buffer or Log block), which is an in-memory structure. Moreover, the SQL Server OS must comply with the Atomicity, Consistency, Isolation, and Durability (ACID) principles, so the entire log cache is either flushed into the transaction log file stored on the disk subsystem or rolled back if required. The size of the log cache is between 512 B and 60 KB.

The WRITELOG wait type is often misunderstood. A common belief is that it accumulates while SQL Server is writing data into the Log cache, or while data is sitting in the Log cache waiting to be flushed to the transaction log file. Neither is correct. SQL Server starts to register the WRITELOG wait type at the moment the Log cache starts to be flushed to the transaction log file.

So WRITELOG is not directly related to the interaction between SQL Server and the Log cache, or to the Log cache itself. It is strictly related to the communication between the Log cache and the transaction log file. The moment data starts to be flushed to the transaction log file, the WRITELOG wait type is registered, and its time accumulates until the Log cache finishes flushing data from memory to the transaction log file on the disk drive. It follows that the I/O performance of the physical drive is the most important factor for the WRITELOG wait type: the faster the disk subsystem is, the lower the latency of writes to the transaction log file.
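To see how much WRITELOG wait time has accumulated on an instance, the cumulative wait statistics can be queried. The following is a minimal sketch, assuming VIEW SERVER STATE permission; the computed column names (resource_wait_time_ms, avg_wait_ms) are illustrative and not part of the DMV.

```sql
-- Cumulative WRITELOG waits since the last restart
-- (or since the wait statistics were last cleared).
SELECT
    wait_type,
    waiting_tasks_count,                                  -- number of waits on WRITELOG
    wait_time_ms,                                         -- total wait time, signal wait included
    wait_time_ms - signal_wait_time_ms AS resource_wait_time_ms,
    CAST(wait_time_ms * 1.0 / NULLIF(waiting_tasks_count, 0)
         AS DECIMAL(18, 2))            AS avg_wait_ms     -- average time per log flush wait
FROM sys.dm_os_wait_stats
WHERE wait_type = N'WRITELOG';
```

Because the values are cumulative, comparing two snapshots taken some time apart usually says more about the current workload than a single reading.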

Therefore, for any transactional workload that writes to the SQL Server transaction log, I/O performance is equally important for data throughput and application responsiveness. While it is the most common cause, I/O subsystem performance is not the only cause of excessive WRITELOG waits, as the SQL Server engine itself has some hard limits on the number of I/O operations that the Log manager can issue before receiving confirmation that a flush has completed. So, some prerequisites should be fulfilled, and some optimizations performed, to avoid excessive WRITELOG waits.

The disk subsystem optimization and limitation

Transaction log performance is strongly tied to disk subsystem I/O performance, and this factor is often the cause of degraded SQL Server performance and high WRITELOG wait values. There are some rules that production systems should follow, especially when excessive WRITELOG waits are indicated.

The Disk subsystem must provide adequate I/O performance to ensure fast response to I/O requests issued against the transaction log

Improperly sized or inadequately configured disk storage is the main reason behind performance issues related to I/O operations. Quite often the transaction log file (.ldf file) is stored on the same physical drive as the SQL Server data file (.mdf file), forcing the two to share the performance of the disk subsystem and affect each other. Therefore, it is recommended to place the transaction log file on a separate physical drive from the data file. A common misinterpretation is that it is enough to place the data file and the log file on different partitions of the same physical drive. This is not a solution, though, as both files still share the performance limits of the same physical drive. Because writes to the transaction log file are sequential in nature, using separate high-speed physical drives for the transaction log file can significantly increase performance and thus reduce WRITELOG waits.
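A quick way to check where the data and log files of a database live is sketched below. It assumes SQL Server 2008 R2 SP1 or later (for sys.dm_os_volume_stats) and that it is run in the database of interest. Note that it reports volumes, not physical drives, so two different mount points can still sit on the same physical disk; that mapping has to be confirmed at the operating system or storage level.

```sql
-- List the files of the current database together with the volume they are stored on.
SELECT
    DB_NAME(mf.database_id) AS database_name,
    mf.type_desc,                 -- ROWS = data file, LOG = transaction log file
    mf.physical_name,
    vs.volume_mount_point         -- volume (not physical disk) hosting the file
FROM sys.master_files AS mf
CROSS APPLY sys.dm_os_volume_stats(mf.database_id, mf.file_id) AS vs
WHERE mf.database_id = DB_ID()
ORDER BY mf.type_desc;
```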

However, physical I/O performance cannot be expanded without limit, and some factors implicitly add to the disk subsystem I/O load, such as SQL Server transactional replication, transaction log backup operations, SQL Server mirroring, etc., which makes designing and optimizing the disk subsystem even more difficult. As a general rule of thumb, design the disk subsystem so that it can sustain an I/O response time below 5 milliseconds in the worst case.
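To compare a system against the 5 millisecond rule of thumb, the average write latency of each transaction log file can be estimated from the file-level I/O statistics. This is a sketch only: the figures are averages since the instance started, so they can hide short worst-case spikes, and the alias avg_write_latency_ms is illustrative.

```sql
-- Average write latency per transaction log file since instance startup.
SELECT
    DB_NAME(vfs.database_id) AS database_name,
    mf.physical_name,
    vfs.num_of_writes,
    vfs.io_stall_write_ms,        -- total time spent waiting for writes to complete
    CAST(vfs.io_stall_write_ms * 1.0 / NULLIF(vfs.num_of_writes, 0)
         AS DECIMAL(18, 2))       AS avg_write_latency_ms
FROM sys.dm_io_virtual_file_stats(NULL, NULL) AS vfs
JOIN sys.master_files AS mf
    ON  mf.database_id = vfs.database_id
    AND mf.file_id     = vfs.file_id
WHERE mf.type_desc = N'LOG'
ORDER BY avg_write_latency_ms DESC;
```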

The Log manager limitations

As mentioned already, the SQL Server OS itself has a few hard limits on the amount of I/O operations. Two limits are of particular interest for this article: the Outstanding log I/O limit and the Outstanding I/O limit.

Both are hard limits that DBAs cannot change or configure. To maintain data integrity, the SQL Server OS limits the amount of log write I/O that the Log manager has started but that has not yet completed. The moment a limit is reached, the Log manager must wait for outstanding I/O to be acknowledged before it is allowed to issue any new write I/O to the transaction log file. Both limits, the Outstanding log I/O limit and the Outstanding I/O limit, are imposed at the database level.

For an in-depth understanding of those limitations as a frequent cause of high WRITELOG values, see the article Diagnosing Transaction Log Performance Issues and Limits of the Log Manager.
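The limits themselves are not exposed as a setting, but log flush activity for a database can be observed through the performance counters. The sketch below assumes it is run in the database of interest. The counters show how busy the Log manager is, not whether a limit has been reached, and the "/sec" counters are stored as cumulative values in this DMV, so two samples are needed to derive a rate.

```sql
-- Log flush counters for the current database.
-- "/sec" counters are cumulative here: sample twice and divide the difference
-- by the elapsed seconds to get an actual per-second rate.
SELECT
    RTRIM(counter_name) AS counter_name,
    cntr_value
FROM sys.dm_os_performance_counters
WHERE object_name LIKE N'%:Databases%'
  AND instance_name = DB_NAME()
  AND counter_name IN (N'Log Flushes/sec',
                       N'Log Flush Waits/sec',
                       N'Log Flush Wait Time',
                       N'Log Bytes Flushed/sec');
```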

Query optimization

Writing queries is not a hard task in and of itself. But when it comes to optimizing a query for performance, things can become more challenging. Let’s take two elementary INSERT queries, almost identical, that both produce the same final result, to help us understand the difference between a poorly optimized and a well optimized query.

Query 1

```sql
USE [AdventureWorks2014]
GO

DECLARE @c INT
SET @c = 1

-- No explicit transaction: every INSERT commits on its own.
-- "<=" so that exactly 100,000 rows are inserted.
WHILE @c <= 100000
BEGIN
    INSERT INTO [HumanResources].[EmployeePayHistory]
        ([BusinessEntityID]
        ,[RateChangeDate]
        ,[Rate]
        ,[PayFrequency]
        ,[ModifiedDate])
    VALUES
        (@c
        ,'2009-03-07 00:00:00.000'
        ,53.232
        ,4
        ,'2018-06-30 00:00:00.000')

    SET @c = @c + 1
END
```

The above query inserts 100,000 rows of data into a table. What is specific to this query is that it runs without an explicit transaction, so each INSERT is committed on its own as a separate autocommit transaction. On a test machine, the query takes 528 seconds to execute, with a WRITELOG wait time of 507 seconds. Such queries are often accompanied by high WRITELOG wait times.

These high wait times occur because the SQL Server OS flushes the Log cache into the transaction log file when a transaction commits or when the Log cache is filled to its maximum size. In the query above, since every INSERT commits as its own transaction, the Log cache is flushed into the transaction log file on every insert, which is 100,000 times.
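One way to measure the WRITELOG wait time of a single test run is to read the session-level wait statistics right after the query finishes. This is a sketch that assumes SQL Server 2016 or later, where sys.dm_exec_session_wait_stats is available; it is not necessarily how the timings quoted in this article were collected.

```sql
-- Run in the same session, immediately after the test query completes.
SELECT
    wait_type,
    waiting_tasks_count,   -- roughly one wait per log flush the session had to wait for
    wait_time_ms
FROM sys.dm_exec_session_wait_stats
WHERE session_id = @@SPID
  AND wait_type = N'WRITELOG';
```

The session counters start when the session is opened, so running the test in a fresh session keeps earlier activity out of the result.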

Query 2

```sql
USE [AdventureWorks2014]
GO

DECLARE @c INT
SET @c = 1

-- One explicit transaction around the whole loop: a single commit at the end.
BEGIN TRAN

WHILE @c <= 100000
BEGIN
    INSERT INTO [HumanResources].[EmployeePayHistory]
        ([BusinessEntityID]
        ,[RateChangeDate]
        ,[Rate]
        ,[PayFrequency]
        ,[ModifiedDate])
    VALUES
        (@c
        ,'2009-03-07 00:00:00.000'
        ,53.232
        ,4
        ,'2018-06-30 00:00:00.000')

    SET @c = @c + 1
END

COMMIT
```

The second query does the same work as the first, but it is written to use an explicit transaction. The whole WHILE loop is now wrapped in an explicit transaction (BEGIN TRAN ... COMMIT), meaning that the commit happens only once, after the entire loop has executed. That also means that the Log cache is flushed into the transaction log file only when it becomes full or at the final commit. With the query optimized in this way, the number of Log cache flushes to the transaction log file is significantly reduced, which results in much faster query execution and significantly reduced WRITELOG wait time. In this particular case, the query executes in 4 seconds with slightly above 1 second of WRITELOG wait time.

So although the knee-jerk reaction to high WRITELOG values on SQL Server is often to blame the disk subsystem, as we’ve learned, this is not always the case. Check the queries that are causing high WRITELOG wait times and, if possible, optimize them to avoid committing data too often.
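When a single large transaction is not desirable (for example, because it holds locks for the whole run), a middle ground between Query 1 and Query 2 is to commit in batches. The following is a sketch under stated assumptions: dbo.WriteLogDemo is a hypothetical table created only for this illustration, and the batch size of 1,000 is an arbitrary starting point, not a recommendation from the tests above.

```sql
-- Hypothetical demo table; not part of AdventureWorks.
CREATE TABLE dbo.WriteLogDemo
(
    Id      INT       NOT NULL PRIMARY KEY,
    Payload CHAR(100) NOT NULL
);
GO

SET NOCOUNT ON;

DECLARE @c     INT = 1,
        @batch INT = 1000;   -- rows per commit (assumed value, tune per workload)

BEGIN TRAN;

WHILE @c <= 100000
BEGIN
    INSERT INTO dbo.WriteLogDemo (Id, Payload)
    VALUES (@c, 'x');

    -- Commit after every @batch rows, then open the next transaction.
    IF @c % @batch = 0
    BEGIN
        COMMIT;
        BEGIN TRAN;
    END

    SET @c += 1;
END

-- Closes the transaction that is still open after the loop.
COMMIT;
```

With this pattern the number of commits drops from 100,000 to about 100, while each transaction stays small.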

SQL Server Delayed durability

Starting with SQL Server 2014, DELAYED_DURABILITY was added as a new option for transaction commits with one single aim: trading transaction durability for better performance. On a SQL Server with excessive WRITELOG waits, the effect of this option can be significant.

To understand delayed durability, let’s first provide a short background. SQL Server uses write-ahead logging (WAL) to record data modifications to disk. To uphold the ACID properties, WAL guarantees that data modifications are not written to the physical disk before the associated log records are written to disk. Data modifications are never made directly on disk; when a modification occurs, it is performed on the data page stored in the buffer cache. A page with modified data that is stored in the buffer cache and is not yet flushed to disk is called a “dirty page.” The page stays there until a database checkpoint occurs or until it is written out so that the buffer cache space can be used by another data page. When a data modification occurs in the buffer cache, the associated log record that describes the modification is created in the Log cache as well. That log record must always be flushed to disk before the dirty page is flushed to disk, to maintain SQL Server’s ability to roll back data in case of failure. That means that all changes must be written to the transaction log file first, before they can be considered committed and flushed into the data file on disk.
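The dirty pages described above can be observed directly in the buffer cache. A minimal sketch, assuming VIEW SERVER STATE permission and that it is run in the database of interest; on servers with a lot of memory this DMV can take a while to scan.

```sql
-- Count pages of the current database that are modified in memory
-- but not yet written to the data file.
SELECT
    COUNT(*)            AS dirty_pages,
    COUNT(*) * 8 / 1024 AS dirty_mb      -- each page is 8 KB
FROM sys.dm_os_buffer_descriptors
WHERE database_id = DB_ID()
  AND is_modified = 1;
```

Running CHECKPOINT and then repeating the query should show the count dropping, since a checkpoint writes dirty pages to disk.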

Having that in mind, for systems that are experiencing performance issues caused by writes to the transaction log file, SQL Server delayed durability provides an option to relax the Durability requirement of ACID for some data by reporting a commit as successful before the associated log records are flushed to disk. That practically means that SQL Server now tolerates some moderate data loss.

So the SQL Server Delayed Durability option lets a transaction commit as if the Log cache had already been flushed. The logic behind giving up durability in favor of performance is the optimistic judgment that nothing deleterious will happen and that the Log cache is going to be flushed eventually. Therefore, instead of being flushed on every commit, log records continue to accumulate in the Log cache until it reaches its maximum size of 60 KB or until sys.sp_flush_log is executed explicitly. Only then is it flushed to disk, which reduces the number of I/O operations, significantly in some cases. With less log I/O contention, a significant reduction of WRITELOG waits can be expected in some cases, such as query designs that commit data too often.

What has to be noted here is that when using the delayed durability option, there is no guarantee that some data won’t be lost in case of a catastrophic event, like a power outage or a SQL Server crash.

The delayed durability option can be controlled at three different levels: the database level, the transaction (COMMIT) level, and the Atomic block level (In-Memory OLTP natively compiled stored procedures).

In SQL Server 2014 and newer, there are two modes of transaction durability:

- Full transaction durability – This is the standard (default) SQL Server setting that grants full durability for all transactions in the database. This setting matches the behavior of all pre-SQL Server 2014 versions.

This is the recommended option, especially if there is zero tolerance for data loss or when the system is not bottlenecked by transaction log write latency.

It must be stated that some transactions are hardcoded as fully durable, and the delayed durability option cannot affect them regardless of the settings. Delayed durability does not apply to cross-database transactions, Microsoft Distributed Transaction Coordinator (MSDTC) transactions, transactions related to Change Tracking, Change Data Capture, Transactional Replication and FileTable operations, Log shipping and Log backup, or system transactions.

- Delayed transaction durability – This option allows an asynchronous commit mode in which control returns to the client before the Log cache is flushed to disk.

This option must be used carefully and only in systems that can afford a certain level of data loss in the first place, when transaction log write latency is causing the performance bottleneck, or when a workload with a high level of contention would benefit from acquired locks being released faster.

Controlling transactions durability using the DELAYED_DURABILITY option

Database level

To control transaction durability at the database level, use the DELAYED_DURABILITY option with the ALTER DATABASE command.

```sql
ALTER DATABASE [AdventureWorks2014]
SET DELAYED_DURABILITY = { DISABLED | ALLOWED | FORCED };
```

DISABLED – This is the default option that grants full durability for all transactions. It is the highest-level option and overrides any delayed durability option set at the transaction (COMMIT) or Atomic block level.

ALLOWED – When this option is set, the transaction durability decision is delegated to the lower transaction and Atomic block levels. Transactions that explicitly request delayed durability at the transaction or Atomic block level get it. So, this option doesn’t force delayed durability in any way but rather delegates the decision to the lower level.

FORCED – When this option is set, delayed durability is forced on all transactions in the database, except those mentioned above that cannot be delayed durable. This option overrides any durability setting, explicit or default, at the transaction and Atomic block level. It is particularly useful when there is no easy way to control durability at the application level or when changing the application code is not an option.
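As a concrete illustration of the database-level setting, the sketch below switches a database to ALLOWED and then reads the setting back from the catalog. The database name is the AdventureWorks2014 sample used elsewhere in this article; substitute your own.

```sql
-- Delegate the durability decision to individual transactions.
ALTER DATABASE [AdventureWorks2014]
SET DELAYED_DURABILITY = ALLOWED;

-- Verify the current setting (DISABLED, ALLOWED or FORCED).
SELECT name, delayed_durability_desc
FROM sys.databases
WHERE name = N'AdventureWorks2014';
```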

Transaction (COMMIT) level

Delayed durability at the explicit transaction level is applied via the extended syntax of the COMMIT command.

```sql
COMMIT TRANSACTION WITH (DELAYED_DURABILITY = { ON | OFF });
```

ON – When the option is set to ON, the transaction commits with delayed durability, except when delayed durability at the database level is set to DISABLED. In that case, the transaction commits in synchronous mode, and the ON option has no effect.

OFF – This is the default value, and it applies except when delayed durability at the database level is set to FORCED. In that case, asynchronous commit is imposed, and the OFF option (as well as the default durability setting) has no effect.
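Putting the transaction-level option together with sys.sp_flush_log, a delayed durable commit could look like the sketch below. It assumes the database is set to ALLOWED (or FORCED); dbo.AuditTrail is a hypothetical table introduced only for this illustration.

```sql
-- Hypothetical table holding data that can tolerate a small loss.
CREATE TABLE dbo.AuditTrail
(
    Id  INT IDENTITY(1, 1) PRIMARY KEY,
    Msg NVARCHAR(200) NOT NULL
);
GO

BEGIN TRANSACTION;

INSERT INTO dbo.AuditTrail (Msg)
VALUES (N'low-value event');

-- Control returns without waiting for the log records to reach disk.
COMMIT TRANSACTION WITH (DELAYED_DURABILITY = ON);

-- Optional: harden everything committed so far in the current database.
EXEC sys.sp_flush_log;
```

Calling sys.sp_flush_log after every commit would bring back the flush-per-commit pattern, so it is better suited to occasional checkpoints in the application flow.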

Atomic block level

Delayed durability at the Atomic block level is managed via the BEGIN ATOMIC syntax, which is extended with the DELAYED_DURABILITY = ON|OFF option.

```sql
CREATE PROCEDURE dbo.Test_t1
    @p1 bigint not null,
    @p2 bigint not null
WITH NATIVE_COMPILATION, SCHEMABINDING, EXECUTE AS OWNER
AS
BEGIN ATOMIC WITH (
    DELAYED_DURABILITY = ON,
    TRANSACTION ISOLATION LEVEL = SNAPSHOT,
    LANGUAGE = N'us_english')

    INSERT dbo.TestTable VALUES (@p1)
    INSERT dbo.TestTable VALUES (@p2)
END
GO
```

ON – When this option is set to ON, the transaction commits with delayed durability, except when delayed durability at the database level is set to DISABLED. In that case, the transaction commits in synchronous mode, and the ON option has no effect.

OFF – This is the default value, and it always applies except when delayed durability at the database level is set to FORCED. In that case, asynchronous commit mode is imposed, and the OFF option (as well as the default durability setting) has no effect.

In the case of an Atomic block, the ON and OFF options behave differently depending on whether a transaction is already active:

DELAYED_DURABILITY = OFF

There is no active transaction – The Atomic block initiates a new transaction that is fully durable.

The transaction processing is active – The Atomic block creates a save point in the ongoing transaction (fully or delayed durable), and then starts the new fully durable transaction.

DELAYED_DURABILITY = ON

There is no active transaction – The Atomic block initiates a new transaction with delayed durability.

The transaction processing is active – The Atomic block creates a save point in the ongoing transaction (fully or delayed durable), and then starts the new transaction with delayed durability.

Finally, one important note that is often overlooked. When delayed durability is turned on, any normal, planned SQL Server restart or shutdown is treated in the same way as a catastrophic event. So for any SQL Server maintenance that requires a planned restart or shutdown, data loss should be planned for. While data loss might not occur in some specific scenarios, any planned or unplanned restart or shutdown of SQL Server should be treated as a catastrophic event when delayed durability is in use.
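One possible precaution before planned maintenance is sketched below: switch the database back to full durability and flush the log explicitly. This is an assumption about a reasonable runbook step, not a guarantee. Transactions that use delayed durability after these statements run (for example, if the setting is changed back too early) are still exposed, so the advice above to plan for data loss stands.

```sql
USE [AdventureWorks2014];
GO

-- Stop new transactions from committing with delayed durability.
ALTER DATABASE [AdventureWorks2014]
SET DELAYED_DURABILITY = DISABLED;

-- Harden any delayed durable transactions already committed in this database.
EXEC sys.sp_flush_log;
```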


- How to handle the SQL Server WRITELOG wait type - June 13, 2018
