Outline

In this article, we’ll discuss how to read SQL Server transaction logs. This article should provide and insight into how the workload is being captured in the transaction log files. We are going to see how to get the transaction meta-data details and the history of the data will give us a clear idea of how the system is working and helps to decide peak usage hours, also helps to log information about who is doing what?

In this article you’ll learn how:

- To read a SQL transaction log

- to prepare SQL to capture the transaction data

- to deploy, configure and manage data using T-SQL

- to schedule a job using the SQL Server Agent job

- And more ….

Importance of transaction log

The transaction log is a critical and vital component of the database. Every SQL Server database must have a transaction log and it records all transactions made on the database. If there is a system failure, accidental deletes, audits, point-in-time restores, recovery, or setting up High-availability, you will need a transaction-log to bring your database online.

Read SQL Server transaction log

In this article, we’ll discuss how to read transaction log to measure the number the Inserts, Updates, and Deletes operations. Also, discuss how easy is to find those affected objects against these transactions. Surprisingly, it’s not that complex to measure the entire process but obviously, you do need a database with FULL recovery model or SIMPLE if you get in there fast enough before the log clears. So what do you need to do? Well, first things first we need to look at the log.

In general, the transaction logs cannot be read directly because the data is in binary and it’s not in human-readable format. Indeed, the logged transactions details can be read using undocumented functions such as fn_dblog () and fn_dump_dblog (). This gives us an option to read each logged data in the transaction files. It can also be used in conjunction with preparing recovery solutions. Let us get statred to see how to use the fn_dbLog function, make sure to select columns that you’re interested in. fn_dblog is an organized transaction data-set to view the details of the transaction log. All of the transactions are carried forward in the same order in which it got created and each transaction is associated with specific LSN (Log Sequence Number).

Syntax:

fn_dblog (@startLSN,@endLSN)

@startLSN : Beginning of the transaction LSN

@endLSN: End of the transaction LSN

Let us use the following query to analyze the transaction log for the specific transaction.

|

1 2 3 4 5 6 7 8 9 10 11 12 |

SELECT [Current LSN], [Operation], [Context], [Transaction ID], [Begin Time], [end time], [Log Record Length], [AllocUnitName], [Description] FROM fn_dblog (null, null) where [Transaction ID]='0000:000003a3' |

You can find the type of the operation, begin date timestamp and end time and associate object using fn_dblog() function for the specific transaction. Let’s deep-dive into only those operations types that we are interested to capture using the SQL.

Most commonly used operation types are:

- LOP_BEGIN_XACT type is used to find the beginning of the transaction.

- LOP_INSERT_ROWS is to get the insert transaction detail

- LOP_MODIFY_ROW is to get the update transaction detail

- LOP_DELETE_ROWS is to get the delete transaction detail

- LOP_ABORT_XACT is to get the detail about rollback operation

- LOP_COMMIT_XACT, gives the details about the committed transaction

Prepare SQL

In this section, we are going to create dummy table and run through few DML operations (Insert, Delete and Update), and then query the log to see those statement. Let’s dissect the flow into sample SQL for better understanding.

-

Query fn_dblog for the operation type LOP_INSERT_ROWS, LOP_MODIFY_ROWS and LOP_DELETE_ROWS to see what has happened with the all the objects

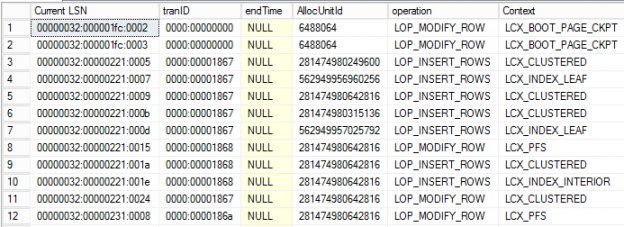

123select [Current LSN], [transaction ID] tranID,[end time] endTime, AllocUnitId, operation, Contextfrom ::fn_dbLog(null, null)where operation in ('LOP_INSERT_ROWS', 'LOP_MODIFY_ROW', 'LOP_DELETE_ROWS')

- Let’s join fn_dblog with the system objects to retrieve transaction information pertaining to only user-defined objects

- Next, getting how many rows were inserted, updated, or deleted alongside the end-time about when the respective transaction committed

-

Fetch only those transaction happened in the last 5 minutes. In this case, the interval is defined as 5 minutes. You can change as per the requirement

123select [Transaction ID] tranID, [End Time] endTimefrom ::fn_dbLog(null, null)where Operation = 'LOP_COMMIT_XACT' and [End Time]>=DateADD(mi, -5, Current_TimeStamp) - Next, pull the [Transaction SID] of each operation type ‘LOP_BEGIN_XACT’ and pass it into SUSER_SNAME () function to get the account details.

- Now, project all these value to get a count of each committed transactions at the object level, and based on the operation type using the Pivotal method

Here is the complete T-SQL that captures the details about how many rows were inserted, updated, or deleted alongside the time when the respective transaction committed

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 |

SELECT objectName, begintime, endtime, account, SUM(LOP_INSERT_ROWS) [Insert], SUM(LOP_MODIFY_ROW) [Update], SUM(LOP_DELETE_ROWS) [Delete] FROM ( SELECT object_name(p.object_id) objectName, tlog.[Current LSN], T.begintime begintime, c.endTime endtime, tlog.Operation operation, T.account account FROM sys.objects so inner join sys.partitions p on p.object_id=so.object_id inner join sys.system_internals_allocation_units AU on p.partition_id=AU.container_id inner join( select [Current LSN], [transaction ID] tranID,[end time] endTime, AllocUnitId, operation, Context from ::fn_dbLog(null, null) where (operation in ('LOP_INSERT_ROWS', 'LOP_MODIFY_ROW', 'LOP_DELETE_ROWS')) --and context not in ('LCX_PFS', 'LCX_IAM')) --or operation in('LOP_COMMIT_XACT','LOP_BEGIN_XACT') )tlog on tlog.AllocUnitId=AU.allocation_unit_id inner join ( select [Transaction ID] tranID, [End Time] endTime from ::fn_dbLog(null, null) where Operation = 'LOP_COMMIT_XACT' and [End Time]>=DateADD(mi, -1500, Current_TimeStamp) ) c on tlog.tranID = c.tranID inner join ( SELECT [Transaction ID] tranID, SUSER_SNAME ([Transaction SID]) AS account, [Begin Time] as begintime FROM fn_dblog (NULL, NULL) WHERE [Operation] = N'LOP_BEGIN_XACT') T on tlog.tranID = T.tranID WHERE so.type='U' ) X pivot ( COUNT(operation) FOR operation in (LOP_INSERT_ROWS, LOP_MODIFY_ROW,LOP_DELETE_ROWS,LOP_ABORT_XACT,LOP_COMMIT_XACT) )p GROUP BY objectName,begintime,endtime,account |

Analyzing the table, we can see that the type of transaction carried out on each user object of the database, by pivoting we can measure, how many rows were inserted, updated, or deleted alongside the time when the respective transaction committed.

Schedule a job

Let us simulate the reading transaction log file using T-SQL with the process known as continuous auditing and schedule a job to run as close to 5 minutes intervals using SQL Server Agent.

In this section, we’ll outline the steps required to capture the transaction type information and scheduled a job to run the Stored Procedure at every 5 minutes. It can also be done without creating a stored procedure.

Let us walk through the entire process using AdventureWorks2016 database.

- Create a stored procedure Audit_TransactionDetail. See Appendix for more information

-

Create a table to log the transaction details

12345678910CREATE TABLE SQL_TLOG_Operation(ObjectName NVARCHAR(100),BeginTime datetime,EndTime datetime,InsertCnt int,UpdateCnt int,DeleteCnt int,Acccount varchar(100),LogDate datetime default getdate()) -

Prepare insert SQL statement

12INSERT INTO SQL_TLOG_Operation(ObjectName,EndTime,InsertCnt,UpdateCnt,DeleteCnt)EXEC Audit_TransactionDetail 5 -

Create a job using SQL Server Agent

- Browse job folder

- Right-click and choose New Job

-

Type in the Job Name. In this case its SQL_LOG_Operation

- Go to Steps

-

In the job step

- Click New

- Type in the Step name

- Select the Database AdventureWorks2016

-

Enter the T-SQL procedure call

-

In the Schedules

- Click New

- Type in the schedule Name

- In the Frequency section, choose Daily

-

In the Daily frequency section, choose to run every 5 minutes

- Test and verify the process

I have performed various DML operations to see how the operations are getting tracked in log file using the following SQL

123USE AdventureWorks2016GOSELECT * FROM SQL_TLOG_OperationWrap up

Thus far, we’ll discussed basics of transaction log, importance of log file, how to read transaction log to measure transaction rate of each object. The table can be further queried to get more granular report. Also, discussed how easy is to find those affected objects against these transactions using fn_dblog() function. It is also very useful technique to measure activities like page splits or objects being dropped etc:-.

That’s all for now…

Appendix

Stored procedure

123456789101112131415161718192021222324252627282930313233343536373839404142434445464748495051525354555657CREATE PROCEDURE Audit_TransactionDetail (@minutes int)ASBEGINSELECTobjectName,begintime,endtime,account,SUM(LOP_INSERT_ROWS) [Insert],SUM(LOP_MODIFY_ROW) [Update],SUM(LOP_DELETE_ROWS) [Delete]FROM(SELECTobject_name(p.object_id) objectName,tlog.[Current LSN],T.begintime begintime,c.endTime endtime,tlog.Operation operation,T.account accountFROMsys.objects soinner join sys.partitions p on p.object_id=so.object_idinner join sys.system_internals_allocation_units AU on p.partition_id=AU.container_idinner join(select [Current LSN], [transaction ID] tranID,[end time] endTime, AllocUnitId, operation, Contextfrom ::fn_dbLog(null, null)where (operation in ('LOP_INSERT_ROWS', 'LOP_MODIFY_ROW', 'LOP_DELETE_ROWS'))--and context not in ('LCX_PFS', 'LCX_IAM'))--or operation in('LOP_COMMIT_XACT','LOP_BEGIN_XACT'))tlog on tlog.AllocUnitId=AU.allocation_unit_idinner join(select [Transaction ID] tranID, [End Time] endTimefrom ::fn_dbLog(null, null)where Operation = 'LOP_COMMIT_XACT' and [End Time]>=DateADD(mi, -1500, Current_TimeStamp)) c on tlog.tranID = c.tranIDinner join(SELECT[Transaction ID] tranID,SUSER_SNAME ([Transaction SID]) AS account, [Begin Time] as begintimeFROM fn_dblog (NULL, NULL)WHERE [Operation] = N'LOP_BEGIN_XACT') Ton tlog.tranID = T.tranIDWHEREso.type='U')Xpivot(COUNT(operation)FOR operation in (LOP_INSERT_ROWS, LOP_MODIFY_ROW,LOP_DELETE_ROWS,LOP_ABORT_XACT,LOP_COMMIT_XACT))pGROUP BY objectName,begintime,endtime,accountEND

Latest posts by Prashanth Jayaram (see all)- Stairway to SQL essentials - April 7, 2021

- A quick overview of database audit in SQL - January 28, 2021

- How to set up Azure Data Sync between Azure SQL databases and on-premises SQL Server - January 20, 2021