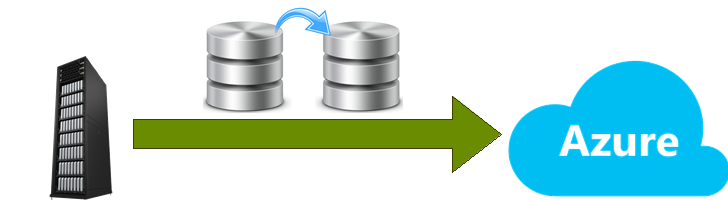

With the evolution of the cloud, specifically Azure, the SQL Server team started to take advantage of the newly opened horizon. In this article we will discuss backups: what are the pros and cons of storing your backup files in Azure?

In the previous article, I started a “Hybrid series”, with the objective of showing how to take advantage of this new cloud era without migrating our entire datacenter. This way, we can keep our servers up and running with the critical services and the critical information, while still using the cloud to offload some tasks, thus building a hybrid scenario.

Talking about backups, we need to check some points before diving into the technical part. First of all, why should I use Azure to store my backups? To be direct, there are two main motivations: minimizing costs and using it as a disaster recovery solution. Let’s check the details…

The “mainstream”

If we stop and think about what we usually do in terms of backup storage and retention, we will end up in one of the following scenarios:

- Store the backups on a disk and retain the files for some time.

- My observation: Good option, because the DBA has direct control over the files. However, as databases grow or new databases are added to an instance, the disk keeps the same capacity. The problem? The available space won’t be enough to store all the files for the initially defined retention period, so backups will start to fail! As the quick fix is to reduce the retention period, the problem will be “extinguished”, people will forget the root cause… and in most cases, the disk won’t be expanded.

- Perform backups directly to tape.

- My observation: Also a good option, as this probably won’t be your responsibility, so disk space is not the DBA’s problem.

On the other hand, the DBA loses all control over the backups, since a (usually paid) third-party tool is used to perform them.

So, for every restore, the DBA needs help from the team responsible for the backups. Think positive: if something goes wrong, you will always have someone to blame :p

- There’s also a very common option that mixes both strategies: the backup is written to disk and retained for a short time, e.g. a week, and then the backup agent (a third-party tool) does its job and copies the file from the disk to tape, where the retention policy is enforced.

- My observation: Of these three, this is my preferred one! Why? Because you, as a DBA, still have control of the most recent backups. So in case of a problem, you will have easy access to the most recent FULL and t-log backups. The other nice point is that the retention period is ensured by the tapes.
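The capacity problem in the first scenario is simple arithmetic, and it also explains why the mixed disk-plus-tape approach keeps the on-disk window short. Below is a minimal Python sketch, assuming one FULL backup per day plus that day’s t-log backups and a constant monthly growth rate. Both are simplifications, and the function names and figures are hypothetical, not taken from any real tool.

```python
# Hypothetical capacity model for the "backups on a local disk" scenario.
# Assumptions (not from any real system): one FULL backup per day plus that
# day's t-log backups, sizes in GB, and a constant monthly growth rate.


def retention_days_that_fit(disk_gb: float, full_gb: float,
                            daily_log_gb: float) -> int:
    """Whole days of FULL + t-log backups that fit on the disk."""
    return int(disk_gb // (full_gb + daily_log_gb))


def first_month_retention_breaks(disk_gb: float, full_gb: float,
                                 daily_log_gb: float, retention_days: int,
                                 monthly_growth: float) -> int:
    """First month (0 = today) in which `retention_days` no longer fits.

    `monthly_growth` is a fraction, e.g. 0.05 for 5% per month, applied
    to both the FULL and the t-log volume.
    """
    if monthly_growth <= 0:
        raise ValueError("with no growth the fit never changes")
    month = 0
    while retention_days_that_fit(disk_gb, full_gb, daily_log_gb) >= retention_days:
        full_gb *= 1 + monthly_growth
        daily_log_gb *= 1 + monthly_growth
        month += 1
    return month


if __name__ == "__main__":
    # 1 TB disk, 80 GB FULL, 20 GB of t-logs per day, 7-day retention, 5% growth
    print(retention_days_that_fit(1000, 80, 20))                 # 10
    print(first_month_retention_breaks(1000, 80, 20, 7, 0.05))   # 8
```

With these made-up numbers, the disk holds 10 days today, but after 8 months of 5% growth it can no longer hold the 7-day retention period. Nothing “breaks” on the day the disk was sized, which is exactly why the problem tends to be patched by shortening retention.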

Looking at these three options, which one is appropriate for my environment? Well, it depends! Defining a backup strategy is a very important, and critical, part of the DBA role, and it is not that easy. Some factors that may influence it:

- The resources available.

- The database size.

- The Recovery Point Objective (RPO).

- The Recovery Time Objective (RTO).

- … and more…
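To see how RPO and RTO turn into concrete checks on a plan, here is a rough Python sketch. It assumes that worst-case data loss equals the t-log backup interval (the case where no tail-log backup can be taken) and that restore time grows linearly with the amount of data to restore. `BackupPlan` and the throughput figure are hypothetical illustrations, not SQL Server objects or measurements.

```python
from dataclasses import dataclass


@dataclass
class BackupPlan:
    """Hypothetical description of a backup plan (not a SQL Server object)."""
    log_interval_min: int        # minutes between t-log backups
    full_gb: float               # size of the most recent FULL backup
    logs_since_full_gb: float    # t-log volume that must be replayed


def worst_case_loss_min(plan: BackupPlan) -> int:
    # If the server is lost and no tail-log backup can be taken, everything
    # written since the last t-log backup is gone: up to one interval.
    return plan.log_interval_min


def estimated_restore_min(plan: BackupPlan, restore_gb_per_min: float) -> float:
    # Naive linear model: restore time proportional to the data to restore.
    # Real restores also depend on log replay, disk speed and, for tapes or
    # Azure, on how long it takes to get the files back.
    return (plan.full_gb + plan.logs_since_full_gb) / restore_gb_per_min


def meets_objectives(plan: BackupPlan, rpo_min: int, rto_min: float,
                     restore_gb_per_min: float) -> bool:
    return (worst_case_loss_min(plan) <= rpo_min
            and estimated_restore_min(plan, restore_gb_per_min) <= rto_min)


plan = BackupPlan(log_interval_min=15, full_gb=200.0, logs_since_full_gb=40.0)
print(meets_objectives(plan, rpo_min=15, rto_min=60, restore_gb_per_min=5.0))  # True
print(meets_objectives(plan, rpo_min=10, rto_min=60, restore_gb_per_min=5.0))  # False
```

The second call fails only because of the RPO: a 15-minute log interval cannot guarantee at most 10 minutes of data loss, however fast the restore is.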

Looking at the scenarios described, we can agree that if you have the backup files on a local disk, right there on the server, restoring them is very simple and easy, right?

Now think about the scenario where tapes are involved. You would probably need to open a ticket with another team so they can load the right tape and restore the database for you. So you would need to:

- Identify the database and the right files to restore.

- Identify and specify the order to restore.

- Open a ticket with all the collected information.

- Wait for someone from the respective team to grab the ticket and start working on it.

- In many cases, they run into problems restoring the database because it still has open connections…

- And some other steps that are not in the DBA’s hands.

Let’s agree that this is not the most practical process… In some cases, the DBAs control the whole process (including tapes), but this is more common in smaller companies.
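Working out which files to restore, and in what order, follows a fixed rule: the latest FULL backup finished before the target time, then the latest differential taken after that FULL (if any), then every t-log backup after that, up to the one that covers the target time. The Python sketch below applies that rule to a list of backup records. It is simplified: it chains backups by finish time, whereas SQL Server actually validates the chain by LSN (the history is recorded in `msdb`, in the `backupset` table). The `Backup` record and its `"FULL"`/`"DIFF"`/`"LOG"` labels are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Backup:
    kind: str          # "FULL", "DIFF" or "LOG" (hypothetical labels)
    start: datetime
    finish: datetime
    path: str


def restore_sequence(backups: list[Backup], target: datetime) -> list[Backup]:
    """Return the backups to restore, in order, to reach `target`.

    Simplified time-based model; real restore chains are validated by LSN.
    """
    ordered = sorted(backups, key=lambda b: b.finish)

    # 1. The most recent FULL that finished before the target time.
    fulls = [b for b in ordered if b.kind == "FULL" and b.finish <= target]
    if not fulls:
        raise ValueError("no FULL backup finished before the target time")
    chain = [fulls[-1]]

    # 2. The most recent DIFF taken after that FULL, if there is one.
    diffs = [b for b in ordered
             if b.kind == "DIFF" and chain[0].finish < b.finish <= target]
    if diffs:
        chain.append(diffs[-1])

    # 3. Every LOG after the last restored backup, up to the one that
    #    covers the target time (that one would be restored with STOPAT).
    last = chain[-1].finish
    for b in ordered:
        if b.kind != "LOG" or b.finish <= last:
            continue
        chain.append(b)
        if b.finish >= target:
            break
    return chain
```

For example, with a FULL at 01:00, a DIFF at 03:00 and hourly logs, restoring to 04:30 yields the FULL, the DIFF, the 04:00 log and the 05:00 log. This is the kind of list you would otherwise have to assemble by hand for the ticket.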

Where does Azure fit into this?

Well, now let’s explore what we can expect from Azure… First of all, backing up to Azure is a process that relies on resources outside your own infrastructure, so be prepared to open connectivity to this service.

If you look at the first article, you will notice that there are different ways to connect to Azure. By default, the backup files are transferred over the internet connection, but there are ways to use a site-to-site (S2S) VPN, for example. The main constraint here is the transfer rate: if the database is too large, the time needed to transfer the file (during backup or restore operations) may become a problem. Still on connectivity, some companies are not comfortable having their data travel over a public network…
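A quick back-of-the-envelope estimate shows when the transfer rate becomes the bottleneck. In this Python sketch, `efficiency` is an assumed fraction of the nominal bandwidth that is actually achieved; the default of 0.7 is a guess, not a measurement.

```python
def transfer_hours(backup_gb: float, link_mbps: float,
                   efficiency: float = 0.7) -> float:
    """Estimate the hours needed to move a backup file over a network link.

    backup_gb:  backup file size in gigabytes (10**9 bytes)
    link_mbps:  nominal bandwidth in megabits per second
    efficiency: assumed fraction of the nominal bandwidth actually achieved
                (protocol overhead, contention); 0.7 is a guess
    """
    bits = backup_gb * 8 * 10**9
    effective_bps = link_mbps * 10**6 * efficiency
    return bits / effective_bps / 3600
```

For instance, `transfer_hours(500, 100, efficiency=1.0)` gives about 11.1 hours for a 500 GB backup over a 100 Mbps link, even at full nominal speed. The same delay applies on the way back during a restore, which matters for the RTO.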

This leads to another point: your data will be outside your “controlled zone”. We can still use complementary solutions to encrypt the files, but some companies still don’t want to try their luck.

OK, we have seen the bad side; let’s check the good one!

As said before, there are two main points that attract people to sending backups to Azure: lower costs and a better DR solution. Let’s look at the details:

- By sending backups to Azure, we can eliminate tapes. Less hassle!

- You might say: “I’m not using tapes! This is not for me.” Wrong!

Just think that you have limited, finite storage space for your data files… In Azure you have “unlimited” storage at a good price. Fewer worries!

- Speaking of “price”, in Azure you pay per use. Let’s pretend that you are storing backups on a local disk. What happens if the space is no longer enough? You add a new disk (or extend the existing one). This operation involves a good amount of gigabytes at once. So, if you have a 1 TB disk and you need to replace it, you would never buy one with less than 1.5 TB… or maybe 2 TB. This costs extra money, and you won’t be using all the disk capacity from day zero. In some cases, you will never use all the free space. So, this is a waste of money!

By paying per use, as your backup files grow, you pay for exactly what you are using. You need more space, you pay more. You enable backup compression and the database backup gets smaller, you pay less.

- By putting backups in Azure, you get a Disaster Recovery scenario. The first point to notice is that you are doing an offsite backup. Your datacenter could be destroyed by aliens, but your backups would still be safe! But there is more: by default, a storage account is replicated to a secondary location… Even if the Azure datacenter has problems, your data will be safe in another location (datacenter).
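The pay-per-use argument can be made concrete with a little arithmetic. The Python sketch below uses entirely hypothetical usage figures and prices (they do not reflect actual Azure pricing). It compares the capacity you pay for but leave idle on an oversized local disk with a bill that tracks actual usage, including the effect of backup compression.

```python
# Hypothetical figures only: these are not Azure prices.


def idle_tb_months(provisioned_tb: float, monthly_usage_tb: list[float]) -> float:
    """Capacity (in TB-months) paid for on a local disk but never used."""
    return sum(provisioned_tb - used for used in monthly_usage_tb)


def pay_per_use_bill(monthly_usage_tb: list[float], price_per_tb_month: float,
                     compression_ratio: float = 1.0) -> float:
    """Bill when you pay only for what is stored each month.

    compression_ratio = compressed size / original size (1.0 = no compression).
    """
    return sum(used * compression_ratio * price_per_tb_month
               for used in monthly_usage_tb)


usage = [1.0, 1.25, 1.5, 1.75]              # backup volume growing over 4 months
print(idle_tb_months(2.0, usage))           # 2.5
print(pay_per_use_bill(usage, 10.0))        # 55.0
print(pay_per_use_bill(usage, 10.0, 0.5))   # 27.5
```

With a 2 TB disk bought up front, 2.5 TB-months of capacity sit idle over these four months. Under pay-per-use the bill follows the growth, and halving the backup size with compression halves the bill.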

Fair enough? Now we are ready to check our technical options! Check the second part to see the options that are already available.
