In the previous articles of this Azure Data Factory series, we showed different scenarios in which we can take advantage of Azure Data Factory to copy data from various data stores and optionally transform the data before loading it into the destination data store. In every example provided in the previous articles, we created a new pipeline from scratch to achieve the required functionality.

In this article, we will see how to create a new pipeline from an existing template.

Why Template

An Azure Data Factory pipeline template is a predefined pipeline that lets you create a specific workflow quickly, without spending time designing and developing the pipeline. Templates are taken from an existing Template Gallery that contains data Copy templates, External Activities templates, data Transformation templates, SSIS templates and your own custom pipeline templates.

Using templates saves you time and effort whenever you need to provision the same resource repeatedly. The same applies to Data Factory pipelines: a template lets you create the pipeline repeatedly and quickly, without having to think about the pipeline design each time you provision the same logic.

How to use

To create a new pipeline from an existing template, open the Azure Data Factory using the Azure Portal, then click on the Author and Monitor option on the Data Factory page. From the Author and Monitor main page, click on the Create Pipeline from Template option.

The same can be achieved from the pipeline Author window, by clicking beside the Pipelines node and choosing the Pipeline from Template option, as shown below:

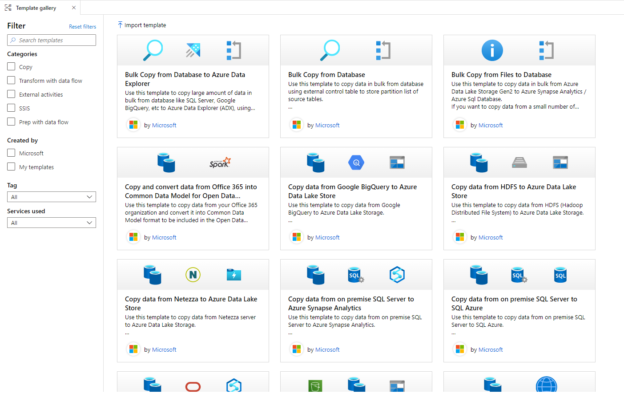

Both methods lead you to the Template gallery page, where you can browse all the predefined Azure Data Factory pipeline templates provided by Microsoft, as well as the custom templates created by you or your team. You can filter the templates by category, by whether they are Microsoft built-in or custom templates, and by tags and services.

To get more information about any pipeline template, click on that template and a new window will be displayed. This window contains a description of the pipeline template, the list of tags related to it, the services that can be used as a source or a sink, the datasets that can be used as sources and sinks, and finally a preview of the template design that shows the activities used to achieve the described functionality.

If you decide to use that pipeline template, select an existing Linked Service or create a new one for each source and sink required by the template, from the same window. Then validate the connection properties of each Linked Service using the Test Connection option.

Two options are then enabled on the pipeline template page. The first, Export Template, lets you export the pipeline JSON files and save them on your machine.

When you export the predefined Data Factory pipeline template, two JSON files are generated: an ARM template and a manifest file. The manifest file contains the template description, the template author, the template tile icons and other metadata about the selected template.

The exported Azure Resource Manager (ARM) template is a JSON file that describes the parameters, variables and resources used to create the Data Factory pipeline. It can be shared with the data engineering or development team so that they can create the pipeline later in their test environment.
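As a rough illustration of what the exported file holds, the following Python sketch summarizes the three sections mentioned above. The sample template is hypothetical and heavily trimmed: the parameter names, the variable and the `MoveFiles` resource name are assumptions made for illustration, not the contents of an actual export.

```python
import json
from pathlib import Path


def load_arm_template(path):
    """Read an exported ARM template JSON file into a dictionary."""
    # utf-8-sig tolerates files saved with or without a byte order mark.
    return json.loads(Path(path).read_text(encoding="utf-8-sig"))


def summarize_arm_template(template):
    """Collect the names found in the parameters, variables and resources sections."""
    return {
        "parameters": sorted(template.get("parameters", {})),
        "variables": sorted(template.get("variables", {})),
        "resources": [
            (resource.get("type"), resource.get("name"))
            for resource in template.get("resources", [])
        ],
    }


# Hypothetical, trimmed-down template used only to exercise the function.
sample_template = {
    "contentVersion": "1.0.0.0",
    "parameters": {
        "factoryName": {"type": "string"},
        "sourceStore": {"type": "string"},
        "destinationStore": {"type": "string"},
    },
    "variables": {
        "factoryId": "[concat('Microsoft.DataFactory/factories/', parameters('factoryName'))]"
    },
    "resources": [
        {
            "type": "Microsoft.DataFactory/factories/pipelines",
            "name": "[concat(parameters('factoryName'), '/MoveFiles')]",
            "properties": {"activities": []},
        }
    ],
}

summary = summarize_arm_template(sample_template)
print(summary["parameters"])
print(summary["variables"])
print(summary["resources"])
```

For a real export, `load_arm_template` would be pointed at the downloaded ARM template file, and the summary gives a quick view of which parameters the receiving team has to supply.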

The second option provided on the pipeline template page is Use this template, which creates a new pipeline in your Azure Data Factory.

You are then notified of the number of resources that were created from the selected pipeline template.

The created pipeline then opens in the pipeline Author window, where you can customize it based on your requirements. This also lets you review the configuration of every activity and confirm that it fits your scenario.

For example, the selected MoveFiles pipeline template consists of three main activities. The GetFileList activity returns the list of files that exist in the source data store. The Filter activity ensures that only files, not folders, are selected from the source data store. Finally, the ForEach activity loops through all the source files, copies each one to the destination data store and then deletes it from the source data store.
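The three-step logic of the MoveFiles template can be sketched with a local-filesystem analogy. This is not how Data Factory runs the pipeline and it uses no Data Factory API; the `move_files` function and the folder names are hypothetical, and local folders stand in for the source and destination data stores.

```python
import shutil
import tempfile
from pathlib import Path
from typing import List


def move_files(source: Path, destination: Path) -> List[str]:
    """Mimic GetFileList -> Filter -> ForEach(copy, delete) on local folders."""
    destination.mkdir(parents=True, exist_ok=True)

    # GetFileList: list everything directly under the source folder.
    children = sorted(source.iterdir())

    # Filter: keep files only, ignoring folders.
    files = [child for child in children if child.is_file()]

    # ForEach: copy each file to the destination, then delete it from the source.
    moved = []
    for file_path in files:
        shutil.copy2(file_path, destination / file_path.name)
        file_path.unlink()
        moved.append(file_path.name)
    return moved


# Small demonstration in a throwaway directory.
with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "source"
    dst = Path(tmp) / "destination"
    (src / "subfolder").mkdir(parents=True)
    (src / "a.csv").write_text("id\n1\n")
    (src / "b.csv").write_text("id\n2\n")

    moved = move_files(src, dst)
    remaining = sorted(p.name for p in src.iterdir())
    copied = sorted(p.name for p in dst.iterdir())

print(moved, remaining, copied)
```

Deleting only after the copy succeeds mirrors the order described for the ForEach activity: a failed copy raises before the source file is removed.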

To test a pipeline that was created from an existing Data Factory pipeline template, you can debug it, as shown in the How to Debug a Pipeline in Azure Data Factory article, or schedule it using an Azure Data Factory trigger, as described in the How to Schedule Azure Data Factory Pipeline Execution Using Triggers article.

In this demo, we will test the pipeline using the Debug option in the pipeline Author window, then provide all the parameters required for the pipeline execution.

The pipeline execution can be monitored from the Output tab, which shows useful information about the execution of each activity in the Data Factory pipeline.

The detailed view of the copy activity additionally shows the amount of data copied between the source and destination data stores, the throughput of the copy process, the consumed resources, and the time that the activity spent in the queue and in transferring the data.
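To make the relationship between these figures concrete, here is a minimal arithmetic sketch. The function name, its arguments and the sample numbers are hypothetical, and this is not necessarily how Data Factory itself derives the values it displays; it only shows how throughput and queue share follow from data volume and durations.

```python
def copy_summary(bytes_written, queue_seconds, transfer_seconds):
    """Derive throughput and queue share from raw copy figures (illustrative only)."""
    if transfer_seconds <= 0:
        raise ValueError("transfer_seconds must be positive")
    total_seconds = queue_seconds + transfer_seconds
    return {
        # Kilobytes moved per second of actual transfer time.
        "throughput_kb_per_s": bytes_written / 1024 / transfer_seconds,
        # Fraction of the measured time spent waiting in the queue.
        "queue_share": queue_seconds / total_seconds,
    }


# Hypothetical run: 10 MB copied, 4 s queued, 20 s transferring.
copy_stats = copy_summary(
    bytes_written=10 * 1024 * 1024,
    queue_seconds=4,
    transfer_seconds=20,
)
print(copy_stats)
```

A high queue share relative to transfer time suggests the run was waiting on resources rather than moving data, which is the kind of reading the detailed view makes possible.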

How to create

In addition to using a predefined pipeline template to create a new pipeline with specific functionality, Azure Data Factory allows you to save your existing pipelines as templates, so that they can be used by different teams or in different development stages with different inputs.

Keep in mind that saving an existing pipeline as a template requires enabling the Git integration option in your Data Factory, so that the templates can be saved in your Azure DevOps Git or GitHub repository.

For example, assume that we have a pipeline that will be used by different teams to perform their data migration process. To save it as a pipeline template, open the pipeline in the Author window and click on the Save as template option.

In the Save as template window, provide a unique and meaningful name for the pipeline template, and a description that explains what the pipeline does. Specify the Git location for the template, which will be saved under the templates folder of the Git repo. Add tags and services to make the template easier to find, and finally provide a link to useful documentation about the pipeline functionality or its required parameters. From the same window, you can save the template and export it as JSON files.

The pipeline template will then be visible, under the Templates section, to all Data Factory users who have access to the Git repo.

The saved custom pipeline template is also shown in the Template Gallery, under the My templates section.

From there, it can be edited, exported and used by different users to create similar Azure Data Factory pipelines, without the need to create them from scratch.

Conclusion

The previous scenarios show how Azure Data Factory pipeline templates make it easier to create new pipelines with one click, without the need to design them from scratch. We also saw how to save your own pipelines as templates so that other teams can use them to perform the same action. Stay tuned for the next trip in the Azure Data Factory world!
