In this article, we will explore SQL Server PolyBase external tables with Azure Blob Storage.

A quick overview of SQL Server PolyBase

PolyBase allows processing data using native SQL queries from the external data sources. It is available from SQL Server 2016 onwards. PolyBase is a database scoped configuration; therefore, it differs from the popular Linked server. You can refer to the article, Explore SQL Server PolyBase External Tables in SQL Server Always On Availability Groups for a comparison between PolyBase and Linked server.

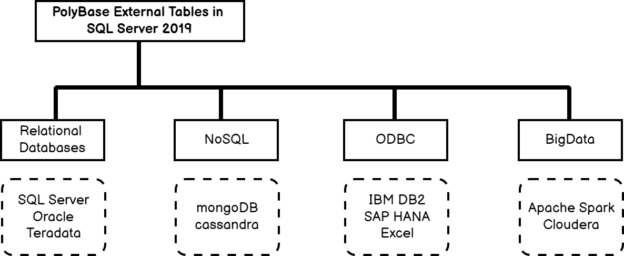

PolyBase supports various data sources for the external tables. It can retrieve data from SQL relational database, NoSQL, ODBC and Bigdata as well.

- SQL Server

- Oracle

- MongoDB

- Hadoop

- Azure Blob Storage

- Teradata

- ODBC Generic Types

In this article, we use SQL Server 2019 for configuring the PolyBase feature. If you do not have it installed, run the SQL Server installation and select the following features.

- PolyBase Query Service for external data

- Java Connector for HDFS data sources

Select the standalone PolyBase using the highlighted option.

It installs the Open JRE 11.0.3 with SQL Server 2019 installation. It is required to connect with the HDFS, Azure storage.

It installs the SQL Server PolyBase Engine and Data Movement service for your SQL instance.

Azure Storage Accounts

The Azure Storage account is a collection of blobs, files, queue, disks and tables. It is highly available, durable, scalable and secure. In this article, we store a flat-file ( .txt) in an Azure storage container and use SQL Server PolyBase to fetch data from it.

First, log in to the Azure portal with your credentials and click on Storage accounts. As shown below, we do have any configured storage account.

Create a storage account using its unique name, location, performance and storage type configurations. You can do additional configurations for networking, data protection; however, we go with default options for this article.

Here, we use Azure Data Lake Store Gen2 in the account kind. You can refer to the article, Introduction to Azure Data Lake Storage Gen2 for more details.

Click on Review+ Create. It validates your configurations and configures a storage account, as shown below.

Create a new container for blob storage. Here, We have a container [sampledata], as shown below.

Upload a comma-separated text file in the storage container, as shown below.

The text file has the following contents.

Steps to configure the SQL Server PolyBase with Azure Bob Storage

Step 1: Enable the PolyBase configuration

At first, use the following sp_configure command to enable the PolyBase feature.

|

1 2 |

EXEC sp_configure 'polybase enabled', 1; Go |

Restart the SQL Server and PolyBase services to activate the PolyBase feature.

If we do not enable the PolyBase, you get the following error while configuring the external data source.

Step 2: Set connectivity to support Windows Azure Blob Storage (WASB driver)

We need to access the text file stored in the Windows Azure Storage Blob (WASB). The WASB is a file system built on top of the Hadoop Distributed File System (HDFS).

|

1 2 |

EXEC sp_configure @configname = 'hadoop connectivity', @configvalue = 7; Go |

You get the following error message during external data source configuration if step 2 is not completed.

Step 3: Get the access key for the Azure Storage Account

SQL Server PolyBase requires the Azure Storage account credentials for connections. You can obtain the access keys for your storage account by navigating to the Storage account page -> Settings -> Access keys.

Note-down the storage account and container name.

In my lab environment, I have the following details.

- Storage Account Name: sqlpolybasedemo

- Container: sampledata

- Access key:

6PcYlCnMQjrJXb7uJC5/iu47VaF5XWEnGbnKy9mEVfYx9DlYxslt6ayB/KYT8C7/G4vjsXLg8+qvB13MSYqKA==

Step 4: Create a new SQL database, database master key and credential

For this article, I created a new SQL database [AzurePolyBaseDemo], as shown below.

Now, create a database master key and the credential using the below script.

- IDENTITY: You can use any string in the IDENTITY section

- SECRET: In the Secret, paste your storage account access keys

|

1 2 3 4 5 6 7 8 |

CREATE MASTER KEY ENCRYPTION BY PASSWORD = 'test@123'; USE AzurePolyBaseDemo Go CREATE DATABASE SCOPED CREDENTIAL [AzurePolyBase] WITH IDENTITY = 'TestAccount', SECRET = 'U6PcYlCnMQjrJXb7uJC5/iu47VaF5XWEnGbnKy9mEVfYx9DlYxslt6ayB/KYT8C7/G4vjsXLg8+qvB13MSYqKA=='; |

Step 5: Create an external data source

In this step, we create an external data source that points to the Azure Blob storage. It uses the following arguments.

- Type: Azure blob storage is compatible with HDFS storage; therefore, use the value HADOOP in the type clause

Location: In the location, we enter the Azure storage account and container information in the following format

- Credentials: Enter the credential name created in Step 4

|

1 2 3 4 5 6 7 |

CREATE EXTERNAL DATA SOURCE AzureStorage WITH ( TYPE = HADOOP, LOCATION = 'wasbs://csvfiles@sqlpolybasedemo.blob.core.windows.net', CREDENTIAL = [AzurePolyBaseDemo] ); |

It creates the external data source for Azure blob storage. You can view it in SSMS by navigating to Source database-> External Resources -> External Data Sources.

Step 6: Create an external file format

We create an external file format with the following details.

- Format type: Delimited Text

- Format Option ( field terminator): In my text file, fields are separated using a comma, therefore specify the comma (,) in the FORMAT_OPTIONS argument.

|

1 2 3 4 5 |

CREATE EXTERNAL FILE FORMAT TextFile WITH ( FORMAT_TYPE = DelimitedText, FORMAT_OPTIONS (FIELD_TERMINATOR = ',') ); |

Step 7: Create an external table

The external table syntax is similar to a regular SQL table. We define the columns and their data type in the usual way. The column names and their data type should match with the data in the text file. For example, if you have dates in a column, you cannot insert into a bit data type column.

In the below external table, we define five columns and their data type. It uses a few other parameters to define the data source, its location and file format:

- Location: Here, we specify the file name in the storage container. If you use a directory inside the container, you must specify the directory name as well. In my case, sales.txt is placed directly inside the container so we can specify the location as ‘./Sales.text’.

- Data_Source: Specify the external data source name (Step 5)

- File_Format: Specify the external file format we created in Step 6

|

1 2 3 4 5 6 7 8 9 10 11 |

CREATE EXTERNAL TABLE dbo.SQLExternal ( [Region] varchar(1000), [Country] varchar(1000), [Item Type] varchar(1000), [Sales] varchar(1000) ) WITH ( LOCATION='/Sales.txt', DATA_SOURCE=AzureStorage, FILE_FORMAT=TextFile ); |

It creates the external table in the [AzurePolyBaseDemo] database. You can view it in SSMS by navigating to Source database-> External Resources -> External Tables.

You can retrieve the data from the external table, similar to a regular SQL table. It reads the comma-separated text file data from the Azure Blob Storage and displays it in a tabular format, as shown below.

We can view its actual execution plan that shows that it is a remote query. In the remote query properties, we have the remote source as Polybase_ExternalComputation.

Use Azure Data Lake Storage to insert data from on-premises SQL Server using PolyBase

In this section, we execute a SQL query for the on-premise database and store the results into the Azure data storage. The one-use case of this may be a data archival where you can dump your old data into Azure storage, but still, it can be accessed using regular SQL query.

For this requirement, we need to enable PolyBase export that allows inserting into an external table.

|

1 |

EXEC sp_configure 'allow polybase export', 1; |

Create an external table with the required columns and their data types. In the location, we specify a direct name in the container of Azure data storage.

- LOCATION=’/AdventureWorks/’

- Data_Source: External data source name (From Step 5)

- File_Format: External file format (From Step 6)

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 |

CREATE EXTERNAL TABLE SalesPerson ( [BusinessEntityID] [int] NOT NULL, [TerritoryID] [int] NULL, [SalesQuota] [money] NULL, [Bonus] [money] NOT NULL, [CommissionPct] [smallmoney] NOT NULL, [SalesYTD] [money] NOT NULL, [SalesLastYear] [money] NOT NULL, [ModifiedDate] [datetime] NOT NULL ) WITH (LOCATION='/AdventureWorks/', DATA_SOURCE = AzureStorage, FILE_FORMAT = TextFile ); |

It creates the external table pointing to the Azure Data Storage directory. You can refresh the external tables in SSMS and view them as shown below.

Insert data into the external table

In this step, we insert data from a SQL database (on-premise) and insert them into the external table.

-

Source: On-premise database

[AdventureWorks2019].[Sales].[SalesPerson] -

Destination: External table pointing towards Azure Data Storage blob container

[AzurePolyBaseDemo].[dbo].[SalesPerson]

Insert into AzurePolyBaseDemo.dbo.SalesPerson

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 |

Insert into AzurePolyBaseDemo.dbo.SalesPerson ( [BusinessEntityID] ,[TerritoryID] ,[SalesQuota] ,[Bonus] ,[CommissionPct] ,[SalesYTD] ,[SalesLastYear] ,[ModifiedDate]) SELECT [BusinessEntityID] ,[TerritoryID] ,[SalesQuota] ,[Bonus] ,[CommissionPct] ,[SalesYTD] ,[SalesLastYear] ,[ModifiedDate] FROM [AdventureWorks2019].[Sales].[SalesPerson] |

It inserts 17 rows in the external table [SalesPerson] as shown below.

You can retrieve the data from the external table using the Select statement, as shown below.

If you open the storage account directory, it displays the dataset ( query output) in four partitioned text files.

You can download the text file and view the content similar to the output of the external table query.

Conclusion

In the article, we explored the SQL Server PolyBase and its way to fetch data from the text file stored in the Azure Data Storage. The PolyBase works as an intermediate for communication between the Azure Data Storage and SQL Server. You can explore it for your environment requirement.

- Understanding PostgreSQL SUBSTRING function - September 21, 2024

- How to install PostgreSQL on Ubuntu - July 13, 2023

- How to use the CROSSTAB function in PostgreSQL - February 17, 2023