This article will explore Parameterized SQL notebooks in Azure Data Studio.

Introduction

SQL Notebook or the Jupyter notebook in the Azure Data Studio has excellent capabilities that include codes and texts in a single file. You can connect it to desired connection and execute it. You can view displays query results integrated into the SQL notebook. Thus, you do not have to migrate different consoles. It is an open-source application that contains live code, equations, visualizations, and text.

The Azure Data Studio notebook has different kernels such as SQL Server, Python, Pyshark, PowerShell, Spark |R, Spark |Scala.

- SQL Server: To write T-SQL queries for relational database

- Python Kernel: To write Python scripts for local development

- Spark kernel: To write Scala and R code using spark

- PySpark3 and PySpark Kernel: To write the Python code using the spark compute

Azure Data Studio includes parametrization support for Python, PySpark, PowerShell, and .Net Interactive Kernels. This article explores how you can use these parametrizations with notebooks.

Pre-requisites

-

Install the latest version of Azure Data Studio. In this article, I use ADS1.30 June 2021 version. You can browse Microsoft docs for downloading it

- You can refer to SQL Notebooks introduction and overview for becoming familiar with the SQL notebook features

- You can refer to Use Python SQL scripts in SQL Notebooks of Azure Data Studio for executing T-SQL scripts using Python code in the notebooks

Parameterized Notebook with Papermill

In this section, we will create a parameterized notebook using the Python kernel. Launch Azure Data Studio and go to File -> New Notebook.

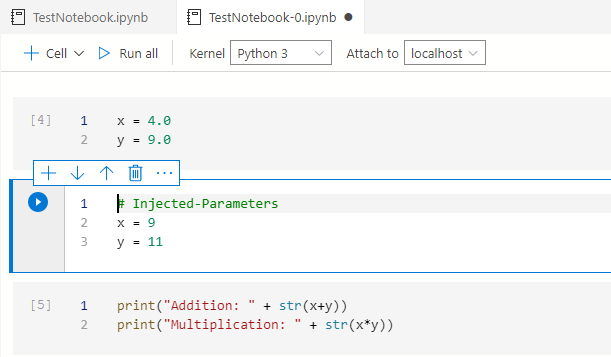

In the kernel, select Python 3 and Attach to as localhost as shown below.

If the Python is not installed for ADS or has an older version, it prompts you to install or upgrade its version. You can follow the instructions written and restart ADS.

Click on +Code and use the following script to install the Papermill package in Python. It installs the papermill package in your ADS.

|

1 2 |

import sys !{sys.executable} -m pip install papermill --no-cache-dir --upgrade |

It downloads and installs the papermill package, as shown below.

You can validate its version using the below code. Its version in my environment is 2.3.3

|

1 2 |

import sys !{sys.executable} -m pip list |

Now, we can convert an existing cell to parameterized cell. For the demonstration purpose, I wrote a sample code that declares values for two parameters X and Y.

Click on the eclipse, and you can find a list of options. Click on the option – Make parameter cell.

- Note: If you do not make a cell as a parameter cell, it acts as a regular code or text cell in the ADS

Once you click on the option – Make Parameter Cell, it changes the existing cell into a parameter call. It displays a tag – Parameters as shown below.

To call these parameters, add a new code cell and write python code that performs addition and multiplication of both parameters.

|

1 2 |

print("Addition: " + str(x+y)) print("Multiplication: " + str(x*y)) |

Click on Run All to execute both cells and performing calculations using the parameters. You get the output using the values specified in the parameter cell.

In the above example, we entered the parameter values in the code cell. However, in this case, we want to provide values during runtime. Therefore, to override the existing(default) values, you can click on the highlighted icon – Run with Parameters-to input parameters at the runtime.

You get a prompt to enter the values of the parameters. Enter the value of the specified parameter and press Enter. If you do not wish to enter new values of the parameter, press Escape on the keyboard.

Similarly, as I have two variables in my notebook, it prompts for entering the value of another parameter.

In the notebook, you can view the entered parameter values in the injected parameters section.

Click on the button – Run all to execute the notebook with injected parameters and display the results. You can note here that we have both default values and Injected values. However, for the calculation purpose, ADS uses injected values as it overrides the default ones.

Execute parameterized notebook using the URI

Suppose you prepared a SQL notebook so that your team members can execute it after entering the values of the parameters. In this case, you can store the SQL Notebook in a repository such as GitHub. Later, open the URL in any browser and enter the query with parameters values.

The ADS notebook supports HTTP, HTTPS, FILE URI schema in the azuredatastudio://microsoft.notebook/open?url= format.

Therefore, the script to open notebook URI schema from GitHub is as below:

|

1 |

azuredatastudio://microsoft.notebook/open?url=https://raw.githubusercontent.com/microsoft/sql-server-samples/master/samples/applications/azure-data-studio/parameterization.ipynb?x=10&y=20 |

In this query, we have the following fields:

- azuredatastudio: It refers that the notebook will open in the Azure Data Studio

- Microsoft.notebook.open?url: Enter the GitHub URL with the complete path to the notebook. Here, note that the notebook extension is .ipynb

- ?x=10&y=20: Enter the parameter name and its value

The browser prompts for permissions to open Azure Data Studio.

Click on Allow, and you get another prompt to allow notebook core extensions to open the URI.

Click on Open and Enter on Yes in the next window.

It downloads the notebook from the GitHub URI and displays your injected parameter values. You can run it to display the results.

Parameterized notebooks for PowerShell kernel

PowerShell scripts help automate DBA stuff. You can perform various tasks using PowerShell in a simplified manner. For this article, we will not focus on PowerShell scripting. Our main motive is to execute PowerShell scripts having parameters using notebooks.

Suppose we automated a task for restoring the [AdventureWorks] database. It involves the following steps:

- Download the backup file from the GitHub URL – “https://github.com/Microsoft/sql-server-samples/releases/download/adventureworks/AdventureWorks2016.bak

- We Save the backup file into a local directory

- Restore the database on the specified instance

Our requirement is to use variables for the URL, backup directory, SQL instance and the database name. Create a notebook in ADS, Select the kernel as PowerShell and enter the following PS code.

|

1 2 3 4 |

$BakURL = "https://github.com/Microsoft/sql-server-samples/releases/download/adventureworks/AdventureWorks2016.bak"; $BakFile = "c:/Temp/AdventureWorks2016.bak"; $SQLinstance="sqlnode3" $DBName="Mydemodb" |

As shown earlier, right-click on cell eclipse and convert this cell as a parameter cell. You get the parameter tag.

Now, open another code cell and enter the script for restoring a database backup. This script uses the parameters for the backup URL, backup directory, SQL instance and database name.

- The Invoke-WebRequest downloads the backup file from the specified backup URL and saves it in the location specified by variable $BakFile

- The Restore-SqlDatabase cmdlet restores the database using values specified by these variables

|

1 2 |

Invoke-WebRequest -Uri $BakURL -OutFile $BakFile; Restore-SqlDatabase -ServerInstance SQLinstance -BackupFile $BakFile -Database $DBName -AutoRelocateFile |

The overall notebook code looks like below.

If we directly run the notebook with explicit values of these parameters, it takes default values and executes the PS script. To enter the values of the variables explicitly, click on the Run with parameter icon.

It displays the parameter default values. You can put a new value or press Enter to move to a new parameter window.

For the backup file directory, I modified the value to C:\Temp\AdventureWorks.bak

I go with the default value for the parameter $SQLinstance.

Next, I specified the value for the $DBName variable as MydemoNotebookDB.

Once we enter the last parameter value, it returns to the notebook with new cell–injected parameters. In this injected parameter, you can see the value for two parameters – $BakFile and $DBName. We used default values for the $BakURL and $SQLInstance parameter. It only shows those parameters values that we need to override with the default values.

Click on Run All, and you can see the database [MydemoNotebookDB] restored in the SQL instance [SQLNode3].

Conclusion

This article explored the Azure Data Studio SQL Notebook feature – Parameterized notebook. It enables users to deploy a standard script that requires a few parameter values as input. You can pass the value of the parameter and run the script. You can use it for Python, PowerShell scripts automation of SQL DBA tasks.

- Understanding PostgreSQL SUBSTRING function - September 21, 2024

- How to install PostgreSQL on Ubuntu - July 13, 2023

- How to use the CROSSTAB function in PostgreSQL - February 17, 2023