Microsoft Azure has been a leading cloud service provider over the past few years. This article gives an overview of the various cognitive services offered by Azure. In plain English, the word ‘cognitive’ describes intellectual activities such as thinking or reasoning. Azure Cognitive Services bring that kind of intelligence to software: they allow people to interact with machines as if the machines had the ability to see, listen, talk, and respond to events accordingly.

Microsoft groups these services under its AI and Machine Learning offering on Azure and exposes them as APIs that other applications can call. The services are easy and convenient to use once you have a basic understanding of working with APIs. You don’t need to be an expert in Machine Learning or Artificial Intelligence to consume them. Azure also offers client SDKs in multiple programming languages, such as C#, JavaScript, and Python, so that you can integrate the services with your existing applications with ease.
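To make the API-based approach concrete, here is a minimal sketch of how a REST call to one of these services is typically assembled. It only builds the request and does not send it. The endpoint and key are placeholders, `build_request` is a hypothetical helper name, and the path assumes the Text Analytics v3.1 language detection operation; check the reference for the service you use.

```python
import json
import urllib.request


def build_request(endpoint, path, key, payload):
    """Build (but do not send) a JSON POST request for a Cognitive Services REST API."""
    url = endpoint.rstrip("/") + "/" + path.lstrip("/")
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # The key of your Azure resource authenticates the call.
            "Ocp-Apim-Subscription-Key": key,
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder endpoint and key: replace them with the values of your own resource.
request = build_request(
    "https://my-resource.cognitiveservices.azure.com/",
    "/text/analytics/v3.1/languages",
    "<your-key>",
    {"documents": [{"id": "1", "text": "Bonjour tout le monde"}]},
)
print(request.get_method(), request.full_url)
# Sending it would be: urllib.request.urlopen(request)
```

The client SDKs wrap the same pattern, so the choice between raw REST calls and an SDK is mostly a matter of convenience.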

Cognitive Services available in Azure

Azure Cognitive Services fall into five main categories, each of which offers multiple services. The categories are as follows:

- Vision – Used mostly for image recognition

- Language – Used to identify natural language and learn from human interactions

- Speech – Used to recognize and convert speech to text and vice versa

- Decision – Used to identify patterns in data and take necessary actions

- Search – Used to integrate web search APIs with your existing applications

Let us now learn more about these services in detail.

Vision

The Vision APIs handle data related to images and videos. You can use them to build interactive applications that recognize images and videos and extract meaningful information from them. For example, you can identify the types of objects present in an image or convert handwritten notes to text documents. The main APIs available under this category are as follows:

- Computer Vision – This API classifies images, reads printed and handwritten text and converts it to digital text, and generates descriptions and tags based on the content of an image. It can also detect human faces in images

- Form Recognizer – This API reads data from forms and converts it into a structured electronic format. It is helpful for digitizing existing paper-based documents

- Video Indexer – Users can use this API to generate captions from videos, identify content, search for specific content, and interpret the text in the videos

- Face – This API is specifically designed to detect and identify people’s faces and interpret emotions. It can be integrated with existing applications

- Custom Vision – This API lets you build custom computer vision models that target specific business needs

Figure 1 – Vision – Image Recognition API (Source)

As you can see in the figure above, the handwritten notes have been converted into a piece of structured information that can now be used by applications accordingly.
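As a rough illustration of working with such output, the sketch below pulls the recognized lines out of a text-recognition result. It assumes the JSON shape returned by the Computer Vision Read operation (v3.2), where lines sit under `analyzeResult.readResults`; the sample data is written by hand for this example and is not real service output, and `extract_lines` is a hypothetical helper name.

```python
def extract_lines(read_result):
    """Return the recognized text lines from a Read result, page by page."""
    # The operation is asynchronous, so only a finished result carries text.
    if read_result.get("status") != "succeeded":
        return []
    pages = read_result.get("analyzeResult", {}).get("readResults", [])
    return [line["text"] for page in pages for line in page.get("lines", [])]


# Hand-written sample that imitates the response shape (assumption).
sample_result = {
    "status": "succeeded",
    "analyzeResult": {
        "readResults": [
            {"page": 1, "lines": [{"text": "Buy milk"}, {"text": "Call Sam at 5 pm"}]}
        ]
    },
}
print(extract_lines(sample_result))
```

Returning an empty list for unfinished results keeps the caller simple: it can poll until the list is no longer empty or the status reports a failure.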

Language

The Language APIs are among the most used services in Azure Cognitive Services. They give applications the ability to analyze text and recognize intents and entities in it, which makes it easier for your application to communicate with your customers more naturally. There are several services available under this category as well.

- Immersive Reader – This service helps users read and comprehend text. Suppose you have a document that some readers find hard to follow. In such a case, you can embed this service in your application to read the text aloud, translate it, or highlight parts of it

- LUIS – LUIS stands for Language Understanding Intelligent Service and is used for natural language understanding. You can use it in your chatbots to recognize what users mean as they talk and interact with your bot

- QnA Maker – QnA Maker is a service created by Microsoft to maintain FAQ question banks for chatbots. If your organization has an FAQ page, you can load that information into QnA Maker, and the chatbot can return it to users while they interact with the bot

- Text Analytics – The Text Analytics services are mostly used to identify sentiment and named entities in the text passed to these APIs. They are useful for analysing the sentiment of tweets or other social media content

- Translator – This is a relatively new service that translates text from one language to another in real-time. At the time of writing this post, it supports more than 90 languages

Figure 2 – Language Service – LUIS (Source)

In the above figure, LUIS helps the chatbot to identify the intent and entities linked to the conversation with the user.

Figure 3 – Language Service – QnA Maker (Source)

This is an example of the QnA Maker that you can use to create a FAQ chatbot that can answer users’ questions based on the inputs available.
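To show what consuming one of the Language services can look like, here is a small sketch for the sentiment analysis mentioned under Text Analytics. It assumes the v3.1 request and response shapes (a `documents` list going in, a `sentiment` label per document coming back). The helper names are hypothetical and the sample response is written by hand, not returned by the service.

```python
def sentiment_payload(texts, language="en"):
    """Wrap plain strings in the document list the sentiment operation expects."""
    return {
        "documents": [
            {"id": str(i), "language": language, "text": text}
            for i, text in enumerate(texts, start=1)
        ]
    }


def label_sentiments(response):
    """Map each document id to the sentiment label found in the response."""
    return {doc["id"]: doc["sentiment"] for doc in response.get("documents", [])}


payload = sentiment_payload(["I love this phone", "The battery died in an hour"])

# Hand-written sample that imitates the response shape (assumption).
sample_response = {
    "documents": [
        {"id": "1", "sentiment": "positive"},
        {"id": "2", "sentiment": "negative"},
    ],
    "errors": [],
}
print(label_sentiments(sample_response))
```

Keeping the document ids as strings makes it easy to match each result back to the text that produced it.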

Speech

The Speech services are used to detect and analyse voice-based content from the users. There are four basic services under this category.

- Speech to Text – This service converts spoken conversation into textual data that can be read and searched. One implementation is voice-based typing, where you speak and your speech gets converted to text on the fly

- Text to Speech – This is the opposite of the speech-to-text service. It converts textual data into speech using a synthesized voice. The voice has automated modulation that gives it a natural feel, as if a human were reading the text

- Speech Translation – This service translates speech from one language to another in real-time as users are speaking. A lot of online translation applications these days make use of this feature to translate the voice conversations of their users

- Speaker Recognition – This is a new service and is still in preview mode. It can be used to identify and verify who is speaking based on the characteristics of their voice
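For the Text to Speech service, the text is usually sent as SSML (Speech Synthesis Markup Language), which names the voice that should read it. The sketch below only builds such a document. `build_ssml` is a hypothetical helper, and the default voice name is an assumption; pick one from the voice list of your Speech resource.

```python
from xml.sax.saxutils import escape, quoteattr


def build_ssml(text, voice="en-US-JennyNeural", lang="en-US"):
    """Build a minimal SSML document that asks one voice to read the text."""
    return (
        f"<speak version='1.0' xml:lang={quoteattr(lang)}>"
        # escape() keeps characters such as & and < from breaking the markup.
        f"<voice name={quoteattr(voice)}>{escape(text)}</voice>"
        "</speak>"
    )


ssml = build_ssml("Tom & Jerry start at 5 pm")
print(ssml)
```

Escaping the input matters because the text often comes from users or documents and may contain characters that are special in XML.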

Decision

The Decision API services apply machine learning algorithms to your data. They help to identify patterns and trends within a dataset and aid in the decision-making process. You can leverage these services without any additional knowledge of how they work behind the scenes: Microsoft takes care of implementing and training the models and exposes the results through the API. The main services available under this category are as follows.

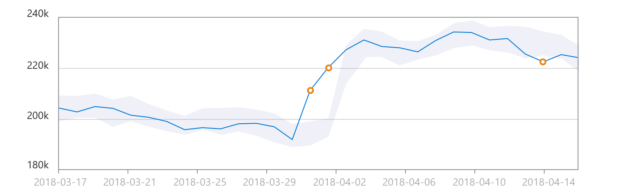

- Anomaly Detector – This is used to detect anomalies in the underlying dataset. You can identify values that deviate from the expected pattern and take appropriate actions based on them

- Content Moderator – This API helps moderate user-generated content, such as posts, images, or videos, by identifying potentially offensive material and flagging it. It is especially useful for social media applications

- Personalizer – The Personalizer is used to create personalized recommendations for every individual user based on their browsing activities. Applications use it to improve sales and engagement by adapting to how users interact with them

Figure 4 – Decision Services – Anomaly Detector (Source)
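A call to the Anomaly Detector could be prepared and interpreted roughly as follows. The sketch assumes the v1.0 batch detection shapes: a `series` of timestamp and value pairs plus a `granularity` going in, and an `isAnomaly` list with one flag per point coming back. The helper names are hypothetical, and both the readings and the response are made up for illustration.

```python
from datetime import datetime, timedelta


def detect_payload(points, granularity="daily"):
    """Turn (timestamp, value) pairs into the request body for batch detection."""
    return {
        "granularity": granularity,
        "series": [{"timestamp": ts, "value": value} for ts, value in points],
    }


def flagged_points(points, response):
    """Keep the input points that the response marks as anomalies."""
    return [p for p, is_anomaly in zip(points, response["isAnomaly"]) if is_anomaly]


# Twelve made-up daily readings with one obvious spike.
start = datetime(2022, 1, 1)
values = [10, 11, 10, 12, 11, 10, 95, 11, 10, 12, 11, 10]
points = [
    ((start + timedelta(days=i)).strftime("%Y-%m-%dT%H:%M:%SZ"), value)
    for i, value in enumerate(values)
]
payload = detect_payload(points)

# Hand-written sample that imitates the response shape (assumption).
sample_response = {"isAnomaly": [value > 50 for value in values]}
print(flagged_points(points, sample_response))
```

The service decides which points are anomalous; the application only has to pair the flags with its own data and act on them.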

Web Search

The web search APIs are a collection of APIs that enhance your applications’ ability to search the internet. You can perform different types of searches, such as Web Search, News Search, and Image Search. However, moving forward, these services are going to be moved out of the Cognitive Services space to the Microsoft Bing Search APIs.

Conclusion

In this article, we have explored the Cognitive Services in Azure. There are five main categories of services in this area. Vision helps in interpreting pictures and videos; for example, you can analyze videos to identify people or objects within them. Using Speech, you can enable your applications to convert speech to text or vice versa and implement speech translation as well. Language enables applications to understand natural language from users and provide outputs as desired, and is mostly implemented in chatbots to understand user input. In the Decision category, you can use services like Anomaly Detector or Personalizer to help your applications react to real-time scenarios. Using the Search APIs, you can look for content on the web and enrich your applications accordingly. This article has covered the services in a nutshell. In my upcoming articles in this series, I will cover each of the services in depth.
