In this article, we will review on managing database files of SQL Server running on Azure Kubernetes service.

Please refer to “Creating a Kubernetes cluster” section in SQL Server in Azure Kubernetes Service (AKS) for creating a Kubernetes cluster using Standard_B2s size VM’s

We will cover the following topics of about managing database file in SQL Server running on Kubernetes cluster.

- Create multiple persistent volume claims and mount them to pod running SQL Server container

- Changing the location of tempdb database files in Kubernetes

- Changing the location master database files in Kubernetes

Mount multiple volumes to the Pod

By default, only one volume is mounted to the pod that is running SQL Server container when we deploy SQL Server using default manifest file in SQL Server in Azure Kubernetes Service (AKS). All the data and log files of the databases reside in the same volume. In case if you want to place the data files in one volume and the log files in another volume or spread database files across different volumes, we need to create multiple volumes and mount them to the pod running SQL Server container.

After creating the Kubernetes cluster using steps mentioned in SQL Server in Azure Kubernetes Service (AKS) and the nodes are in the ready state, create multiple volumes using below manifest files.

Open cloud shell in your Azure portal and run the following command to create pv1.yaml manifest file.

|

1 |

cat -> pv1.yaml |

Paste the following code and press Ctrl + Z.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 |

kind: StorageClass apiVersion: storage.k8s.io/v1beta1 metadata: name: azure-disk provisioner: kubernetes.io/azure-disk parameters: storageaccounttype: Standard_LRS kind: Managed --- kind: PersistentVolumeClaim apiVersion: v1 metadata: name: mssql-data annotations: volume.beta.kubernetes.io/storage-class: azure-disk spec: accessModes: - ReadWriteOnce resources: requests: storage: 8Gi |

Similarly, create a pv2.yaml manifest file with the following script.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 |

kind: StorageClass apiVersion: storage.k8s.io/v1beta1 metadata: name: azure-disk provisioner: kubernetes.io/azure-disk parameters: storageaccounttype: Standard_LRS kind: Managed --- kind: PersistentVolumeClaim apiVersion: v1 metadata: name: mssqllogs annotations: volume.beta.kubernetes.io/storage-class: azure-disk spec: accessModes: - ReadWriteOnce resources: requests: storage: 8Gi |

Execute ls command and make sure you have both pv1.yaml and pv2.yaml files

Now we need to apply both pv1.yaml and pv2.yaml to create two persistent volumes and volume claims.

Apply pv1.yaml using the below script. Applying pv1.yaml creates a persistent volume claim with name mssql-data of capacity of 8GB.

|

1 |

kubectl apply -f pv1.yaml |

Similarly, apply pv2.yaml which creates persistent volume claim with name “mssqllogs” of capacity 8GB in Azure Kubernetes Service.

|

1 |

kubectl apply -f pv2.yaml |

Now we need to create a deployment and specify both “mssql-data” and “mssqllogs” volume claims in the deployment and apply the deployment manifest.

Before creating a deployment, create a secret key that will be used in the deployment. Please execute the following script by replacing the password of your choice.

|

1 |

kubectl create secret generic mssql --from-literal=SA_PASSWORD="yourownpassword" |

Now create a deployment manifest file using the below script.

|

1 |

cat -> sql.yaml |

Paste the following script and press CTRL + Z.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 |

apiVersion: apps/v1beta1 kind: Deployment metadata: name: mssql-deployment spec: replicas: 1 template: metadata: labels: app: mssql spec: terminationGracePeriodSeconds: 10 containers: - name: mssql image: mcr.microsoft.com/mssql/server:2017-latest ports: - containerPort: 1433 env: - name: MSSQL_PID value: "Developer" - name: ACCEPT_EULA value: "Y" - name: MSSQL_SA_PASSWORD valueFrom: secretKeyRef: name: mssql2 key: SA_PASSWORD volumeMounts: - name: mssqldb mountPath: /var/opt/mssql - name: mssqllog mountPath: /var/opt/mssqllog volumes: - name: mssqldb persistentVolumeClaim: claimName: mssql-data - name: mssqllog persistentVolumeClaim: claimName: mssqllogs --- apiVersion: v1 kind: Service metadata: name: rbcsql spec: selector: app: mssql ports: - protocol: TCP port: 1433 targetPort: 1433 type: LoadBalancer |

Now apply the sql.yaml to create a deployment which creates a pod and mount both volumes claims to it.

|

1 |

kubectl apply -f sql.yaml |

Verify the status of the pods using the below command.

|

1 |

kubectl get pods |

Once the pod is in the running state, we can execute commands on the pod and check the files inside the volumes.

To run commands against pod in Azure Kubernetes Service, we need to get the pod name and replace it in the below script.

|

1 |

kubectl exec -it mssql-deployment-6bf47d8f65-qp8dj bash |

Execute ls command against pod to retrieve files and directories. The persistent volumes are under /var/opt.

Execute below command to navigate to /var/opt and execute ls command to list files and directories under /var/opt

|

1 |

cd /var/opt |

Changing the tempdb database file location

By default, the log and data files are placed under “/var/opt/mssql/data”. the error log, agent log, and default trace files are placed under “/var/opt/mssql/log”

Now to move the log file of a tempdb database from “/var/opt/mssql/data” in the default volume “mssqldb” associated with persistent volume claim “mssql-data” to “/var/opt/mssqllog” in the new volume “mssqllog” which is associated with persistent volume claim “mssqllogs”.

Get the IP address of the SQL Server running in Azure Kubernetes Service using the below command and login to the SQL Server using SQL Server management studio.

|

1 |

kubectl get svc |

Use the IP address and the secret you created earlier to login into the SQL Server using SQL Server management studio.

Execute below T-SQL script to know the current location of the log file of tempdb database.

|

1 2 3 4 |

SELECT name, physical_name AS CurrentLocation FROM sys.master_files WHERE database_id = DB_ID(N'tempdb'); GO |

Now execute the flowing script to change the location of the log file of a tempdb database.

|

1 2 3 |

ALTER DATABASE tempdb MODIFY FILE (NAME = templog, FILENAME = '/var/opt/mssqllog/templog.ldf'); GO |

Now restart the SQL Server by running the SHUTDOWN command in SQL Server management studio which will automatically create the log file of tempdb database “templog.ldf” file in the new location.

Changing the master database file location.

Generally moving master database files involves the following steps.

- Set the new location using mssql-conf

- Stop the SQL Server

- Move the files to the new location

- Start the SQL Server

Changing the master database file of SQL Server running on Kubernetes cluster in Azure Kubernetes Service is not a straightaway procedure.

In Kubernetes, you need to run commands at the pod level to access mssql-conf. to execute commands at the pod level using the below command and replace the pod name with your pod name.

|

1 |

kubectl exec -it mssql-deployment-576c5bdcdf-sv8r5 bash |

mssql-conf is at location “/opt/mssql/bin”. Navigate to “/opt/mssql/bin” and run the following command to change the location of the master database log file.

Execute the following command to change the location of the master database log file.

|

1 |

./mssql-conf set filelocation.masterlogfile /var/opt/mssqllog/mastlog.ldf |

Now we need to restart SQL Server. Issue a shutdown command from the SQL Server management studio.

As soon as shutdown command was executed the SQL Server and the pod running SQL Server container in Azure Kubernetes Service is also restarted. Now we need to move the log file of the master database to a new location and start the SQL Server. But, to move the log file to a new location we need to execute the “mv” command on the pod and the pod is not in the “Running” state.

In Kubernetes, as per the deployment, the pod will always check if the SQL server services are running or not. If the SQL Server is not running, the pod will restart, which will automatically restart the SQL Server inside it. But the SQL Server does not find the master database log file in the new location as we have not yet moved it. In this case, the SQL Server will never start, and the pod in Azure Kubernetes Service will go into “Error” status and keeps on restarting and we will be not able to move the log file to a new location as we need pod in “Running” status to execute “mv” command.

|

1 |

kubectl logs -p mssql-deployment-576c5bdcdf-sv8r5 |

I tried a workaround to sort out this. The following are the steps.

- Delete the existing deployment

- Create a dummy pod

- Mount the volumes to dummy pod

- Move the file to a new location

- Delete the dummy pod

- Create the deployment again by applying sql.yaml manifest file.

|

1 |

kubectl delete mssql-deployment |

Deploy below manifest to create a dummy pod and mount the volumes.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 |

kind: Pod apiVersion: v1 metadata: name: dummy spec: volumes: - name: mssqldb persistentVolumeClaim: claimName: mssql-data - name: mssqllog persistentVolumeClaim: claimName: mssqllogs containers: - name: dummy image: nginx ports: - containerPort: 80 name: "http-server" volumeMounts: - mountPath: "/var/opt/mssql" name: mssqldb - mountPath: "/var/opt/mssqllog" name: mssqllog |

To run commands in the pod use the below script.

|

1 |

kubectl exec -it dummy bash |

Use the flowing script to move the log file of the master database to a new location in Azure Kubernetes Service.

|

1 |

mv /var/opt/mssql/data/mastlog.ldf /var/opt/mssqllog/mastlog.ldf |

Verify if the file is moved or not, exit the pod and delete the dummy pod.

|

1 |

kubectl delete pod dummy |

Once the dummy pod is deleted. apply the sql.yaml again.

|

1 |

kubectl apply -f sql.yaml |

Check the status of the pod by executing the below script.

|

1 |

ubectl get pods |

The pod is in Running status as it started SQL Server services successfully. The SQL Server services are successfully started as the log file of the master database is found in the new location.

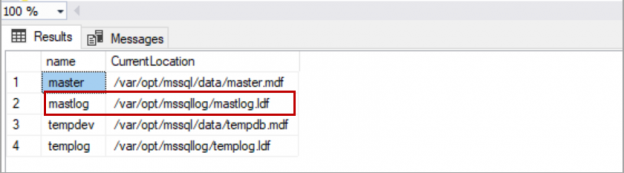

Login to SQL server using SQL Server management studio and check the location of the master database log file.

|

1 2 3 4 |

SELECT name, physical_name AS CurrentLocation FROM sys.master_files WHERE database_id = DB_ID(N'master'); GO |

Conclusion

In this article, we have explored how to create multiple persistent volume claims in Azure Kubernetes Service, mount the persistent volume claim to the pods and move the database files of SQL server running in Kubernetes cluster from the location in the default volume to the location in another volume. In case, if you have any question or other methods/workarounds to move the master database files to a new location, please feel free to post in comment section below.

- Geo Replication on Transparent Data Encryption (TDE) enabled Azure SQL databases - October 24, 2019

- Overview of the Collate SQL command - October 22, 2019

- Recover a lost SA password - September 20, 2019