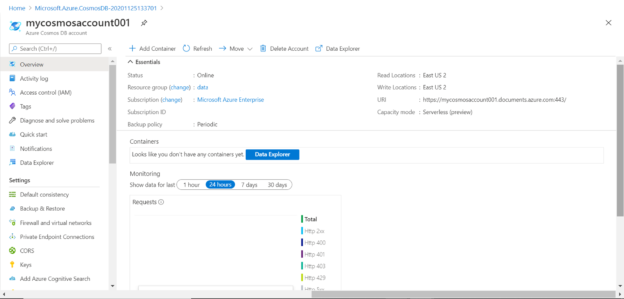

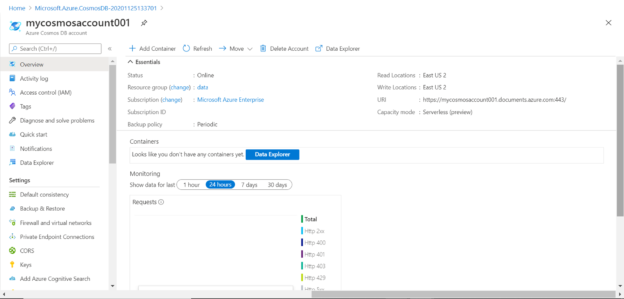

In this article, we will learn how to create a serverless instance of Azure Cosmos DB and understand which use cases it suits.

Read more »

This article provides a quick overview of Azure Machine Learning, an end-to-end cloud framework that can help you build, manage and deploy up to thousands of machine learning models. We provide a quick tutorial that shows how to take your existing, pre-trained machine learning models and migrate them into the Azure ML framework.

Read more »

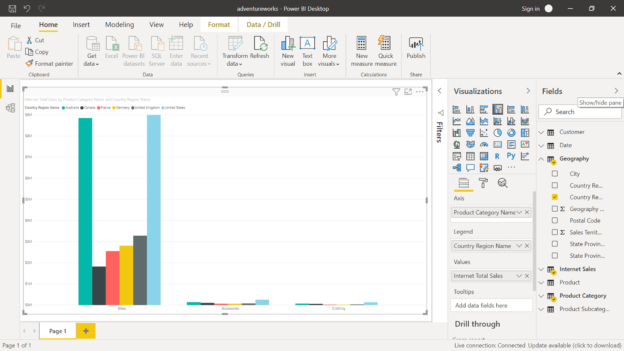

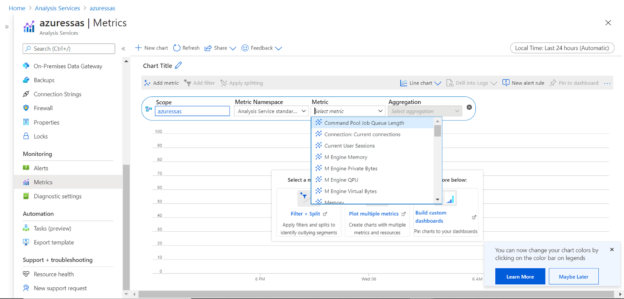

This article walks you through deploying a sample model on Azure Analysis Services and consuming it with different tools.

Read more »

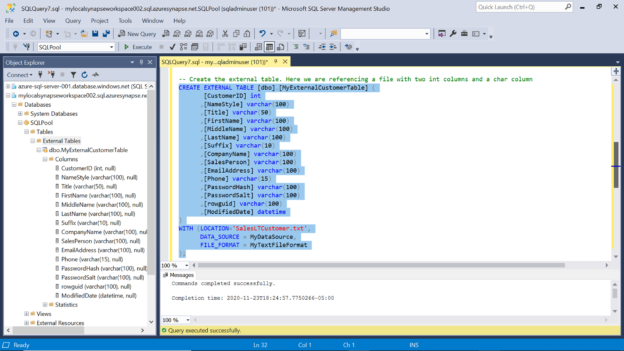

In this article, we will learn to create external tables in Azure Synapse Analytics with dedicated SQL pools.

Read more »

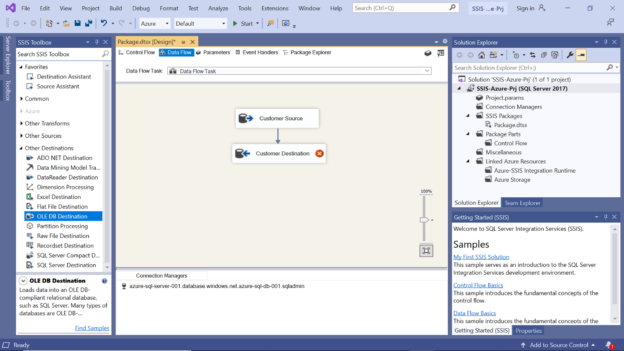

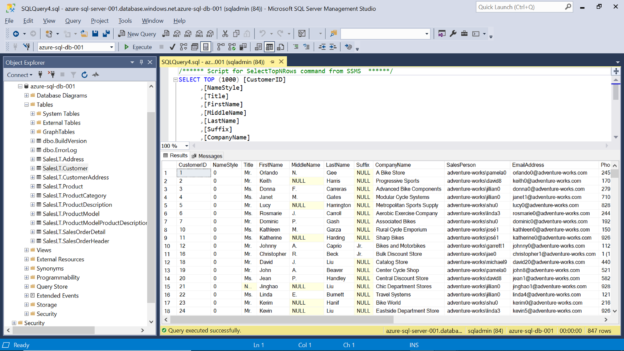

In this article, we will learn how to populate data from an Azure SQL database into Azure Synapse Analytics using SQL Server Integration Services (SSIS).

Read more »

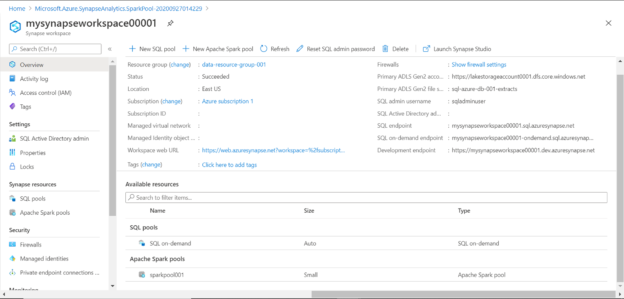

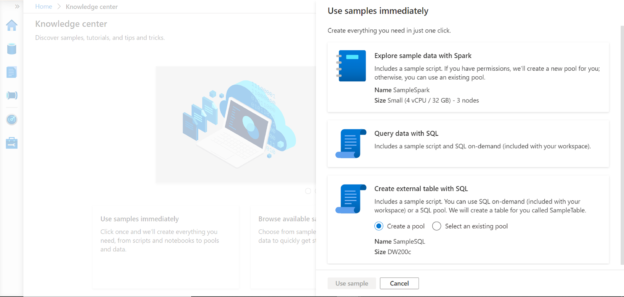

In this article, we will learn how to create a Spark pool in Azure Synapse Analytics and use it to process data.

Read more »

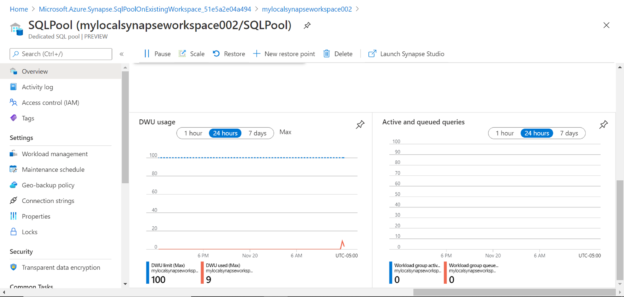

This article helps you create a dedicated SQL pool in Azure Synapse Analytics, which is the first step in setting up a data warehousing environment in Synapse.

Read more »

This article shows how to work with SQL on-demand pools and explains the fundamentals of using them in Azure Synapse Analytics.

Read more »

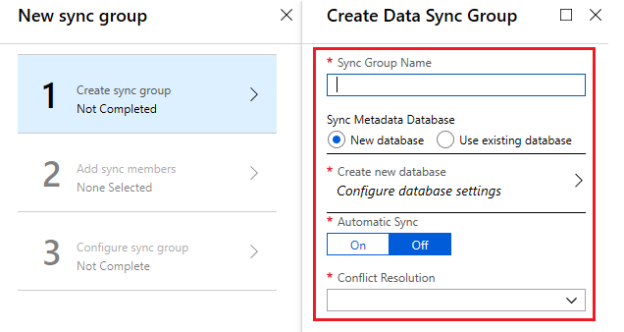

In this article, you will learn how to set up Azure Data Sync and how to create and configure a sync group between an Azure SQL database and an on-premises SQL Server.

Read more »

This article will help you get started with Azure Synapse Studio and its various features.

Read more »

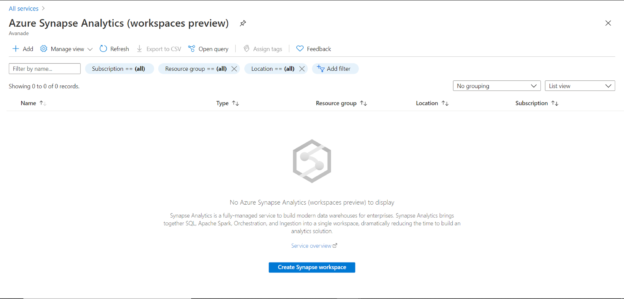

This article walks through the process of creating an Azure Synapse Analytics workspace and covers some related features.

Read more »

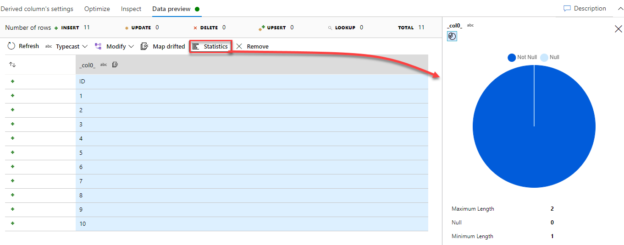

In the previous articles of this series, we discussed how to perform different types of activities using Azure Data Factory. These tasks include copying data between different data stores, running SSIS packages and transforming data before writing it to a new data store.

Read more »

In Azure, importing and exporting an Azure SQL database is a key part of database migration. It is important to choose the most viable option for your migration strategy and business requirements. For applications configured with Azure SQL PaaS databases, a migration specialist can identify several common scenarios in which Azure PaaS databases are scripted, copied, migrated, moved or backed up.

Read more »

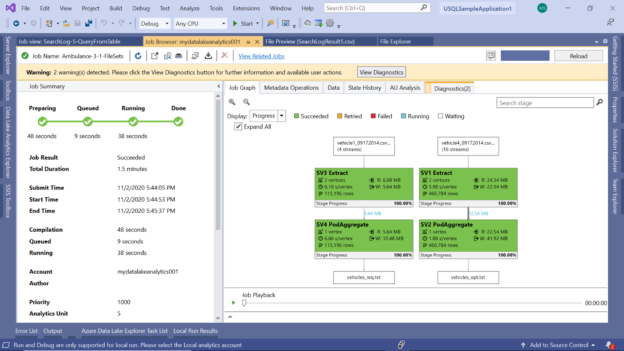

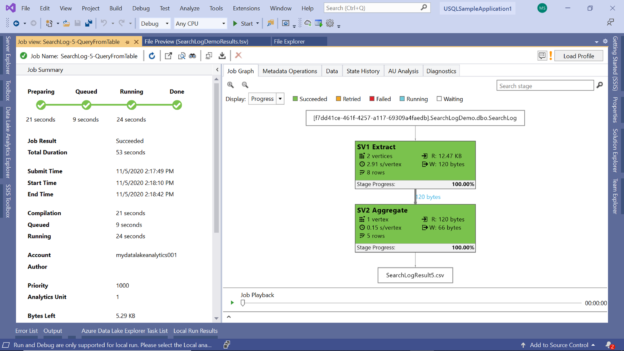

In this article, we will see how to access data in an Azure SQL database from Azure Data Lake Analytics.

Read more »

This article describes how to get started with Azure Analysis Services and explains the configuration and pricing options for creating your first Analysis Services instance.

Read more »

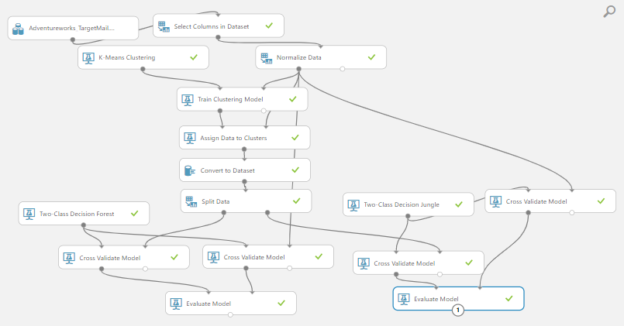

In this article, we discuss clustering in Azure Machine Learning, another machine learning technique alongside regression analysis and classification analysis. So far in this series, we have covered basic data cleaning techniques, feature selection techniques, principal component analysis, model comparison and cross-validation. This article also introduces a few techniques that we have not discussed before. Previously, we showed how to perform clustering in SQL Server in the SQL Server Data Mining series.

Read more »

In this article, we will show how to use a Git repository during pipeline development in Azure Data Factory to save changes incrementally before publishing them to the Data Factory production environment.

Read more »

This article will help you process file sets with U-SQL in Azure Data Lake Analytics.

Read more »

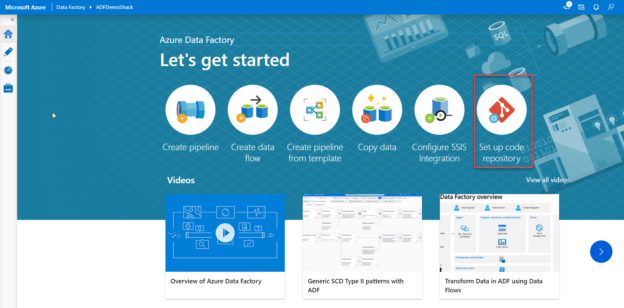

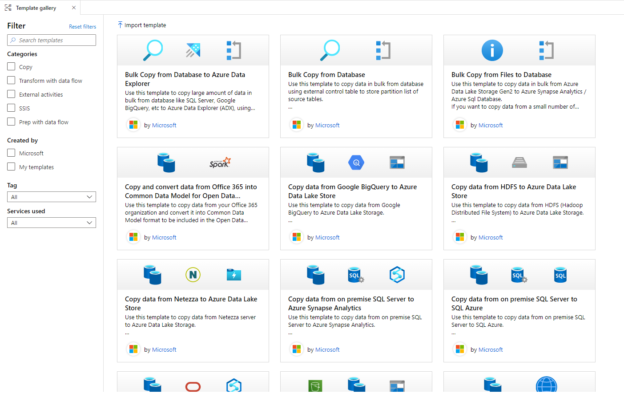

In the previous articles of this Azure Data Factory series, we showed different scenarios in which Azure Data Factory can copy data from various data stores and optionally transform it before loading it into the destination data store. In all of the examples in those articles, we created a new pipeline from scratch to achieve the required functionality.

Read more »

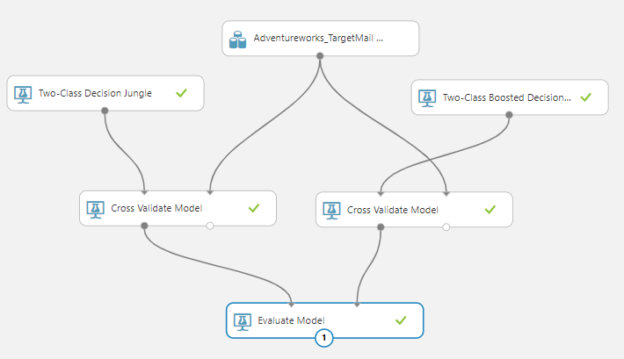

Having discussed several algorithms and techniques, and compared models, in Azure Machine Learning, this article turns to a validation technique: cross-validation. So far in this series, we have covered basic data cleaning techniques, feature selection techniques and principal component analysis, as well as regression analysis, classification analysis and model comparison. Now we focus on using cross-validation in Azure Machine Learning to evaluate models.

Read more »

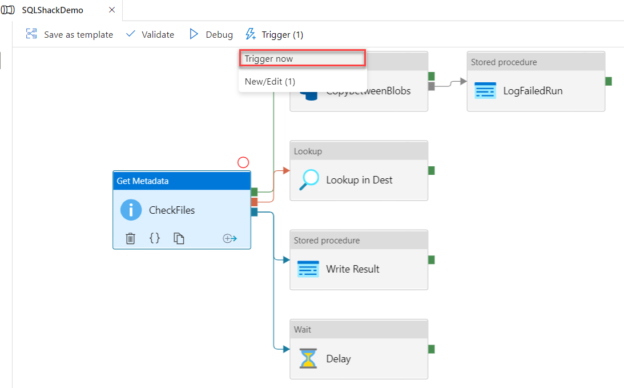

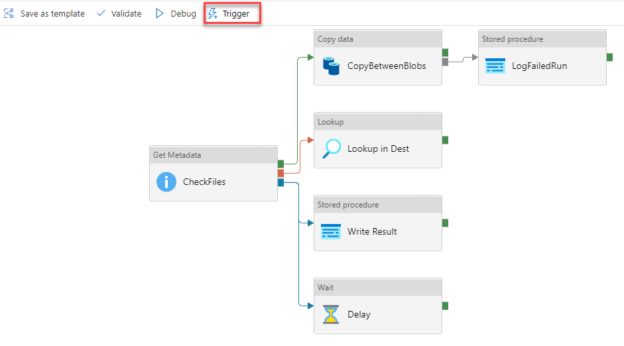

In the previous articles of this series, we showed how to create Azure Data Factory pipelines that contain multiple activities, executed sequentially, to perform different actions. This means that each activity runs only after the previous activity has completed successfully.

Read more »

This article will help you create database objects in Azure Data Lake Analytics using U-SQL.

Read more »

In the previous article, How to schedule Azure Data Factory pipeline executions using Triggers, we discussed the three main types of Azure Data Factory triggers and how to configure them and use them to schedule a pipeline.

Read more »

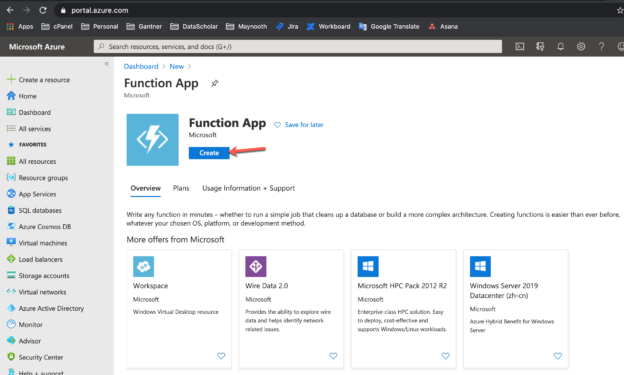

In this article, I am going to explain how to create a serverless application using Azure Functions and use Azure SQL Database to log messages generated by the function. In a world of cloud-based applications, it is important to know how to design and build serverless applications. An important aspect of designing any application is generating log messages at every key step or operation. These messages help you understand the workflow when issues arise and need to be debugged later.

Read more »

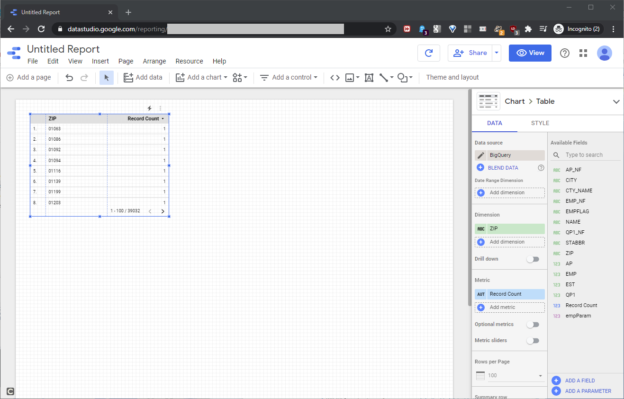

In the two-part SQL Shack article, Build a Google BigQuery Resource, I showed how to build a Google BigQuery resource and then link it to an Azure SQL Server resource. This article expands on the first part, showing how to build a BigQuery report with Google Data Studio.

Read more »

© 2025 Quest Software Inc. ALL RIGHTS RESERVED.