In this article, we’ll discuss security implications of using SQL Bulk Insert and how to mitigate those risks.

Like all ETL tools, any flat file insert can invite security risks relative to the design. In the third part of this series (see TOC) related to SQL Bulk Insert, we’ll look at how we can restrict ETL access to accomplish what we need, but without giving developers too many permissions. I will point out that this may not apply to every company, where security may not be a high priority due to the nature of the company or the nature of our data requirements for ETL flows. As we’ve seen in our series, SQL bulk insert does read and possibly write data (error handling), which can be used for nefarious purposes, if an attacker is given too much permissions.

This applies to other ETL tools as well. SSIS packages are XML data and these can be manipulated as well, along with providing very useful information depending on how an attacker might discover and view these files. ETL applications that use DLLs and other development files often involve configuration files that can also be manipulated, so security risks exist with any tool and creating a design that recognizes these risks is the best way to protect ourselves.

Developers like to build for convenience and this makes sense in some data contexts, but convenience is often easier to attack and uncover, so it may be less appropriate for some data contexts. We’ll look at some examples of adding layers of security around inserting data from files that may add work for our developers, but will be more appropriate in situations where security is more important than convenience.

Considering the Security Context

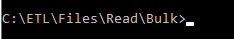

We’ve looked at the SQL bulk insert ability to read from a file and write to our table. We’ll now look at this on the file level. In our examples, we see the below file path:

Our file path where our flat files are stored.

While this file path serves as a useful location for us to load flat files, we should consider that the user account that is executing the underlying insert statement must be able to read (and possibly write to) that file location. The writing part of the equation comes in when it involves logging, even if the permissions of the written logging data are tied down strictly in the output, in that the user doesn’t control what gets written, but that errors are written. In the least, we want to ensure that a separate folder with strict permissions exists for any flat file import to restrict the account access – notice that we’re not reading off the root drive, as we’ve seen that we can insert an entire file of data – think about using SQL bulk insert to view files through SQL Server by inserting the file’s data and reviewing it.

This also means that when we’re done inserting our data from our files, we may want to migrate the files elsewhere, if we want to limit future reads. The risk of future reads is that attackers may infiltrate our system and, even with limited permission to a drive, be able to view past data we’ve inserted and used. Relative to our design, attackers may face limits on what they know about our systems if our load design is demarcated from other parts of the full data flow, such as a load server, transformation server, and presentation server. Just like with least permissions, when we consider how we store our files, we want least readability in that once a file has been read and saved to our load database or server, we want the file moved. And once we’ve completed what we need with our loaded data, we want it cleaned and migrated to our next step.

Using SQL Bulk Insert with Stored Procedures

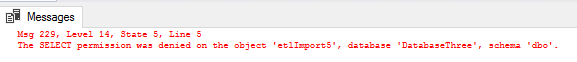

In the below code, in one query window we create an account and give it execute permission to a stored procedure that imports one of the files we have already created in the second part of this series. We’ll notice that the stored procedure does not allow input at all – it inserts data and returns a select of the data from the table, but the user cannot pass any information into the stored procedure. In a new query window with this new account logged in, we try the exact same flat file insert statement of our file without the stored procedure and the transaction fails. Even with the user assigned execute permissions to the stored procedure, the execution will still fail (see the next batch of code under “error thrown as well” in the comments).

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 |

---- Query window with database level permissions CREATE TABLE etlImport5( VarcharSpefzTen VARCHAR(10), IntSpefz INT, BitSpefz BIT, VarcharSpefzMax VARCHAR(MAX) ) CREATE PROCEDURE stpRestrictInsert WITH ENCRYPTION AS BEGIN BULK INSERT etlImport5 FROM 'C:\ETL\Files\Read\Bulk\daily_20180101.txt' WITH ( FIELDTERMINATOR = ',', ROWTERMINATOR = '\n', FIRSTROW = 2, MAXERRORS = 100 ) SELECT * FROM etlImport5 END USE [master] CREATE LOGIN etlRestrictedUser WITH PASSWORD = 'ShortPassword!' USE [DatabaseThree] CREATE USER etlRestrictedUser FROM LOGIN etlRestrictedUser GRANT EXECUTE ON stpRestrictInsert TO etlRestrictedUser --- New window with etlRestrictedUser logged in USE DatabaseThree GO --- Errow thrown SELECT * FROM etlImport5 --- Error thrown BULK INSERT etlImport5 FROM 'C:\ETL\Files\Read\Bulk\daily_20180101.txt' WITH ( FIELDTERMINATOR = ',', ROWTERMINATOR = '\n', FIRSTROW = 2, MAXERRORS = 100 ) --- Error thrown as well EXEC stpRestrictInsert |

We can neither select nor bulk load data, as we have permission to execute one stored procedure.

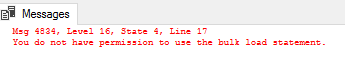

We still get a bulk load error as more privilege must be granted in order to call this procedure.

The SQL bulk insert statement requires administer bulk operations and it also involves inserting data into a table. However, we don’t want to grant a data insert on the table, as it would mean that our user could insert data into the table without calling the procedure that we’ve restricted the user to. Because permission to the procedure will allow an insert, we will only grant the administer bulk operations permissions, which we’re required to do on the master database. We then call the stored procedure in the below code in our other window and we see it inserts and returns the select of the newly inserted data.

|

1 2 3 4 5 6 |

---- Returning to query window with database level permissions USE [master] GRANT ADMINISTER BULK OPERATIONS TO etlRestrictedUser --- Returning to window with etlRestrictedUser logged in post bulk operation grant EXEC stpRestrictInsert |

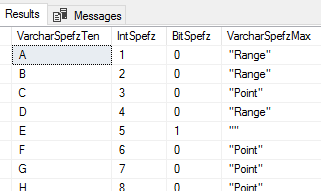

Our file inserts and shows the result of the select statement.

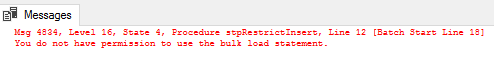

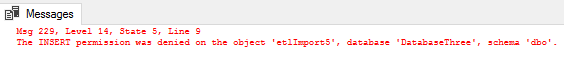

If we try to import the flat file data with our new user even with being granted administer bulk operations, we see that we get a failure about being denied insert access to the etlImport5 table – this also shows why we don’t want to allow insert permissions, as we only want the user in this case to have access to the procedure:

|

1 2 3 4 5 6 7 8 9 |

--- Even with administer bulk operation permissions, this fails BULK INSERT etlImport5 FROM 'C:\ETL\Files\Read\Bulk\daily_20180101.txt' WITH ( FIELDTERMINATOR = ',', ROWTERMINATOR = '\n', FIRSTROW = 2, MAXERRORS = 100 ) |

The statement is denied because this statement requires explicit insert permission that hasn’t been granted, even if the account has administer bulk operations.

We can add parameters to our procedure that allow a dynamic file name or even allow us to specify a higher maximum amount of errors, but these invite risks even with strict parameterization. We must be extra cautious about validating the values that get passed into a stored procedure if we allow outside parameter. For an example, if we do allow a file path to be passed in, we should consider allowing only part of a file path such as the difference between a full file path and a file name only. Even then, we’re still allowing input so the risks of attack from internal and external sources exist.

Permissions of Administer Bulk Operations

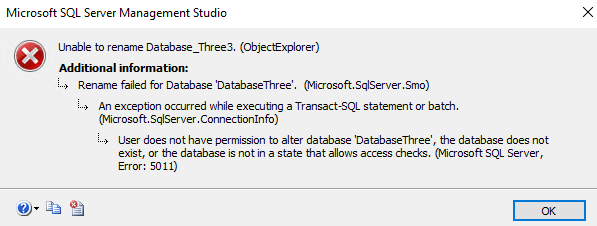

The permission of administer bulk operations is a server level permission, according to Microsoft. However, this does not give the user access to do anything on the server level. For instance, with our etlRestrictedUse, we can try manipulating databases and see failures – like renaming DatabaseThree to Database_Three3.

Our server level permission does not allow us to do anything we want.

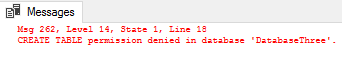

We also saw in the above code that with our etlRestrictedUser we couldn’t import flat file data into our etlImport5 table because insert permissions weren’t allowed. In the same manner, we can’t create objects either – as that permission wasn’t granted. Notice what happens when we try to create a table etlImport6 in DatabaseThree:

|

1 2 3 4 5 6 |

CREATE TABLE etlImport6( VarcharSpefzTen VARCHAR(10), IntSpefz INT, BitSpefz BIT, VarcharSpefzMax VARCHAR(MAX) ) |

Even if I switch to master, I still get the same error.

So even though the user has permissions to execute the SQL bulk insert statement, without authorization to any tables and without authorization to create tables, for these two security contexts, the permission may be appropriate. This doesn’t only prevent external threats that could compromise an account, it also prevents internal threats. For very strict security environments, we should still consider only allowing high-level employees the right to have permission to execute these flat file tools. From intellectual property theft to private information, some development contexts may require developers to have very little access, as they could reveal information that should not be revealed externally.

Summary

In this tip, we’ve looked at the security context of using SQL bulk insert by creating a SQL authentication account, assigning it basic permissions for one insert, and testing the ability of the created user to complete the insert and view the result. In other words, we’ve looked at giving developers the ability to do some tasks, but significantly restricting what they’re able to do in security contexts where this may be appropriate. This doesn’t only protect us against outside attackers – it also protects us against internal attackers or internal threats who state things that should remain confidential. While it comes with costs that limit our ability to do things quickly, in some contexts, we may favor security over performance and this “cost” is actually a benefit for those contexts.

Table of contents

See more

Check out ApexSQL Plan, to view SQL execution plans, including comparing plans, stored procedure performance profiling, missing index details, lazy profiling, wait times, plan execution history and more

- Data Masking or Altering Behavioral Information - June 26, 2020

- Security Testing with extreme data volume ranges - June 19, 2020

- SQL Server performance tuning – RESOURCE_SEMAPHORE waits - June 16, 2020