Introduction

This article is dedicated to creating custom differential and Transaction log backups so that you will see sufficient data in the file size. In SQL Server there are mainly three backup types, Full Backup, Differential Backup, and Transactional Log backups. A Full backup will get the entire database into a backup.

If your database is configured for either a full or bulk-logged recovery model, transaction log backup can be taken. When transaction log backup is taken, the inactive portion of the log file will be empty and the inactive portion will be taken into the backup file. Since log backup will empty the log file, it will be an incremental backup file that means you need all the log backups in case of a database restore.

Differential backup will create a backup with all the modified data pages after the last full backup. This means that a differential backup is related to the last full backup while transaction log backup is related to the last transaction log backup itself.

Typically, you schedule differential and transaction log backups in a database server instance. However, if there are no or fewer transactions between these schedules, you will end up with tiny, small files. Especially, in log backup, you need to use all the files even though they are small files. Typically, Transaction log files are scheduled for frequent time schedules such as fifteen minutes or 30 minutes, you have a good chance of creating many files small in size.

Using DBCC SQLPERF

DBCC SQLPERF (LOGSPACE) WITH NO_INFOMSGS will provide the Log Size and the Log Space used for each log file in the SQL Server instance.

Since this is a DBCC command, you cannot directly use it to include as the checking before the execution of a transaction log backup.

Let us say, we want to backup the Transaction log of the AdventureWorks2017 database only if the log file space used is more than 15%. To achieve the above objective, DBCC SQLPERF can be used by using the following query.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 |

CREATE TABLE #log_space_usage ( database_name NVARCHAR(256), log_size FLOAT, percent_used FLOAT, status iNT) INSERT INTO #log_space_usage EXEC('DBCC SQLPERF (LOGSPACE) WITH NO_INFOMSGS') SELECT database_name, percent_used, CONVERT(NUMERIC(16,2), log_size) AS log_size, CONVERT(NUMERIC(16,2), (log_size * (percent_used/100))) AS space_used FROM #log_space_usage WHERE database_name = 'AdventureWorks2017' DROP TABLE #log_space_usage |

The following is the output for the above query.

Let us incorporate the above query into the transaction log backup so that we can perform the log backup for a specific size.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 |

DECLARE @FileName VARCHAR(50) DECLARE @percent_used NUMERIC(9, 2) SET @FileName = 'D:\Data\' + 'AdventureWorks2017' + REPLACE(CONVERT(VARCHAR, GETDATE(), 101), '/', '') + REPLACE (CONVERT (VARCHAR, GETDATE (), 108), ':', '') CREATE TABLE #log_space_usage ( database_name NVARCHAR(256) ,log_size FLOAT ,percent_used FLOAT ,STATUS INT ) INSERT INTO #log_space_usage EXEC ('DBCC SQLPERF (LOGSPACE) WITH NO_INFOMSGS') SELECT @percent_used = percent_used FROM #log_space_usage WHERE database_name = 'AdventureWorks2017' IF @percent_used > 15 BACKUP LOG [AdventureWorks2017] TO DISK = @FileName DROP TABLE #log_space_usage |

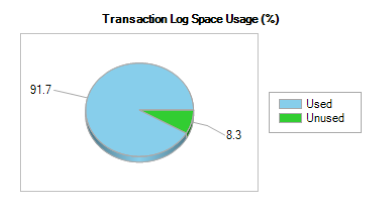

Though we can achieve what we are looking for, there is an issue with the above approach. In this method, we check only the size of usage of the log file. As we know, even though the log file is used, a large portion of that can be due to active transactions that will not be taken to the backup and will not be deleted from the log file after the log backup. Further, if you have replication, mirroring, Change Data Capture (CDC) enabled until transactions are delivered, those transactions will not be taken to the log backup. This means that log is utilized as shown in the below figure, but that will not make into transaction log backup since those are still active transactions.

In this type of scenario, the log file is more than 15% but only a few data to backup as shown in the below error.

Processed 3 pages for database ‘AdventureWorks2017’, file ‘AdventureWorks2017_log’ on file 1.

The log was not truncated because records at the beginning of the log are pending replication or Change Data Capture. Ensure the Log Reader Agent or capture job is running or use sp_repldone to mark transactions as distributed or captured.

BACKUP LOG successfully processed 3 pages in 0.557 seconds (0.035 MB/sec).

This can be verified from log_reuse_wait_desc column in the sys.databases view in the master database.

With this type of scenario, you will still see a large number of log backup files small in size as shown below.

Using the dm_db_log_stats Dynamic Management Function

With SQL Server 2016 SP2 and later, a new Dynamic Management Function named dm_db_log_stats is introduced that can be used to achieve the above objective. Let us query the said DMF:

|

1 2 3 4 5 |

SELECT total_log_size_mb, log_since_last_log_backup_mb , active_log_size_mb, log_since_last_checkpoint_mb FROM sys.dm_db_log_stats(db_id('AdventureWorks2017')) |

This is the output for the above query.

By combing these parameters and the log backups, you can define smart log backup as shown in the below script.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 |

--- Smart Log Backups DECLARE @log_since_last_log_backup_mb NUMERIC(9, 2) DECLARE @ThreasholdSize INT = 25 DECLARE @FileName VARCHAR(50) SELECT @log_since_last_log_backup_mb = log_since_last_log_backup_mb FROM sys.dm_db_log_stats(db_id('AdventureWorks2017')) IF @log_since_last_log_backup_mb > @ThreasholdSize BEGIN SET @FileName = 'D:\Data\' + 'AdventureWorks2017' + REPLACE(CONVERT(VARCHAR, GETDATE(), 101), '/', '') + replace(convert(VARCHAR, getdate(), 108), ':', '') BACKUP LOG [AdventureWorks2017] TO DISK = @fileName END ELSE PRINT 'No BACKUP' ----------------------- |

The above script will check for the incremental size of the transaction log after the last log backup. Even though this still has the earlier problem, there will be less chance of occurring.

Please note that this Dynamic Management Function (DMF) is available only for Azure SQL Database apart from the later versions of SQL Server 2016 SP2.

Differential Backups

Typically, we used differential backups in order to improve the database restoring process. If we are employing full backups and transaction log backups, we will need a large number of files to recover the database since there are a large number of transactional log backups. This means you need to restore all the log backups from the last full backups. If you are taking log backup for every 15 minutes, we are looking at 96 files per day. If you are taking full backup per day, you are looking at log files with multiple factors of 96 depending on the number of days.

By having differential database backups, we can ignore the usage of a large number of log files in the restoring process.

For example, when you need to restore a database, you need only the transactions log backups after the last differential backups. This means that you need the last full backup and the last differential backup and all the log backups after the last differential backup.

Differential backups will include all the modified data pages after the last full backups. A similar scenario like transaction log backup can be cited with the Differential backup that is differential backup will not have enough data page if there are no or less transaction between the full and the differential backups. However, this is an unlikely scenario for an operational database.

Let us look at how we can include the checking of how many data pages will be included for the differential backups. To achieve this objective, we will be using a DMV called dm_db_file_space_usage. This DMV will be available for all the SQL Server versions, Azure SQL Database, Azure SQL Managed Instance, and Azure Synapse Analytics as well.

This is the query that should be executed under the relevant database.

|

1 2 3 4 |

SELECT total_page_count, modified_extent_page_count, modified_extent_page_count * 100.0/total_page_count Percentage FROM sys.dm_db_file_space_usage |

Now let us include the above details in the Differential backup process as below.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 |

DECLARE @FileName VARCHAR(100) DECLARE @Modified_percentage NUMERIC(3, 2) SELECT @Modified_percentage = modified_extent_page_count * 100.0 / total_page_count FROM sys.dm_db_file_space_usage IF @Modified_percentage > 5.0 BEGIN SET @FileName = 'D:\Data\' + 'AdventureWorks2017_DIFF_' + REPLACE(CONVERT(VARCHAR, GETDATE(), 101), '/', '') + replace(convert(VARCHAR, getdate(), 108), ':', '') BACKUP DATABASE [AdventureWorks2017] TO DISK = @FileName WITH DIFFERENTIAL END |

The above script will ensure that differential backup will be executed only if the modified volume of the database is more than five percent.

Log Shipping

As you know, log shipping is totally dependent on the log backup. Log shipping is made of three SQL Server Agent jobs, backup, copy and restore. If you are moving ahead with the above approach of Transaction log backups, you need to disable the backup job of the log shipping and need to make sure that the log backups are copied to the folder where the copy job is picking up.

However, this approach might increase the data latency between the primary and secondary servers of log shipping during off-peak times. Further, you need to include all the maintenance tasks in log shipping such as delete older files and notifications etc.

Conclusion

Among the database backups in SQL Server, this article has introduced a mechanism to perform transaction log and differential backups by considering the size of the content rather than just running them on a given frequency. This approach will reduce the number of backup files that will lead to much efficient management of backup files.

References

- https://docs.microsoft.com/en-us/sql/relational-databases/system-dynamic-management-views/sys-dm-db-log-stats-transact-sql

- https://docs.microsoft.com/en-us/sql/relational-databases/system-dynamic-management-views/sys-dm-db-file-space-usage-transact-sql

See more

Interested in a SQL log reader to view and analyze the SQL Server transaction log? Consider ApexSQL Log, a powerful tool that renders transaction log information into a searchable, sortable information that can be used for forensic auditing, replication, continuous auditing, disaster recovery and more

- Testing Type 2 Slowly Changing Dimensions in a Data Warehouse - May 30, 2022

- Incremental Data Extraction for ETL using Database Snapshots - January 10, 2022

- Use Replication to improve the ETL process in SQL Server - November 4, 2021