In this article, we will learn how to migrate data from Azure SQL Database to Azure Database for PostgreSQL using the Azure Data Factory.

Introduction

Azure cloud provides a variety of database options to host relational and other types of data using different types of data repositories. Traditionally, SQL Server has been the database technology of choice for a solution that involves major usage of Microsoft technology stack and platform. When such solutions are migrated to the Azure cloud, the obvious choice becomes SQL Azure. PostgreSQL has been one of the most popular open-source relational databases for a long time. Those who have been using PostgreSQL on-premises would naturally want to move to the Azure database on PostgreSQL when their solution is migrated to the Azure cloud.

One interesting point about PostgreSQL is that several databases and data warehouses are either based on or share compatibility with PostgreSQL. A comprehensive list of such databases or data warehouses can be found here. Keeping this factor in view, many solutions move from Azure SQL and other commercial databases to PostgreSQL for forward compatibility with other databases or data warehouses in future migration or cross-technology stack integration scenarios. Considering the case of migrating from Azure SQL to Azure Database for PostgreSQL, the question comes to using a technology stack to perform this migration after the due diligence of compatibility and data mapping is done. There are multiple ways and tools to perform this migration. One of the efficient tools to perform data migration for most of the general data migration scenarios is using the Azure Data Factory. This article will help us migrate data from Azure SQL Database to Azure Database for PostgreSQL using the Data Factory.

Pre-Requisites

As we know that our source is going to be Azure SQL Database and the destination would be Azure Database for PostgreSQL, we need to have one instance of each database server created on the Azure account under the same resource group and location. We also need sample data in the source database. An easy way to host sample data is to create the Azure SQL Database with the sample data option during instance creation which would create the new database instance with ready-to-use sample data. Once these instances are in place, the first part of the pre-requisite is complete.

Next, as we are going to perform data migration using an ETL tool which is Azure Data Factory, we need an instance of the same created in the same resource group and region as our database instances. Once the instance is created, we can navigate to the dashboard of the instance and access the Azure Data Factory portal from where we can use the copy tool as well as create data flow pipelines to perform data transfer as well as data transformation tasks. Typically before a data migration is performed, data model assessment, data mapping and transformation rules, delta detection and other such tasks involved in determining the optimal approach for migration are sketched out, and then the implementation is performed using an ETL tool like Azure Data Factory. It is assumed that these tasks are already carried out to keep our focus on the implementation using Azure Data Factory.

Migrating data from SQL Server to Azure Database for PostgreSQL

It is assumed that one is already on the Azure Data Factory portal as shown below. We would be using the copy tool to demonstrate how to perform the data migration for a simple table hosted in Azure SQL Database.

Click on the Copy data tool and it will open the wizard as shown below. For straight-forward data movement where no significant transformation is required, one may use the copy data method. For other complex scenarios, one can use the data flows and build a data pipeline that migrates data objects in batches. Provide the task name and execution frequency. If it’s a one-time migration one may execute the task only once, and if it’s a task where data would be migrated till a cut-over happens, then the task would be scheduled on a recurring frequency.

In the next step, we need to register the source database instance. Select Azure SQL database as shown below from the list of supported data sources.

In the next step, we need to provide connection credentials to enable connectivity from Azure Data Factory to Azure SQL Database. Ensure either Azure services are allowed to connect to the database or firewall setting in the database allow the IP of Azure Data Factory or a private connection endpoint of Azure Data Factory is added to Azure SQL Database to allow incoming connections in the database. Click on the Test Connection button to ensure that the connectivity is successful.

In the next step, we need to select the database objects that one may want to migrate. In our case for demonstration purposes, we are selecting a simple employee table. We can preview the data as well as the schema of this table as shown below. In real-life scenarios, simple tables would be migrated as-is, and tables with complex formulas or data-type compatibility issues may be dealt with using the query or script options.

In the next step, we have the option to apply a filter to the data. Here we just have three records and four fields, so we do not need to filter the data.

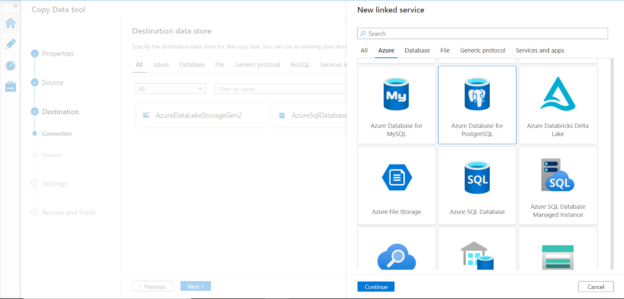

Now it’s time to register the destination database i.e. Azure Database for PostgreSQL. Select the same from the list of supported data repositories as shown below.

In the next step, we need to provide connection credentials to allow Azure Data Factory to connect to the PostgreSQL instance. There are some settings that are unique here which we need to be aware of. The username should be in the format of <username>@<database-endpoint-name> as shown below. Also seen below are the different types of supported encryptions.

Click on the Test connection button and you will find that the connectivity failed. If you read the error description, you will find that SSL is required for this connectivity and we selected the default encryption method which is No encryption.

If you verify the Azure Database for PostgreSQL instance settings, you will find that by default it is configured to enforce SSL connections. So here we need to configure the encryption method to require SSL. Once you change the settings, Postgresql will be registered as the destination.

In the next step, we need to provide table mapping. Even if we have a table with the same name in the Postgresql instance, it won’t show up automatically in the mapping as the schema would be public or with a different name in Postgresql while it may be under a different schema in Azure SQL Database. If one expects to have the database objects created when the data pipeline is executed, typically we create database objects first using a different task and then execute the data load tasks.

A script like the one shown below can create an equivalent table in Azure Database for Postgresql. The data type compatibility checks will be done already during the analysis phase of the migration based on which the table definition is assessed and created using a script. Select the table in the dropdown and move to the next step.

In the next step, we need to perform column mapping optionally. It’s always a good practice to verify that the right source and destination columns are mapped to each other. Here as the column names are identical in source and destination tables, they are already mapped correctly.

In this step, we can enable data consistency checks, logging, and other performance-related settings to keep a close check on the data integrity of the migrated data as well as the cost that is incurred to perform data migration in the desired time frame.

Once done, review the details in the next step and execute the data task and pipeline. Once the execution completes, data should get loaded from the source database to the destination database and migration for the database object in question can be considered as complete.

In this way, we can use Azure Data Factory for implementing a data migration approach to migrate data from Azure SQL Database to Azure Database for PostgreSQL.

Conclusion

In this article, we learned about the migration approach in general and using Azure Data Factory to migrate data from Azure SQL Database to Azure Database for PostgreSQL. We also learned some of the key settings that are particular to the Azure Database for PostgreSQL which needs to be kept in view while implementing a migration job that loads data into Postgresql.

- Oracle Substring function overview with examples - June 19, 2024

- Introduction to the SQL Standard Deviation function - April 21, 2023

- A quick overview of MySQL foreign key with examples - February 7, 2023