Introduction

One of the new features announced with SQL Server 2017 is support for the Python language. This is big! In SQL Server 2016, Microsoft announced support for the R language – an open source language ideally suited for statistical analysis and machine learning (ML). Recognizing that many data scientists use Python with ML libraries, the easy-to-learn-hard-to-forget language has now been added to the SQL Server ML suite.

There’s a big difference between R and Python though: R is a domain-specific language while Python is general purpose. That means that the full power of Python is available within SQL Server. This article leaves ML aside for the moment and explores a few of the other possibilities.

Getting started

A full discussion of installing Python on SQL Server is out of the scope of this article but you can follow the link in the References if you need to do that. Note that at the moment, ML services for Python are only available for installations of SQL Server on Windows. Neither Linux nor Azure SQL Database is supported as of this writing.

There is a single API for invoking Python programs from SQL Server:

|

1 |

sp_execute_external_script |

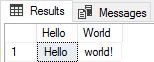

This is a system stored procedure that first appeared with R services in SQL Server 2016. Assuming you have everything set up already, this query will run a Python “Hello world!” program:

|

1 2 3 4 |

EXEC sp_execute_external_script @language =N'Python', @script=N'OutputDataSet = InputDataSet', @input_data_1 = N'SELECT ''Hello'', ''world!''' WITH RESULT SETS ((Hello VARCHAR(20), World VARCHAR(20))); |

This produces:

(Note that you need to use WITH RESULT SETS to get column names added to the output.)

Use case 1: Regular expressions

If you are an experienced user of SQL Server, you may have been frustrated on occasion by the lack of support for full regular expressions. Sure, a WHERE LIKE clause can do a lot, but there’s a lot that it can’t do. For example, consider email addresses. RFC 5352 describes them in detail and takes 57 pages to do it! Constructing a full LIKE clause to identify email addresses is cumbersome at best. However, this Python regular expression does it neatly:

|

1 |

(^[a-zA-Z0-9_.+-]+@[a-zA-Z0-9-]+\.[a-zA-Z0-9-.]+$) |

(This is difficult to do with a LIKE match because it lacks the “+” symbol meaning “one or more”.)

Testing that in a little Python program, shows that it works:

|

1 2 3 4 5 6 |

email = re.compile(r"(^[a-zA-Z0-9_.+-]+@[a-zA-Z0-9-]+\.[a-zA-Z0-9-.]+$)") m = email.match("me@you.com") if m: print('email found: ', m.group()) else: print('No email found') |

(Go ahead and try this on your own to prove it to yourself. If you haven’t installed Python on your workstation yet, this is a good time to do that!)

Let’s apply this in SQL Server 2017:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 |

DECLARE @Python NVARCHAR(4000) = ' import re re_email = re.compile(r"(^[a-zA-Z0-9_.+-]+@[a-zA-Z0-9-]+\.[a-zA-Z0-9-.]+$)") for index, row in InputDataSet.iterrows(): name, email = row m = re_email.match(email) if m: print(name, "email found: ", m.group()) else: print(name, "No email found") '; DROP TABLE IF EXISTS #Input; CREATE TABLE #Input (name varchar(50), email varchar(254)); INSERT INTO #Input (name, email) VALUES ('Arthur Dent', 'Arthur@earth.com'), ('Zaphod Beeblebrox', 'Zaphod@HeartOfGold.com'), ('Trillian', 'trillian@a_party') ; EXEC sp_execute_external_script @language =N'Python', @script = @Python, @input_data_1 = N'SELECT name, email from #Input' |

This produces:

|

1 2 3 4 5 |

(3 rows affected) STDOUT message(s) from external script: Arthur Dent email found: Arthur@earth.com Zaphod Beeblebrox email found: Zaphod@HeartOfGold.com Trillian No email found |

This would probably be more useful if it returned an output result set with an added “IsValidEmail” column. Before we get there, though, let’s look at a different problem.

Handling CSV formatted data

One frequently-asked question found on many SQL Server forums concerns producing or consuming CSV files in SQL. When asked, my usual go-to answer is “Integration Services.” With Python in SQL Server 2017, there’s a new option. Python has long had CSV handling in its standard library. Let’s use it!

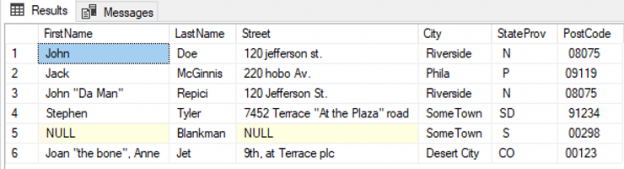

For this exercise, I’ll take the lazy approach and grab a ready-made CSV file using the link in the references section. It is a name and addresses file that looks like this:

|

1 2 3 4 5 6 |

John,Doe,120 jefferson st.,Riverside, NJ, 08075 Jack,McGinnis,220 hobo Av.,Phila, PA,09119 "John ""Da Man""",Repici,120 Jefferson St.,Riverside, NJ,08075 Stephen,Tyler,"7452 Terrace ""At the Plaza"" road",SomeTown,SD, 91234 ,Blankman,,SomeTown, SD, 00298 "Joan ""the bone"", Anne",Jet,"9th, at Terrace plc",Desert City,CO,00123 |

These six lines show some of the complexities of CSV data, including missing fields, quoted fields, embedded quotation marks and more. Handling this kind of data in SQL is awkward at best. Can we do it with Python? You bet! This little program will read the above data, parse it and print it:

|

1 2 3 4 5 |

import csv with open('addresses.csv') as csvfile: rdr = csv.reader(csvfile) for row in rdr: print(', '.join(row)) |

We could just take this script, wrap it up in a call to sp_execute_external_script, and run it in SQL Server 2017. Let’s do a bit more, though. Let’s pass the input to the script as a query and return the results as a SQL Server result set.

First, create a table to hold the sample data and populate it:

|

1 2 3 4 5 6 7 8 9 10 11 12 |

IF OBJECT_ID(N'tempdb..#csv', N'U') IS NOT NULL DROP TABLE #csv; CREATE TABLE #csv(line VARCHAR(256)); INSERT INTO #csv(line) VALUES ('John,Doe,120 jefferson st.,Riverside, NJ, 08075'), ('Jack,McGinnis,220 hobo Av.,Phila, PA,09119'), ('"John ""Da Man""",Repici,120 Jefferson St.,Riverside, NJ,08075'), ('Stephen,Tyler,"7452 Terrace ""At the Plaza"" road",SomeTown,SD, 91234'), (',Blankman,,SomeTown, SD, 00298'), ('"Joan ""the bone"", Anne",Jet,"9th, at Terrace plc",Desert City,CO,00123') |

Next, compose a short Python script to use the Python csv module (part of the standard library):

|

1 2 3 4 5 6 |

DECLARE @Python NVARCHAR(MAX) = N' import csv csvinput = (row["line"] for index, row in InputDataSet.iterrows()) rdr = csv.reader(csvinput) OutputDataSet = pandas.DataFrame().from_records(rdr) '; |

InputDataSet is a DataFrame object from the pandas module:

The pandas module is included with SQL Server when you install Python support. This little script iterates over the rows in the DataFrame, then constructs OutputDataSet, also a pandas DataFrame object, using the reader method from the csv module, which does the actual parsing. Note that InputDataSet and OutpuDataSet are the default names used by the sp_execute_external_script stored procedure. These are overrideable. See the references section for more information

Next, create a table to hold the results:

|

1 2 3 4 5 6 7 8 9 |

IF OBJECT_ID(N'tempdb..#csvparsed', N'U') IS NOT NULL DROP TABLE #csvparsed; CREATE TABLE #csvparsed ( FirstName VARCHAR(50), LastName VARCHAR(50), Street VARCHAR(50), City Varchar(50), StateProv CHAR(2), PostCode VARCHAR(6)); |

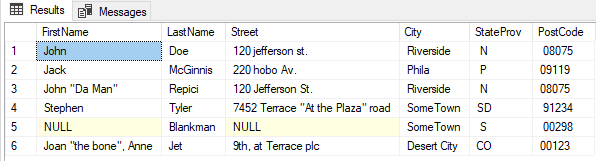

Finally, run the script to populate the new table:

|

1 2 3 4 5 6 7 |

INSERT INTO #csvparsed(FirstName, LastName, Street, City, StateProv, PostCode) EXEC sp_execute_external_script @language=N'Python', @script = @Python, @input_data_1 = N'SELECT line from #csv'; SELECT * FROM #csvparsed; |

This produces the result set:

Each row of the CSV file has been correctly parsed into rows and columns!

Python also has great support for other types of data, such as JSON, XML, and HTML. While it is possible to shred (parse) and emit these with T-SQL, you may find it easier to do this with the Python standard libraries, depending on the application. It should go without saying that, for performance-critical applications, you need to be sure to perform extensive testing to find the best overall solution.

Other applications

The Python standard library contains many other useful modules that I won’t go into here. You have the basic tools you need. The next time you have a problem that is tough to solve in T-SQL, consider using Python as demonstrated here instead.

Some modules will require additional permissions. For example, any module that interacts with the file system or remote servers will not work under the Launchpad service, as configured out of the box. You would need to grant the appropriate access to the service account. Also, there are often other ways to perform such operations – Integration Services, for example.

Summary

The addition of Python support to SQL Server opens up a world of new capabilities. Machine learning is certainly the primary reason for adding Python (and R) to SQL Server, but as this article has shown, there are other exciting use cases as well.

Oh, about adding an IsValidEmail column as mentioned above, why not give that a go on your own? It’s not hard and will give you a chance to get your hands dirty with Python in SQL Server.

- Snapshot Isolation in SQL Server - August 5, 2019

- Shrinking your database using DBCC SHRINKFILE - August 16, 2018

- Partial stored procedures in SQL Server - June 8, 2018