In this article, we will see how we can access data from an Azure SQL database from Azure Data Lake Analytics.

Introduction

In the previous part of the Azure Data Lake Analytics article series, we learned how to process multiple file sets stored in the Azure Data Lake Storage account using U-SQL. Often data is stored in structured as well as unstructured formats, and one needs to access data from structured stores as well apart from data stored in repositories like Azure Data Storage Account. We will go over the process to access data from an Azure SQL database using Azure Data Lake Analytics.

Initial Setup

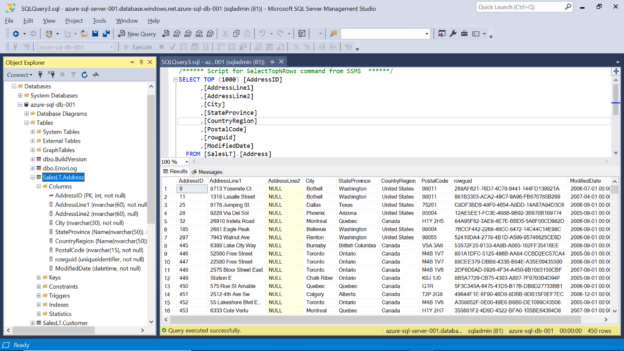

We need to have a few pre-requisites in place before we can start our exercise. In the previous part of this article series, we set up an Azure Data Lake Analytics account and created a database on it. We would also need an Azure database with some sample data in it. While creating an Azure database it offers the option to create it with sample data. In this exercise, we are going to use such a database with the sample data as shown below. In the below screen, it shows the azure-sql-server-001 is the name of the database, azure-sql-server-001 is the name of the Azure SQL Server instance, and SalesLT.Address is the name of the table that we intend to access from Azure Data Lake Analytics. Once this setup is in place, we can proceed with the next steps.

Creating Database Credential

It is assumed that an Azure Data Lake Analytics account is in place. Open the dashboard page on the Azure portal as shown below. “mydatalakeanalytics001” is the name of the account. From the toolbar, open Azure Cloudshell as shown below. We are going to use Azure PowerShell to fire command to create credentials for the Azure SQL Database. This is the first part of the process.

We intend to use PowerShell to fire commands to create a database credential in an Azure Data Lake Analytics U-SQL Database. Click on the PowerShell option. This will open a screen as shown below:

Click on the maximize button, so that we can have more screen space to execute the command clearly. Once the screen is maximized it would look as shown below:

Execute the command as shown below. The name of the command is New-AzureRnDataLakeAnalyticsCatalogCredential. This command takes the following input parameters:

- AccountName – Name of the Azure Data Lake Analytics account

- DatabaseName – Name of the Azure Data Lake Analytics U-SQL Database

- CredentialName – Any desired name of the credential that we intend to create

- Credential – We intend to create a new credential. So just provide the values as shown below

- DatabaseHost – Name of the Azure database that we intend to access

- Port – By default, the port on which Azure SQL Database listens to is 1433

Once you execute this command, you would see a prompt asking for the user id and password that forms the credential. Provide the access credentials of the Azure database that would have configured while creating this database, as shown below:

Once the command is executed successfully, the credential would get created. Navigate to the Azure Data Lake Explorer, and expand the database that we mentioned in the command, and under the Credentials folder, you would be able to find the newly created credential by the command that we executed in the above step.

Creating Data Source

After creating the credential, we need to create a data source. For the same, we can use the CREATE DATA SOURCE U-SQL command as shown below. We intend to create it in the DriverDB U-SQL database, so ensure that the name of the data source is preceded by the name of the database. In the provider string, provide the name of the SQL Azure Database which hosts the table we intend to access. For the credential name, provide the name of the credential that we created in the above step. Once the command is ready, execute the job on the Azure Data Lake Analytics account as shown below:

Once the job completes successfully, navigate to the Data Lake Explorer, and under the Data Sources section, you would be able to see the newly created data source as shown below:

Creating an External Table

Azure Data Lake Analytics supports the creating of two types of U-SQL tables. One is managed tables, which is the regular tables that we create using Azure Data Lake Analytics as the data source that we saw in the earlier part of this series. Another type of table that is supported is External Tables. This allows us to create a table that accesses the Azure SQL Database as the data source. In this setup, we are going to create an external table.

This can be easily created using the CREATE EXTERNAL TABLE command as shown below. The first step is to ensure that this table is created in the right database, so using the USE command we switch the context to the database in which we intend to create the table. Then we use the CREATE EXTERNAL TABLE command and provide the column definition along with the data types that are compatible with U-SQL. In the FROM clause, we specify the name of the data source that we created in the previous step, and LOCATION as the name of the table in the Azure SQL Database that would act as the source for this table.

Execute this job on the Azure Data Lake Analytics account, and once the job is completed successfully, the table would get created. Navigate to the Data Lake Analytics Explorer and you would be able to find the table under the tables section in the database we considered for table creation as shown below. You can also see the columns listed under it.

Now that the table is created, it’s time to access the table using a U-SQL job. Navigate to the solution explorer, right-click and select Add a new item, and select U-SQL Script. This would add a new blank U-SQL Script file to the solution. Type the U-SQL Script as shown below:

In this first line of the script, we are reading data from the newly created table using the SELECT command and populating it in a variable. In the second step, we are merely writing the read values to an output file on the Azure Data Lake Storage account using CSV outputters. Once the script is ready, execute the job on the Azure Data Lake Analytics account.

Once the job has been executed successfully, it would look as shown below. The job graph shows that data is being read from the table and written to an output file, and 450 rows have been processed.

Navigate to the Azure Data Lake Storage account, and you would be able to find the output file. Open the file for preview, and you would be able to see the file as shown below:

In this way, we can access data from an Azure SQL Database from Azure Data Lake Analytics.

Conclusion

In this article, we started with the basic setup of an Azure SQL Database and Azure Data Lake Analytics account with a database. We created a database credential using PowerShell from Azure Cloud Shell. Then we create a data source in Azure Data Lake Analytics account using U-SQL, followed by the creation of an external table using U-SQL as well. Finally, we created a U-SQL job to access the data from this external table that points to the Azure database.

Table of contents

- Finding Duplicates in SQL - February 7, 2024

- MySQL substring uses with examples - October 14, 2023

- MySQL group_concat() function overview - March 28, 2023