Some of my previous articles on Python provided insight of the basics and the usage of Python in SQL Server 2017.

- Why would a SQL Server DBA be interested in Python

- An overview of Python vs PowerShell for SQL Server Database Administration

- Data Interpolation and Transformation using Python in SQL Server 2017

This article is an effort to collect all the missing pieces and try to showcase the importance of using Python programming in SQL Server.

Many say that PowerShell has the upper hand over Python in some aspects of the database administration. I too am under the same impression as many technical enthusiasts, but with very limited knowledge, we can also see the power of Python. Perhaps, instead of pitting PowerShell and Python against each other, we can look at them as complementing technologies.

In 2016, R, the statistical computing programming language was integrated with the SQL Server version, named for the same year. The other side of the coin was missing since Python is also a leading machine learning language and even having a large user base. In SQL Server 2017, Python is integrated. Now, R and Python are under the same umbrella of the feature called machine learning services.

As Python is being a common programming language adopted by data scientists and database administrators, the ability to run Python code as T-SQL script enables the machine learning capabilities, directly when it comes to dealing with large amounts of data. First, data no longer needs to be extracted from the database before it can be processed through a client program. This provides us with significant benefits in terms of security, integrity, and compliance that arise when data is otherwise moved outside of the highly controlled environment within the database engine. Further, computations are performed on the server itself without having to first transfer the data to a client, thereby placing a large load on network traffic. This also means that you can perform computations across the entire dataset without having to take representative samples as is common when processing data on a separate machine. And because the data stays in place, you can take full advantage of the performance benefits brought by SQL Server technologies such as in-memory tables and column-store indexes. Python code is also very easy to deploy and can be written directly inside of any Transact-SQL command.

Next, SQL Server 2017 supports the installation of any Python packages that we might need so you can build on top of the extensive collection of open source capabilities that have been developed by the wider Python community. And finally, Python integration is available in every edition of SQL Server 2017, even the free-to-use Express edition. So, no matter the scale of your application, you can take advantage of Python integration.

To get you started, SQL Server 2017 includes a number of libraries from Anaconda, a very popular data science platform. In addition to that, Microsoft has created two libraries that are installed with Machine Learning Services.

- Revoscalepy

Revoscalepy is a library of functions that supports distributed computing, remote compute contexts, and high performance algorithms

- Microsoftml

The Microsoftml library contains functions for machine learning algorithms including the creation of linear models, decision trees, logistic regression, neural networks, and anomaly detection.

Let’s begin

The traditional ways of analysis within SQL Server using various Microsoft components such as SQL, MDX, DAX in PowerPivot gives the flexibility to transform data. Now, the R language, another rich superset of machine learning modules for data analytics is directly integrated with SQL Server 2016. R is another language which has a large user base, along with Python. With the available modules in Python, data analysis becomes more efficient and effective.

Let me take you through a few examples to prove that the use of Python within SQL Server is an efficient way to pull data from remote servers.

- See how to connect to a SQL Server data source using pyodbc

- Execute the SQL query, in this case build the connecting string which points to remote SQL instance and execute the Dynamic Management View query sys.dm_os_waitstas

- Assign the SQL output to the data frames

-

Display the top 10 rows of the SQL result set using the head function

The head command is used to display the first ‘n’ rows of the dataframe. This is just like the top command of the SQL Server. The first column (showing 0 to 9) is the default index for the dataframe - In order to convert the dataframe data into related SQL columns, the WITH RESULTS SET clause is defined at the end of the code. This gives us the flexibility to define the column and associated types of each column.

- WITH RESULT SETS requires that the number of columns in the result definition must be equal to the number of columns returned by the stored procedure/SQL query. In the following examples, the output of the SQL query returns four columns: servername, waiting_type, waiting_tasks_count, wait_time_ms. These match the definition of the with result sets clause.

|

1 2 3 4 5 6 7 8 9 10 11 12 |

EXEC sp_execute_external_script @language = N'python', @script = N'import pyodbc import pandas as pd connection = pyodbc.connect(''DRIVER={ODBC Driver 13 for SQL Server};SERVER=hqdbsp18;UID=sa;PWD=api1401'') cursor = connection.cursor() query = ''SELECT wait_type,waiting_tasks_count,wait_time_ms FROM sys.dm_os_wait_stats'' df = pd.read_sql(query,connection) print(df.head(5)) connection.close() ' |

The above SQL query output can also be returned as a SQL table using with result clause.

|

1 2 3 4 5 6 7 8 9 10 11 |

EXEC sp_execute_external_script @language = N'python', @script = N'import pyodbc import pandas as pa connection = pyodbc.connect(''DRIVER={ODBC Driver 13 for SQL Server};SERVER=hqdbsp18;UID=sa;PWD=sqlshackai1401'') query = ''SELECT @@servername,wait_type,waiting_tasks_count,wait_time_ms FROM sys.dm_os_wait_stats'' pa.read_sql(query,connection) OutputDataSet = pa.read_sql(query,connection) ' WITH RESULT SETS((servername varchar(20),waiting_Type varchar(256), waiting_tasks_count bigint, wait_time_ms decimal(20,5))) |

Let us look at an example to push the sample data into the table. The following SQL query sample yields the database internal information, printed as a string output.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 |

EXEC sp_execute_external_script @language = N'Python' , @script = N'import pyodbc import pandas as pa with open("f:\PowerSQL\server.txt", "r") as infile : lines = infile.read().splitlines() for line in lines: server = line print(server) database = "Master" cnxn = pyodbc.connect("DRIVER={ODBC Driver 13 for SQL Server};SERVER="+server+";PORT=1443;DATABASE="+database+";UID=sa;PWD=api1401") tsql = """ SELECT @@SERVERNAME serverName, CONVERT(VARCHAR(25), DB.name) AS DatabaseName, (SELECT count(1) FROM sysaltfiles WHERE DB_NAME(dbid) = DB.name AND groupid!=0) AS [DataFiles], (SELECT cast(SUM((size*8)/1024) as decimal(10,2)) FROM sysaltfiles WHERE DB_NAME(dbid) = DB.name AND groupid!=0) AS [DataMB], (SELECT count(1) FROM sysaltfiles WHERE DB_NAME(dbid) = DB.name AND groupid=0) AS [LogMB], (SELECT cast(SUM((size*8)/1024) as decimal(10,2)) FROM sysaltfiles WHERE DB_NAME(dbid) = DB.name AND groupid=0) AS [LogMB] FROM sys.databases DB ORDER BY DatabaseName""" print(pa.read_sql(tsql,cnxn))' |

The following SQL creates a demo table on the target instance. SQLShackDemoDB is the name of the target database.

|

1 2 3 4 5 6 7 8 9 10 11 12 |

USE [SQLShackDemoDB] GO CREATE TABLE [dbo].[tbl_databaseInventory]( [ServerName] [varchar](100) NOT NULL, [databaseName] [varchar](25) NULL, [DataFiles] [int] NULL, [DataMB] [int] NULL, [LogFiles] [int] NULL, [LogMB] [int] NULL ) ON [PRIMARY] GO |

The stored procedure named P_SampleDBInventory_Python is created with two connections.

- Load the pyodbc Python module for data connectivity

- Build the target connection string. It includes the destination object details such as target instance, database and table

- Open the cursor

- The second connection string is built using a file. The file is the source of the input server names. The query should traverse across the lists to generate the data sets. Then the dataset is traversed to pull the column details in the destination using the target connection string

- Build the SQL statement using triple quotation marks. The triple quotes are used to build regular strings that can span into multiple lines

- The SQL statement is executed using the defined cursor

- Results are loaded into the destination table

-

Call the stored procedure

1EXEC P_SampleDBInventory_Python

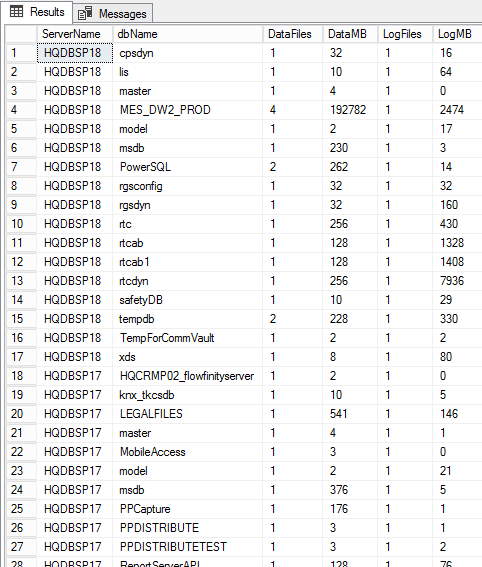

- Verify the SQL Output

For the target connection string,

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 |

CREATE Procedure P_SampleDBInventory_Python AS EXEC sp_execute_external_script @language = N'Python' , @script = N' import pyodbc import pandas as pa Instance = ''HQBT01'' Database = ''SQLShackDemoDB'' uname = ''thanVitha2017'' pwd = ''thanVitha2017401$'' conn1 = pyodbc.connect(''DRIVER={ODBC Driver 13 for SQL Server};SERVER=''+Instance+'';PORT=1443;DATABASE=''+Database+'';UID=''+uname+'';PWD=''+ pwd) cur1 = conn1.cursor() with open("f:\PowerSQL\server.txt", "r") as infile : Input = infile.read().splitlines() for sInput in Input: srv = sInput print(srv) db = "master" conn2 = pyodbc.connect("DRIVER={ODBC Driver 13 for SQL Server};SERVER="+srv+";PORT=1443;DATABASE="+db+";UID=sa;PWD=as21201") cur2 = conn2.cursor() SQL1 = """ SELECT @@SERVERNAME serverName, CONVERT(VARCHAR(25), DB.name) AS DatabaseName, (SELECT count(1) FROM sysaltfiles WHERE DB_NAME(dbid) = DB.name AND groupid!=0) AS [DataFiles], (SELECT cast(SUM((size*8)/1024) as decimal(10,2)) FROM sysaltfiles WHERE DB_NAME(dbid) = DB.name AND groupid!=0) AS [DataMB], (SELECT count(1) FROM sysaltfiles WHERE DB_NAME(dbid) = DB.name AND groupid=0) AS [LogFiles], (SELECT cast(SUM((size*8)/1024) as decimal(10,2)) FROM sysaltfiles WHERE DB_NAME(dbid) = DB.name AND groupid=0) AS [LogMB] FROM sys.databases DB ORDER BY DatabaseName;""" with cur2.execute(SQL1): row = cursor.fetchone() while row: print (str(row[0])+" "+str(row[1])+" "+str(row[2])+ " "+str(row[3])+" "+str(row[4])+" "+str(row[5])) SQL2 = "INSERT INTO [tbl_DatabaseInventory] (ServerName, DatabaseName, DataFiles, DataMB, LogFiles, LogMB) VALUES (?,?,?,?,?,?);" with cur1.execute(SQL2,str(row[0]),str(row[1]),row[2],row[3],row[4],row[5]): print (''Successfuly Inserted!'') row = cursor.fetchone()' END |

Output:

Wrapping Up

So, there you have it. While the examples provided were fairly straightforward, I hope you can see how useful Python could be for a database administrator as well, and not just a BI or Data Scientist.

The real beauty is that all this code could happily sit inside a stored procedure; something that you can’t do all that well with PowerShell.

Python integration in SQL Server 2017 gives data scientists an easy way to interact with their data directly in the database engine and it gives developers an easy way to integrate Python models into their application through simple stored procedures.

- Stairway to SQL essentials - April 7, 2021

- A quick overview of database audit in SQL - January 28, 2021

- How to set up Azure Data Sync between Azure SQL databases and on-premises SQL Server - January 20, 2021