A SQL Server backup and restore strategy is an essential process to safeguard and protect critical data. The vital role of any DBA is to minimize the risk of data loss and preserve data modifications at regular intervals of time. A well-planned and well-tested backup-and-restore strategy always help to protect the organization with no data loss, or at least a minimum, from the many varieties of system and database failure. As such, it is recommended to understand the importance and implication of the backup-and-restore strategy.

In this article the following topics are covered:

- High-level components and concepts of Memory-optimized objects

- Introduction to Backup and Restore Strategies

- Background of In-Memory OLTP engine

- Explain database recovery phases

- Transaction logging

- Backup and restore of the memory-optimized database

- Piecemeal restore of the memory-optimized database

- And more…

Introduction

One should understand the basic business requirements, of an organization, and system internals so that the good backup-and-restore strategy can be defined. OLTP workloads define the state of the data. Data-manipulation and data-transformation operations define the memory and disk requirements for the operation to complete. A good knowledge about the systems and local applications is always a key factor for successful backups. Improper management of backups may lead to a greater risk to database availability.

In-Memory OLTP engine and storage engines both use the same transaction log and Write-Ahead Logging (WAL) logging mechanism; however, the log record format and algorithms used for In-Memory OLTP logging mechanism is entirely different. It generates log records only at the time of a commit transaction. There is no concept of tracking uncommitted changes and there’s no need to write any undo records to the transaction log. In-Memory OLTP generates logs based on the transaction write-set. All the modifications are combined to form one or very few log records.

The SQL Server engine handles the backup-and-recovery of durable memory-optimized databases in a different way than the traditional database backups. As we know, any data held in RAM is volatile and it’s lost when the server reboots or server crashes. However, the In-Memory architecture is engineered to create a backup of the data on the localized disk. As it’s a memory-centric, this adds a new step in the database recovery phase. Memory management is a very important step. If it’s not managed, we’ll end up with out-of-memory issues.

Database Recovery Phases

When SQL Server instances restart, each database goes through different recovery stages.

The different phases are:

- Analysis

- Redo

- Undo

Analysis: In this phase, the transaction log is analyzed to track the information about the last checkpoint and create the Dirty Page Table (DPT); this captures all the dirty-page details. In In-Memory OLTP engine, the analysis phase identifies the checkpoint inventory and prepares the system table with all the log entries and also its processes the associated file allocation log records

Redo: This is the roll-forward phase. When this phase completes, the database comes online.

Here are the points to ponder:

- For disk-based tables, the database is moved to the current point-in-time and acquires locks taken by uncommitted transactions.

- For memory-optimized tables, data from the data and delta file pairs are loaded into the memory and then update the data with the active transaction-log based on the last durable checkpoint. During this phase, disk and memory-optimized based object recovery run concurrently.

- In SQL Server 2017, the redo phase of the memory-optimized tables (the associated data and delta files) is done in parallel. This results in faster times for database recovery process.

- When the above operations are completed for both disk-based and memory-optimized tables, the database becomes online and available for access.

Undo: This is the rollback phase. It holds the list of the active transaction from the analysis phase basically undoing the transactions. This phase is not needed for memory-optimized tables since In-Memory OLTP doesn’t record any uncommitted transactions for memory-optimized tables.

Checkpoint

A checkpoint is a background process continuously scans the transaction log records and then writes the data and delta files on disk. Writing to the data and delta files is done in an append-only manner by appending newly created rows to the end of the current data file and appending the deleted rows to the corresponding delta file.

Data and Delta Files

For persistence, the data for memory-optimized tables are stored in one or more checkpoint file pairs (CFP). The data file(s) store inserted rows and delta file(s) stores the references of deleted rows. The update operation is an insert followed by delete rows. Over time, based on the merge policy, the background checkpoint process merges the CFP’s into a new CFP and older CFP’s go through removal process with a process of garbage collection.

Getting started

To create a sample Memory optimized database run through the following steps

- Create a In-Memory optimized database, named SQLShackInMemDB

- Map file-stream container to In-Memory file-group

- Create a In-Memory optimized table, named SQLShackInMemAuthor

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |

CREATE DATABASE SQLShackInMemDB GO ALTER DATABASE SQLShackInMemDB ADD FILEGROUP SQLShackInMemDB_FG CONTAINS MEMORY_OPTIMIZED_DATA ALTER DATABASE SQLShackInMemDB ADD FILE (name=SQLShackInMemDB_FG, filename='F:\SQLShackInMemDB_FG_Container') TO FILEGROUP SQLShackInMemDB_FG GO USE SQLShackInMemDB GO CREATE TABLE dbo.SQLShackInMemAuthor ( ID bigint NOT NULL, Name char (30) NOT NULL, Address char (8000) NOT NULL, CONSTRAINT [pk_SQLShackInMemTBL_ID] PRIMARY KEY NONCLUSTERED HASH (ID) WITH (BUCKET_COUNT = 500000) ) WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA) GO |

Though data is stored in memory for memory-optimized objects, SQL Server still needs to data persistence for disaster recovery hence the transactions are scattered across containers

|

1 2 3 4 5 6 7 |

SELECT state_desc , file_type_desc , COUNT(*) AS Cnt , SUM(file_size_in_bytes) / 1024 / 1024 AS DiskSizeMB FROM sys.dm_db_xtp_checkpoint_files GROUP BY state, state_desc, file_type, file_type_desc ORDER BY state, file_type |

The size of the container on disk

Database backup operations

Now, let’s backup the SQLShackInMemDB database using the following T-SQL

|

1 2 |

BACKUP DATABASE SQLShackInMemDB TO DISK = N'F:\Program Files\Microsoft SQL Server\MSSQL13.MSSQLSERVER\MSSQL\Backup\SQLShackInMemDB-FULL-empty-data.bak' WITH CHECKSUM,NOFORMAT, INIT, NAME = N'SQLShackInMemDB-Full Database Backup', SKIP, STATS = 15 |

The full database backup size is 5.3 MB. The space consumed on the disk is 156 MB. On comparison; backup size is relatively much smaller in size. On other side, though the database is empty, still there is a lot of space consumed on the disk due to the fixed storage constraints.

Insert dummy values to the table to understand the storage internals and allocation units

|

1 2 3 4 5 6 7 |

DECLARE @id bigint = 0 WHILE (@id < 8000) BEGIN INSERT SQLShackInMemTBL VALUES (@id, 'SQLShack', replicate ('USA, WI,Appleton', 8000)) SET @id =@id+ 1; END |

The following query is used to check the different states of the and status of checkpoint files

|

1 2 3 4 5 6 7 |

SELECT state_desc , file_type_desc , COUNT(*) AS Cnt , SUM(file_size_in_bytes) / 1024 / 1024 AS DiskSizeMB FROM sys.dm_db_xtp_checkpoint_files GROUP BY state, state_desc, file_type, file_type_desc ORDER BY state, file_type |

Now, initiate a full database backup. As we can see that the database backup command is no different from the standard backup command T-SQL.

|

1 2 |

BACKUP DATABASE SQLShackInMemDB TO DISK = N'F:\Program Files\Microsoft SQL Server\MSSQL13.MSSQLSERVER\MSSQL\Backup\SQLShackInMemDB-FULL-data.bak' WITH CHECKSUM,NOFORMAT, INIT, NAME = N'SQLShackInMemDB-Full Database Backup', SKIP, STATS = 15 |

Let’s initiate differential backup. The backup size is relatively smaller than the full backup.

|

1 2 |

BACKUP DATABASE SQLShackInMemDB TO DISK = N'F:\PowerSQL\SQLShackInMemDB-DIFF-data.bak' WITH DIFF, CHECKSUM,NOFORMAT, INIT, NAME = N'SQLShackInMemDB-DIFF Database Backup', SKIP,STATS = 15 |

Execute the backup log command. This proves that it’s no different from normal backup operation command.

|

1 2 |

BACKUP LOG SQLShackInMemDB TO DISK =N'F:\Program Files\Microsoft SQL Server\MSSQL13.MSSQLSERVER\MSSQL\Backup\SQLShackInMemDB-LOG-data_1.trn' WITH CHECKSUM,NOFORMAT, INIT, NAME = N'SQLShackInMemDB-Tran Database Backup', SKIP, STATS = 15 |

Using sys.dm_db_xtp_checkpoint_files, it’s really that easy to see the state_desc. The “WAITING FOR LOG TRUNCATION” state of the checkpoint files reminds to backup the transaction logs. Checkpoint files go through various stages before they can be deleted and removed.

|

1 2 3 4 5 6 7 |

SELECT state_desc , file_type_desc , COUNT(*) AS Cnt , SUM(file_size_in_bytes) / 1024 / 1024 AS DiskSizeMB FROM sys.dm_db_xtp_checkpoint_files GROUP BY state, state_desc, file_type, file_type_desc ORDER BY state, file_type |

Database restore operation

In this section, we will discuss the steps to restore the In-Memory optimized database.

As we can see that the database restore commands for an In-Memory optimized database are no different than traditional database restore commands. But, there are few key points to consider. In this case, the size of the memory optimized objects is relatively small (~400 MB). Loading such a small chunk is relatively pretty straightforward. When dealing with larger objects, here are some of the general recommendations for how much memory is needed for the restoration activity.

- Identify the size of data/delta storage

- Measure the size of the durable memory optimized tables

- Understand the workload on the durable tables

- Compare the size of the t-log backups

Note: The outcome of the database restore always depends on the available resources.

|

1 2 3 4 |

USE [master] GO RESTORE DATABASE [SQLShackInMemDB] FROM DISK = N'F:\PowerSQL\SQLShackInMemDB-FULL-data.bak' WITH FILE = 1, MOVE N'SQLShackInMemDB_FG' TO N'f:\Program Files\Microsoft SQL Server\MSSQL13.MSSQLSERVER\MSSQL\DATA\SQLShackInMemDB_FG_Container', NORECOVERY, NOUNLOAD, STATS = 5 |

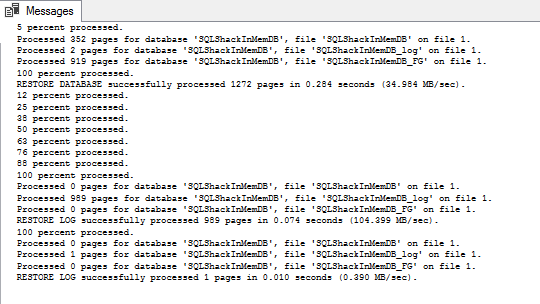

Restore t-logs

|

1 2 3 4 |

RESTORE LOG [SQLShackInMemDB] FROM DISK = N'F:\PowerSQL\SQLShackInMemDB-LOG-data_1.trn' WITH FILE = 1, NORECOVERY, NOUNLOAD, STATS = 5 RESTORE LOG [SQLShackInMemDB] FROM DISK = N'F:\PowerSQL\SQLShackInMemDB-LOG-data_2.trn' WITH FILE = 1, NOUNLOAD, STATS = 5 GO |

Piecemeal restore of a memory-optimized database

A key consideration for piecemeal restore of the memory-optimized database is that the file-group must be backed up and restored together with the primary file-group.

Demo

Let’s add another file-group and file named SQLShack_IndexFG and ShackShack_IndexFile

|

1 2 |

ALTER DATABASE SQLShackInMemDB ADD FILEGROUP SQLShack_IndexFG ALTER DATABASE SQLShackInMemDB ADD FILE (name=SQLShack_IndexFile, filename='F:\PowerSQL\SQLShack_IndexFG.ndf') TO FILEGROUP SQLShack_IndexFG |

If you’re intended to back up or restore the primary file-group, it is must to specify the memory-optimized file-group. In the backup T-SQL, we can see that primary and SQLShackInMemDB_FG are added. It is must to include the primary file-group, if you intend to backup memory-optimized file-group.

|

1 2 |

BACKUP DATABASE SQLShackInMemDB filegroup='primary', filegroup='SQLShackInMemDB_FG' TO DISK = N'F:\PowerSQL\SQLShackInMemDB-Primary-and-InMem-data.bak' WITH CHECKSUM,FORMAT, INIT, NAME = N'SQLShackInMemDB-Primary and In-Mem Database Backup', SKIP, NOREWIND, NOUNLOAD,STATS = 10 |

Now, backup the secondary filegroups. Here, SQLShack_IndexFG is the index file-group.

|

1 2 |

BACKUP DATABASE SQLShackInMemDB filegroup='SQLShack_IndexFG' TO DISK = N'F:\PowerSQL\SQLShackInMemDB-indexFG.bak' WITH CHECKSUM,FORMAT, INIT, NAME = N'SQLShackInMemDB-IndexFG Database Backup', SKIP, NOREWIND, NOUNLOAD,STATS = 10 |

Next, lets perform the t-log backup

|

1 2 |

BACKUP LOG SQLShackInMemDB TO DISK =N'F:\PowerSQL\SQLShackInMemDB-LOG-data_1.trn' WITH CHECKSUM,FORMAT, INIT, NAME = N'SQLShackInMemDB-Transaction log Database Backup', SKIP, NOREWIND, NOUNLOAD,STATS = 10 |

Thus far, we have seen the database backup operation. Now, we will see how to perform the database restore.

To restore the database, first restore the primary and memory-optimized file-group together and then restore the transaction log.

|

1 2 |

RESTORE DATABASE SQLShackInMemDB filegroup = 'primary', filegroup = 'SQLShackInMemDB_FG' from disk= N'F:\PowerSQL\SQLShackInMemDB-Primary-and-InMem-data.bak' with partial, norecovery |

|

1 2 |

RESTORE LOG SQLShackInMemDB FROM DISK =N'F:\PowerSQL\SQLShackInMemDB-LOG-data_1.trn' WITH FILE = 1, NOUNLOAD, STATS = 10 GO |

Next, restore the index file group

|

1 2 3 4 5 |

use master go RESTORE DATABASE SQLShackInMemDB file = N'SQLShack_IndexFile' from disk= N'F:\PowerSQL\SQLShackInMemDB-indexFG.bak' with RECOVERY, FILE = 1, NOUNLOAD, STATS = 10 |

The database is up and running! That’s all for now…

Wrapping Up

Memory-optimized tables are backed up with regular database backup options. The CHECKSUM clause is used in addition to validate the integrity of the data and delta file pairs storage allocations.

The process of transaction logging is optimized for scalability and high performance through the use of reduced logging mechanism.

Some extreme cases, like where the system is running out of disk space, may lead into the delete-only-mode.

Using transaction log and data and delta files, In-Memory tables are automatically rebuilt. As Data and Delta files are loaded and processed in parallel, the outage is further shortened by enforcing faster database recovery technique. Finally, transaction logs are applied to bring back the database online.

We ran through the backup and restore procedures with no additional backup procedures. The SQL Server engine manages the In-Memory database recovery process and it’s relatively straightforward with some additional steps. For durable memory-optimized data, the process has to stream the data from CFP (Checkpoint-File-Pairs) back into the memory.

The restore activity may fail due to out-of-memory errors. It is recommended to configure a dedicated resource pool for In-Memory optimized database. In general, there would be a need of 3 * the size of the durable memory-optimized memory for the successful database restores operation. I would recommend testing the backup and restore procedures in your environment.

Table of contents

References

- Choosing the right server memory for restore and recovery of memory-optimized databases

- Restore and recovery of memory-optimized tables

- In-Memory OLTP Series – Data migration guideline process on SQL Server 2014

- Survey of Initial Areas in In-Memory OLTP

- SQL Server 2016 SP1: Know your limits

- Restore and recovery of memory-optimized tables

- Resolve Out Of Memory issues

- Piecemeal Restore of Databases With Memory-Optimized Tables

- Expert SQL Server in-Memory OLTP

- Stairway to SQL essentials - April 7, 2021

- A quick overview of database audit in SQL - January 28, 2021

- How to set up Azure Data Sync between Azure SQL databases and on-premises SQL Server - January 20, 2021