One shared characteristic among popular data visualization tools such as Power BI is the ease at which data can be extracted from a variety of disparate data sources, usually at a click of a button, as shown in Figure 1. Such convenience, though, tends to come at a cost as you often have little control over how background scripts used to extract data are generated. Yet, this should be of utmost concern for data architects and BI developers alike as rarely do you find auto-generated scripts that are efficient and optimal. In this article, join me as I put on my DBA hat and trace, monitor and review SQL batch statements that are auto-generated by the Get Data feature in Power BI using SQL Server Extended Events.

Figure 1: Import data from a SQL Server database in Power BI

Import Data into Power BI Using the Navigator Window

The Get Data feature in Power BI allows for a connection into SQL Server to be established using different connectivity modes. However, for the purposes of this discussion, we will concentrate only on the Import mode of data connectivity in Power BI. The Import connectivity mode works by extracting a copy of the dataset from a data source and save it within a Power BI (.pbix) document. This mode of connectivity is suitable in environments wherein your connection to the data source is unstable or when working with a data dump. One obvious downside to storing a copy of your data within a Power BI document is that it could potentially increase the overall size of your Power BI document.

Whenever Power BI connects to a data source such as SQL Server using an Import connectivity mode, it tends to leave an imprint of itself within that data source environment. Fortunately, by running either a SQL Server Profiler or SQL Server Extended Events, we can get to see just what kind of activities occur when Power BI extracts data out of a SQL Server environment. SQL Server Profiler is now a deprecated feature in SQL Server, hence, we will only make use of SQL Server Extended Events in our demo, specifically the SQL:BatchCompleted event as we are more interested in monitoring back-end queries generated by clicking on the Power BI interface.

Assuming that you have already setup the SQL Server Extended Events session for the SQL:BatchCompleted event, we begin our data import in Power BI by configuring a connection to our SQL Server instance, as shown in Figure 2.

Figure 2: Configuring SQL Server database connection in Power BI

-

SQL Batch Statements #1: Get Database Names

The first batch of T-SQL queries is generated as soon as you click the OK button shown at the bottom of Figure 2. This batch generates two SQL:BatchCompleted events as shown in Figure 3. The batch text from the first event runs a SELECT @@VERSION command whilst the second SQL Server extended events is used to retrieve a list of user-defined database names available to the SQL Server instance that you are connected to.

Figure 3: Displaying SQL Server extended events

Power BI then takes this list of user-defined databases and displays it within the Navigator window, as shown in Figure 4

Figure 4: Power BI Navigator window

-

SQL Batch Statements #2: Get Tables and Views

As soon as you expand any of the database nodes shown in Figure 4, Power BI will submit another batch of SQL statements to the SQL Server engine. This time, to retrieve a list of database tables and views associated with a given user.

Figure 5: Displaying SQL Server extended events

Once this latest batch of statements is done running, the Navigator wizard in Power BI will be refreshed to show an extended sub-list of tables and views that the logged in user has access to. Clicking the checkbox next to a table/view in the sub-list will not generate a call to the SQL Server engine but you will get a preview of the table structure as well as a data sample, as shown in Figure 6

Figure 6: Preview of data sample

-

SQL Batch Statements #3: Get Tables and Views

Finally, when you click the Load button in Figure 6, Power BI will submit several T-SQL statements to the SQL Server engine. The majority of T-SQL statements in this batch will perform the same exercise as we have seen in prior batches with the exception of statements querying sys.foreign_key_columns and sys.index_columns system objects which is Power BI’s way of checking for any entity-relationships linked to the table/view we are attempting to load. You can always skip this check by unticking the Include relationship columns option shown in Figure 1. Finally, the last statement in the batch performs the actual data import from our data source into Power BI using the sp_executesql stored procedure.

Figure 7: Executing sp_executesql Stored procedure

So just to wrap up, using the default settings to load a single table into Power BI from SQL Server via the Import connectivity mode, the following batches are generated:

- Batch 1 – with 2 T-SQL statements

- Batch 2 – with 3 T-SQL statements

- Batch 3 – with 7 T-SQL statements

Although we have demonstrated that relying on Power BI to generates back-end queries to import data creates a total of 12 T-SQL statements for a single table, the end-to-end extraction of data from SQL Server to Power BI is still relatively quick. However, there are still some caveats, for instance, the auto-generated data extraction script uses the EXECUTE sp_executesql command which has been widely advocated for preventing SQL injection attempts yet several authors has also demonstrated in the past that using it can lead to a performance overhead of your SQL Server instance.

There is also the fact that, just like in SSMS, the Power BI Navigator will retrieve all user-defined databases within a connected SQL Server instance regardless of whether you have access to them or not. This is more annoying than a real issue but as a DBA you might have to attend to support tickets wherein users are trying to access databases that they are actually prevented from accessing. What makes it worse is that the error message displayed in Power BI does not out rightly inform you that a given database is inaccessible – as is the case in SSMS (see Figure 8), instead it returns “We couldn’t authenticate with the credentials provided. Please try again.” error message shown in Figure 9 making it seem like it’s a credentials issue. Which could lead to some users locking out their password in an attempt to gain access into a database that they shouldn’t be accessing in the first place

Figure 8: Error in SSMS

Figure 9: Error in Power BI Desktop

Import Data into Power BI by Using Custom SQL Statement

Another approach to using the Get Data feature in Power BI involves specifying a user-defined SQL statement instead of having Power BI generates it for you. This way, you have more control of the filters you want to apply and the fields you want to retrieve. To demonstrate this approach, we begin by configuring a SQL Server connection, as shown in Figure 10

Figure 10: Configuring SQL Server Connection

This time clicking OK at the bottom of Figure 10 does not result in Power BI submitting SQL batches into SQL Server, instead, you are redirected to the data preview window shown in Figure 11.

Figure 11: Data preview window in Power BI

Only after you have clicked the Load button in Figure 11, does Power BI generate SQL batch statements. The auto-generated batch contains three SQL:BatchCompleted events as shown in Figure 12. The first event runs a SELECT @@VERSION command whilst the second and the third are a repetition of the custom SQL statement we provided in Figure 10.

Figure 12: SQL:BatchCompleted SQL Server Extended events

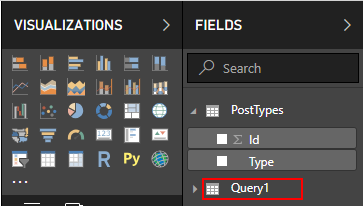

It’s that simple! You no longer have to worry about Power BI querying system objects to get database and table/view metadata or users trying to access databases they shouldn’t be accessing. However, there is a catch in that unlike in the Navigator window approach wherein your Power BI model entities were created using the names of source tables/views, entities created out of importing data using a user-defined SQL command are prefixed with Query which means you have to edit your model and rename the object accordingly, as shown in igure 13.

Figure 13: Data imported using Custom SQL Statement

Another downside to using the custom SQL statement option is that you can only import one dataset at a time, whereas in the Navigator approach, you could select as many tables/views as you like. The rest of the comparisons are between the two approaches is given in Table 1.

Get Data Using Navigator Window | Get Data by Using Custom SQL Statement |

By defaults, a total of 12 T-SQL statements are generated | By defaults, a total of 3 T-SQL statements are generated |

The database name is optional | The database name is mandatory |

Can import multiple tables at a time | Can only import one table per T-SQL statement |

Less control of the generated T-SQL statement | More Control of generated T-SQL statement |

T-SQL is written for you by Power BI | T-SQL is user-defined |

The entity name is derived from the source table name | The entity name is a generic name prefixed with Query |

Relationships can be detected by Power BI | Joins and relationships are defined by the user |

Table 1

Summary

Power BI is an exciting and powerful data visualization tool but like all such tools, it has its pros and cons, particularly when importing data. We hope this article provides a comprehensible approach on monitoring batch statements with Power BI using SQL Server extended events. Also, you get an idea of whether to write your own T-SQL queries to extract data or rely on Power BI to auto-generate scripts that will facilitate data import from SQL Server.

See more

Check out SpotLight, a free, cloud based SQL Server monitoring tool to easily detect, monitor, and solve SQL Server performance problems on any device