If this is your first visit to this SQL Server Transaction Log series, I recommend you first review the previous articles (see the TOC below), in order not to be familiar with the concepts that we will discuss in this article. Reviewing the previous articles, you will have a good idea about the following:

- The internal structure of the SQL Server Transaction Log

- the three recovery model types, Full, Simple and Bulk-Logged, that specifies how the transactions will be written to the SQL Server Transaction Log file

- the relationship between the SQL Server Transaction Log and the different types of high availability and disaster recovery solutions

- how to manage and monitor the SQL Server Transaction Log file growth, the different operations that can be performed on the Transaction Log, such as the log backup, shrink and truncate operations

- and finally, the list of best practices that should be performed by the database administrators in order to keep the SQL Server Transaction Log in healthy state

Overview

This article will be a continuation for the previous article, in which we showed the important role that the SQL Server Transaction Log plays in keeping the database in a consistent state and recovering the corrupted database or mistakenly modified table to a specific point in time.

Designing a proper backup and recovery plan, that includes the different types of backup operations that are scheduled in a way that achieves the agreed RTO and RPO measure, will minimize and for some level prevent any data loss from your database by restoring the database to the correct point in time. But what if this plan is not configured well and the disaster occurred?

The SQL Server Transaction Log plays also an important role in recovering deleted or modified data if you mistakenly perform a DELETE or UPDATE operation with the wrong condition, or badly without filtration condition. This can be achieved by listening to the records stored inside in this black box that is called SQL Server Transaction Log file. The events that are written to the SQL Server Transaction Log file, without any additional configuration required from the database administrator side, includes the different types of DML operations, such as INSERT, UPDATE and DELETE statements, and DDL operations, such as CREATE and DROP statements.

Getting Started

To compare the different methods that we can use to recover data from the SQL Server Transaction Log file, we will create a new database with a single table, using the script below:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 |

CREATE DATABASE RecoverFromTransactionLog GO USE RecoverFromTransactionLog GO CREATE TABLE [dbo].[Employee_Main]( [Emp_ID] [int] IDENTITY(1,1) NOT NULL, [EMP_FirsrName] [varchar](50) NULL, [EMP_LastName] [varchar](50) NULL, [EMP_BirthDate] [datetime] NULL, [EMP_PhoneNumber] [varchar](50) NULL, [EMP_Address] [varchar](max) NULL, PRIMARY KEY CLUSTERED ( [Emp_ID] ASC )WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY] ) ON [PRIMARY] TEXTIMAGE_ON [PRIMARY] GO |

After creating the database and the table, we will fill that table with 30 records, using the INSERT INTO T-SQL statements below:

|

1 2 3 4 5 6 7 8 |

USE [RecoverFromTransactionLog] GO INSERT INTO [dbo].[Employee_Main] VALUES ('AAA','BBB','1980-10-15','98542224','ABCDE') GO 10 INSERT INTO [dbo].[Employee_Main] VALUES ('CCC','DDD','1985-08-07','98543334','FGHIJ') GO 10 INSERT INTO [dbo].[Employee_Main] VALUES ('EEE','FFF','1989-04-06','98544454','KLMNO') GO 10 |

Having no backup taken from the database yet, how could we recover the table’s data if the below UPDATE statement is executed over that table with no WHERE clause used to filter the data to be modified?

Using Built-in Methods

fn_dblog

The content of the SQL Server Transaction Log can be read online using a number of built-in functions. The first function that we will try to use to recover the lost data is the fn_dblog. It is an undocumented system table-valued function, used to dive in the SQL Server Transaction Log and view the content of the active portion of that file.

In order to use the fn_dblog() function, you need to provide two parameters, the start and the end LSN of the log. To view all logs available in the active portion of the SQL Transaction Log file, you can pass NULL value for these two parameters. As the result returned from the fn_dblog() function contains 129 columns, it is better to choose only the columns that contain the data you are interested in and filter the result to show only the operation that you plan to recover. For example, the LOP_INSERT_ROWS operation is logged when a new record is inserted, LOP_DELETE_ROWS operation is logged when an existing record is deleted, and LOP_MODIFY_ROW operation is logged when an existing record is updated. The below script can be used to check the update statements that are performed on that database:

|

1 2 3 4 5 6 7 8 |

SELECT Operation, [rowlog contents 0], [rowlog contents 1], [rowlog contents 2], [rowlog contents 3] FROM sys.fn_dblog(NULL,NULL) WHERE operation = ('LOP_MODIFY_ROW') ORDER BY Operation ASC |

And the result in our example will be like:

You can see from the previous result, that recovering the data from the Transaction Log file using the fn_dblog() function is complex, as the data is viewed in hexadecimal format and spread in multiple columns. In addition, the UPDATE operation will be minimally logged, with no information about the new or old value, as it writes only the part that is changed, for example, changing the number 4 to 5 in the phone number as performed previously. In order to be able to recover the whole column value, you need to reconstruct it manually using the correct data type, by linking it with the insert operation performed prior to updating the value.

fn_dump_dblog()

The second system built-in function, fn_dump_dblog(), has one advantage over the fn_dblog() function is that it can be used to read the SQL Server Transaction Log backup, in addition to the ability to read the online Transaction Log file. On the other hand, in order to use the fn_dump_dblog() function, you need to provide 68 mandatory parameters, as shown in the script below:

You can see from the previous snapshot that the fn_dump_dblog() function returns the same result as the result returned from the fn_dblog() function, with the same complexity in reading the hexadecimal data and reconstructing it manually to get useful information.

DBCC Page Command

SQL Server provides us with a large number of DBCC commands that can be used to perform the different types of SQL Server administration tasks. One of these undocumented commands is the DBCC Page command, that can be used to read the content of the database data and log online files. In order to view the content of the database log file, you need to provide the database name and the ID of the Transaction Log file. The ID of the Transaction Log file can be retrieved from the query below:

Then you will be able to view the Transaction Log file, after turning on the Trace Flag 3604, in order to print the output of the DBCC command, as shown below:

|

1 2 3 4 |

DBCC TRACEON (3604, -1) GO DBCC PAGE (RecoverFromTransactionLog, 2, 0, 2) GO |

Again, the result returned from the DBCC command will be displayed in hexadecimal format, that needs big effort translating it to a meaningful shape and using the correct data type, as below:

3rd party solution

When a database is corrupted or table’s data is mistakenly lost or modified, the long time that is required to reconstruct and parse the data using the built-in methods cannot be acceptable, as the seconds of downtime for the international companies means losing potentially a lot of money. So, we need search in the SQL Server administration market for a tool that can be easily and quickly used to read the Transaction Log file content and show this content in a user-friendly format.

ApexSQL Log is a SQL Server Transaction Log reader tool, that can be easily used to read the content of the online SQL Transaction Log file, the detached Transaction Log file or Transaction Log backup files chain. You need only to go through a simple wizard, that contains a variant number of customized options, and ApexSQL Log will perform the reconstruction, translation, and parsing automatically, without the need to perform any manual task.

Before using ApexSQL Log, you need to download it from the ApexSQL tools download page, then proceed with the installation process, by following a straight-forward installation wizard. After installing ApexSQL Log, you can run that tool by clicking on the Axe icon.

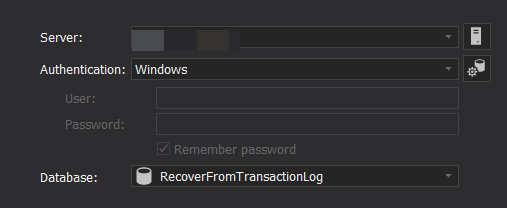

In the start page, provide the connection information, includes the SQL Server instance name, the authentication mode, the credentials, and the database name, that will be used to connect to your SQL Server instance and read the database Transaction Log File content, as shown below:

After connecting to the database server, ApexSQL Log will provide you with all data sources from where you can read the Transaction Logs on the selected database. In our example, and having no backup taken from the selected database, the only option available to read the Transaction Logs is reading the online transaction log file. Click Next to proceed:

In the next page, you will be asked to specify the format of the Transaction Logs output. In our case, we will generate an Undo/Redo script that will be used to recover the mistakenly modified data, as shown below:

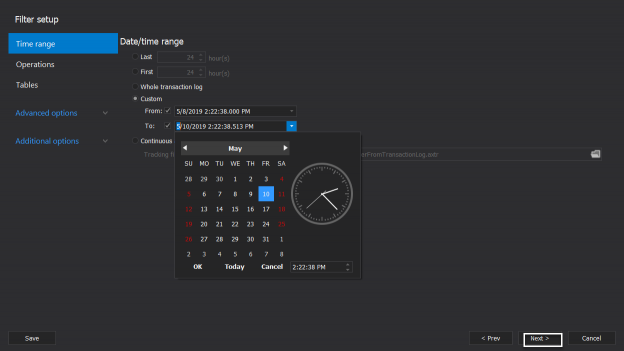

ApexSQL Log provides us with a number of options that can be used to filter the retrieved Transaction Logs from the Transaction Log file. The first filter is the Time Range filter, in which you can specify the range of time when the bad transaction was executed, as below:

Rather than viewing all transactions that are executed during the selected range of time, ApexSQL Log allows you to specify the type of operation that you plan to recover the data from. In our case, we will choose the UPDATE DML operation, as shown below:

Another useful filter is specifying the database object on which this transaction was performed. Select the name of the table you plan to recover and click Next:

From the Undo/Redo page, specify if you need to generate a script to undo the performed change or redo it again. In our case, we need to generate an Undo scrip that will be saved in the provided path, with the ability to generate a CMD or PowerShell command that can be used to recover the data later. Click Finish to proceed:

Now the script that will be used to recover the data corrupted due to the mistakenly executed UPDATE statement without having WHERE clause, is created successfully, with a summary about the generation process and links for the Undo file and the folder that contains the Undo file, as shown below:

Click on the file name provided in the previous page to view the Undo file content. In the opened file, you will see the magical UPDATE statements that can be used to recover the mistakenly modified data, by setting the phone number of each employee with the phone number value before the modification process, as shown below:

The next step now is to review these statements then execute it over the affected database. Now the table’s data is consistent now as nothing ever happened.

It is clear from the previous example, how you can easily recover any deleted or modified data using ApexSQL Log within few seconds and performing zero effort.

In the next article, we will show how to rebuild a database and bring it online in case of the lost or corrupted transaction log file. Stay tuned!

For more information on reading the SQL Server transaction log see

- Read a SQL Server transaction log

- Using a SQL log analyzer to audit, replicate and recover data

- Open LDF file and view LDF file content

Table of contents

- Azure Data Factory Interview Questions and Answers - February 11, 2021

- How to monitor Azure Data Factory - January 15, 2021

- Using Source Control in Azure Data Factory - January 12, 2021