Our organization must restrict permissions and prove to an independent party that we both restrict permissions and investigate access. We grant access to objects on a least-privilege basis and give full access to only a few exceptions, based on an organizational design that follows best practices. What else can we do to prevent unauthorized access, or to catch someone who shouldn’t have access but manages to infiltrate our systems, especially when we must show a third party that we track this?

Overview

In this post we’ll look at two methods for providing information to a third-party auditor. The first is monitoring access, which ranges from logging procedure or T-SQL execution to tracking the logins that are running queries. The second is separating our servers by data access: high-priority or PII data is placed on a node that few people can access, while lower-priority data lives on nodes that more people can access.

Monitoring access and execution

We can monitor access without running a continuous trace, though a trace is more comprehensive if we must provide a third party with evidence that we’re tracking user access. We’ll look at three methods of doing this; although we have more than these three options, they give us some best practices to consider. Building on restricted object access, the first method limits our environment to procedures or queries that always log who accesses data. In an application layer, this may be a query that returns a result and also inserts an audit row, or we could use the procedure method, which we see below.


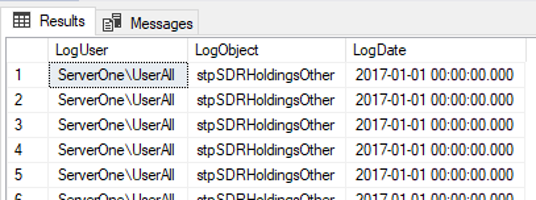

    /* ---- Example table for logging procedure access
    CREATE TABLE tbAuditLog(
        LogUser VARCHAR(100),
        LogObject VARCHAR(200),
        LogDate DATETIME DEFAULT GETDATE()
    )
    */
    CREATE PROCEDURE stpSDRHoldingsOther
    WITH ENCRYPTION
    AS
    BEGIN
        -- Return the requested data
        SELECT Members, SDRAllocations
        FROM tbSDRHoldings

        -- Log who called which object; LogDate is filled in by its default
        INSERT INTO tbAuditLog (LogUser,LogObject)
        VALUES (SUSER_NAME(),'stpSDRHoldingsOther')
    END
    -- End the batch here so the EXEC below is not compiled into the procedure body
    GO

    EXEC stpSDRHoldingsOther

    SELECT *
    FROM tbAuditLog

In addition to encrypting our procedure, we also log the object called along with the user and date.

The downside is that every procedure must log the user, object and time. The upside is that even when a user has legitimate access, we can spot inconsistencies in how that access is used, such as an employee opening a monthly report 12 times in one day when they normally look at it only once or twice during the month. This may help us catch a scenario where UserA compromises UserB’s access and looks at data without approval using UserB’s credentials.
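To make the inconsistency check concrete, a minimal sketch of such a query against tbAuditLog might look like the following. The column names follow the INSERT in the procedure above, and the threshold of 10 calls per day is an arbitrary assumption for illustration that would need tuning for each report.

```sql
-- Sketch: users who called the same object unusually often in one day.
-- The threshold (10) is an assumed value, not a recommendation.
SELECT LogUser,
       LogObject,
       CAST(LogDate AS DATE) AS LogDay,
       COUNT(*) AS CallCount
FROM tbAuditLog
GROUP BY LogUser, LogObject, CAST(LogDate AS DATE)
HAVING COUNT(*) > 10
ORDER BY CallCount DESC;
```

Grouping by day keeps the result small enough to review by hand; a weekly or monthly grouping may suit reports with a longer cycle.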

We’ll also observe that the above procedure uses the option WITH ENCRYPTION, which obfuscates the stored definition so that users cannot script the procedure to discover what it does (with restricted access they shouldn’t be able to anyway). This adds another layer of security to our objects, though I will caution developers who use procedures like this that they must know the definitions in order to alter them correctly. The added inconvenience is the cost of the extra layer of security.

What about invalid logins, where a user attempts to log into a server when they shouldn’t, or when a valid user tries to log in multiple times in a row as if they lost their password? One way we can track this on the server level is to retain all invalid login information from the error log.


    /* ---- Example table for retaining failed logins
    CREATE TABLE tbSaveLogins(
        LogDate DATETIME,
        LogText VARCHAR(2000)
    )
    */
    DECLARE @save TABLE(
        LogDate DATETIME,
        ProcessInfo VARCHAR(200),
        LogText VARCHAR(MAX)
    )

    -- Read the current SQL Server error log into the table variable
    INSERT INTO @save
    EXEC sp_readerrorlog 0,1

    -- Save only failed logins that we have not saved already
    INSERT INTO tbSaveLogins
    SELECT t.LogDate, t.LogText
    FROM @save t
        LEFT JOIN tbSaveLogins tt ON t.LogDate = tt.LogDate AND t.LogText = tt.LogText
    WHERE ProcessInfo = 'Logon'
        AND t.LogText LIKE '%Login failed%'
        AND tt.LogText IS NULL

    SELECT LogDate, LogText
    FROM tbSaveLogins

We save the failed login information so that we can later run aggregates against it.

The downside to saving this information is that it can become noisy in some situations, so I would approach alerting on it carefully. Failed logins can be a very accurate signal of a hack attempt, but too much alerting teaches developers to ignore the alerts.

The upside is that we can spot irregularities with aggregate queries and track how the numbers change over time. In an example scenario, we may get 120 bad logins a month. Suppose that we migrate some priority data to our server without adding new user access, and the priority data is only accessed a few times a month, but we suddenly see a spike to 7000 bad logins per month. This points to a possible compromise attempt, and we can architect a new design for our priority data or track who leaked information.
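As a rough illustration of the kind of aggregate this refers to, the following sketch counts saved failed logins per month from tbSaveLogins. It assumes the table has been populated regularly by the query above, since the error log itself is recycled over time.

```sql
-- Sketch: failed logins per month from the saved error log rows.
SELECT YEAR(LogDate) AS LogYear,
       MONTH(LogDate) AS LogMonth,
       COUNT(*) AS FailedLogins
FROM tbSaveLogins
GROUP BY YEAR(LogDate), MONTH(LogDate)
ORDER BY LogYear, LogMonth;
```

Comparing each month with the ones before it is what would surface a jump such as 120 to 7000.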

In some situations, we may want to take a snapshot of the current logins running queries in addition to the other ideas. In the below query, we get the users whose sessions are currently running (the filter on status) along with the login name, host and login time. Depending on our requirements, we may want to run this on a schedule, or we may want to run it at random times to prevent an infiltrator from learning when the audit runs. An infiltrator often avoids staying logged in to a server for long periods, because that makes them easier to identify, but even an occasional login may get caught in these types of audits.


    /* ---- Example table for auditing running logins
    CREATE TABLE tbAuditRunningLogins(
        LoginTime DATETIME,
        HostName VARCHAR(500),
        LoginName VARCHAR(500),
        AuditDate DATETIME DEFAULT GETDATE()
    )
    */
    -- Snapshot the sessions that are running right now; AuditDate is filled in by its default
    INSERT INTO tbAuditRunningLogins (LoginTime,HostName,LoginName)
    SELECT login_time
        , host_name
        , login_name
    FROM sys.dm_exec_sessions
    WHERE status IN ('running')

    SELECT *
    FROM tbAuditRunningLogins

Saved information from our random or scheduled audits of currently logged in users running transactions.

Because we need to submit evidence to a third party that we’re tracking this information, these types of audits also make that process easier: each random or scheduled audit, depending on the requirements, is logged with a timestamp.
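One way to turn the saved snapshots into something reviewable is a per-login summary. The sketch below is only an illustration; treating a login seen from several hosts as worth a closer look is an assumption here, not a rule from the audits themselves.

```sql
-- Sketch: summarize each login captured by the snapshot audits.
SELECT LoginName,
       COUNT(DISTINCT HostName) AS HostCount,
       COUNT(*) AS TimesCaptured,
       MIN(AuditDate) AS FirstSeen,
       MAX(AuditDate) AS LastSeen
FROM tbAuditRunningLogins
GROUP BY LoginName
ORDER BY HostCount DESC, TimesCaptured DESC;
```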

Delineating data storage by access

For a database with priority or PII data, we may consider placing the database on a separate server with a few restricted accounts, firewall restrictions, and other security enhancements like two-factor authentication. Even if an infiltrator managed to compromise an account and obtained a username and password, the credentials would be of little use without an open firewall path or a two-factor code. Even though this doesn’t guarantee security, we can present it as part of our security design if we’re answering a third-party audit of our security practices.

Part of the reason why demarcating data can be so effective, even if costly, is that it requires companies to consider who should have what level of access. Unfortunately, some security breaches occur because these discussions never happened, so everyone has full access to all data. In addition, because our database servers are separate, attempts to escalate permissions become more difficult: an infiltrator must not only gain access to a service account but also get through a firewall and other obstacles. Finally, because fewer people have access to this server, tracking the behavior of its users becomes easier, since the query patterns of two people are simpler to baseline than those of hundreds.

This option does come with higher costs, as demarcating data on separate servers for enhanced security means that we have multiple servers and these servers do not directly communicate with each other. Our methods for transferring data will therefore offer less automation and convenience, even if the security is more robust.

Conclusion

In addition to retaining this information, we want to make sure that where we store the data is restricted. If we keep a copy of the audit data local to the database where all users have read access, this defeats the purpose. We can retain copies in other restricted databases and migrate the data, if needed, since the audit data itself will become priority data.
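As a minimal sketch of restricting the audit data, the statements below grant read access on tbAuditLog to a dedicated role only and then list who holds explicit permissions on the table. The role name rlAuditReaders is hypothetical, and the sketch assumes the logging procedures and the audit table share an owner, so that callers can write audit rows through the procedure without any direct permission on the table.

```sql
-- Hypothetical role for the few people allowed to read audit data.
CREATE ROLE rlAuditReaders;
GRANT SELECT ON tbAuditLog TO rlAuditReaders;

-- List every principal with an explicit permission on the audit table.
SELECT pr.name AS PrincipalName,
       pe.permission_name,
       pe.state_desc
FROM sys.database_permissions pe
    INNER JOIN sys.database_principals pr
        ON pe.grantee_principal_id = pr.principal_id
WHERE pe.class = 1
    AND pe.major_id = OBJECT_ID('tbAuditLog');
```

The second query shows only permissions granted or denied directly on the table; access inherited through roles such as db_datareader or db_owner would need a separate check.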

In addition, all security requires a combination of techniques and no technique alone is sufficient to protect against compromise, especially in a complex technical environment. While these will add some costs, for some environments, the cost for any compromise is much greater than the cost for monitoring and preventing possible infiltrations.

