In this article, I am going to explain in detail SSIS memory usage and how can we optimize our tasks and the data flow to leverage maximum benefits from the in-memory operating tool. As you might be aware, SSIS also known as SQL Server Integration Services is a data integration tool, provided by Microsoft which comes shipped with the SQL Server editions. SSIS is an enterprise-scale, in-memory data integration tool which can be used to move data between different databases or different servers in a comfortable yet manageable way.

Overview of SSIS Memory Usage

In ETL design, the often most important question that an engineer faces is how much memory we should allocate in our production environment for running SSIS packages. This is a very common area where most of us while working on SSIS or configuring the environments need to deal with. A key point to remember is that we must understand the jobs or packages that are going to be set up, how many times are these jobs going to be executed etc. These parameters will help a BI Engineer to calculate and decide a correct figure for setting up the required memory requirements for the instance. Also, I have experienced a few occasions in which the SSIS packages are executed on the same machine on which SQL Server is running. This is not considered a good practice because most of the system memory will be allocated to SQL Server and there will be very little room for SSIS to perform in-memory operations. In such a scenario, you can either reduce the allocated memory to the SQL Server or increase the physical RAM on the server.

Since SSIS is an enterprise tool, it can consume as much RAM as you can allocate to it. However, allocating more RAM also increases the budget on the infrastructure of your projects which might not be an ideal case always. So, you should consider the package design, if there is any parallelism involved within the packages, if there are any expensive operations like sort or merge within the packages, and so on. All these factors contribute to the SSIS memory usage and we need to understand these in detail.

Concept of Buffer in SSIS

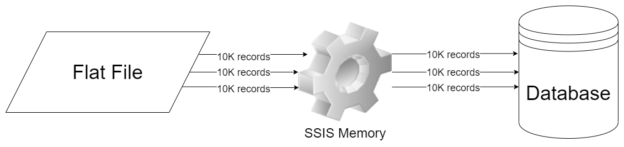

In order to understand how SSIS memory usage is defined, we need to understand buffers first. In simple words, you can consider buffer as a temporary storage area that is used by SSIS while processing data, be it from a flat file or a database. By design, SSIS is configured to use this system of buffers while performing any operations within the memory. Usually, the default value for this buffer is 10MB or 10000 records. This gives rise to a very important question – what happens when the buffer is filled? And to answer this question, when the buffer memory is full, the operations are written to the disk. This is a continuous process performed during the package execution. The SSIS engine reads data from the file into the buffer, performs the operations, and then writes the operation back to the disk when completed.

Memory consumption by Data Flow Tasks (DFT)

Data Flow Tasks or DFTs as most of us call it, are one of the most used tasks and we need to understand its SSIS memory usage. Using a Data Flow Task, we can quickly load data from a flat file to a table in SQL Server or vice versa. However, sometimes it is possible that you are going to have multiple data flow tasks within your package and the execution process takes a long time.

For example, let us consider a package that uses a Data Flow Task which loads 1M records from a flat file to a SQL Server table, then all these 1M records need to pass through the memory space that has been allocated to the SSIS server during execution.

However, in practice, when the SSIS package starts executing, all the 1M records will not be loaded into the memory at once. If this was the case, then we would require a lot more memory to support the entire 1M records at a single time. Here, the concept of buffer plays a very important role. The SSIS engine is designed to break the incoming data into much smaller chunks of 10000 records for example. This enables us to execute the SSIS package without having to load the entire dataset into the memory all at a time.

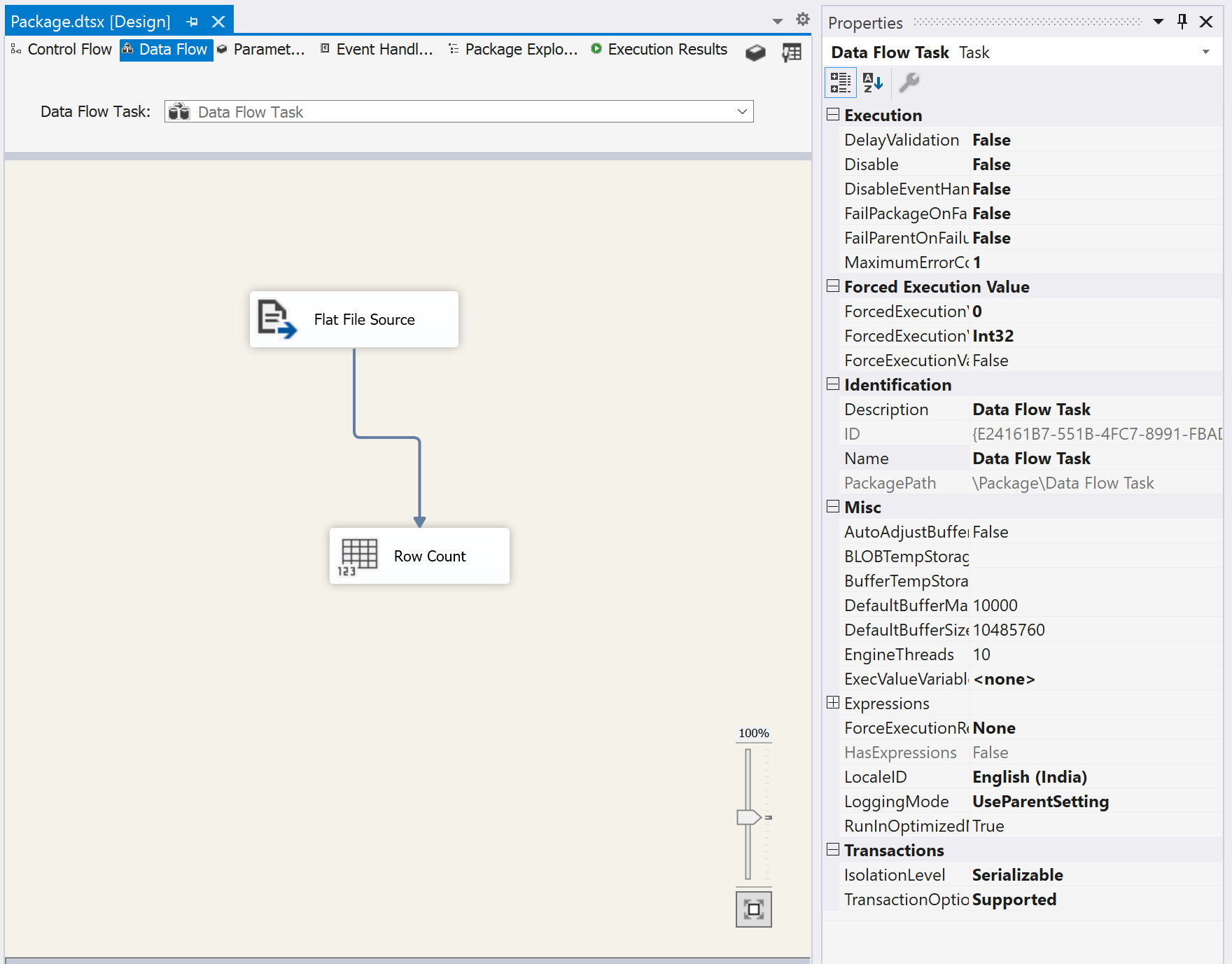

Let us try this in action. Open up SSIS and create a new project. I am going to use a simple DFT that will read data from a flat-file that has 2.5M records and use the RowCount destination in SSIS. This will help us understand how the buffers play an important role while moving data across the SSIS engine.

Figure 1 – SSIS DFT Example

As you can see in the above figure, I have created a DFT which has only two components. The Flat File Source uses a flat-file connection manager to read files stored in the local machine. And the Row Count destination will display the number of rows being read by the SSIS engine. If you have a look at the Properties window on the right, you can see that by default, the values for DefaultBufferMaxRows and DefaultBufferSize are set to 10000 and 10485760 respectively. Let us now execute the package and check the execution time of the process.

Figure 2 – Default Buffer Execution Time

After execution, we can see that the number of rows processed is 2,500,000, and the time execution duration was approximately 10.5 seconds. This is the duration when the DefaultBufferMaxRows was set to 10000. Now let us tweak this setting and increase the value from 10000 to 100,000 and re-execute the package.

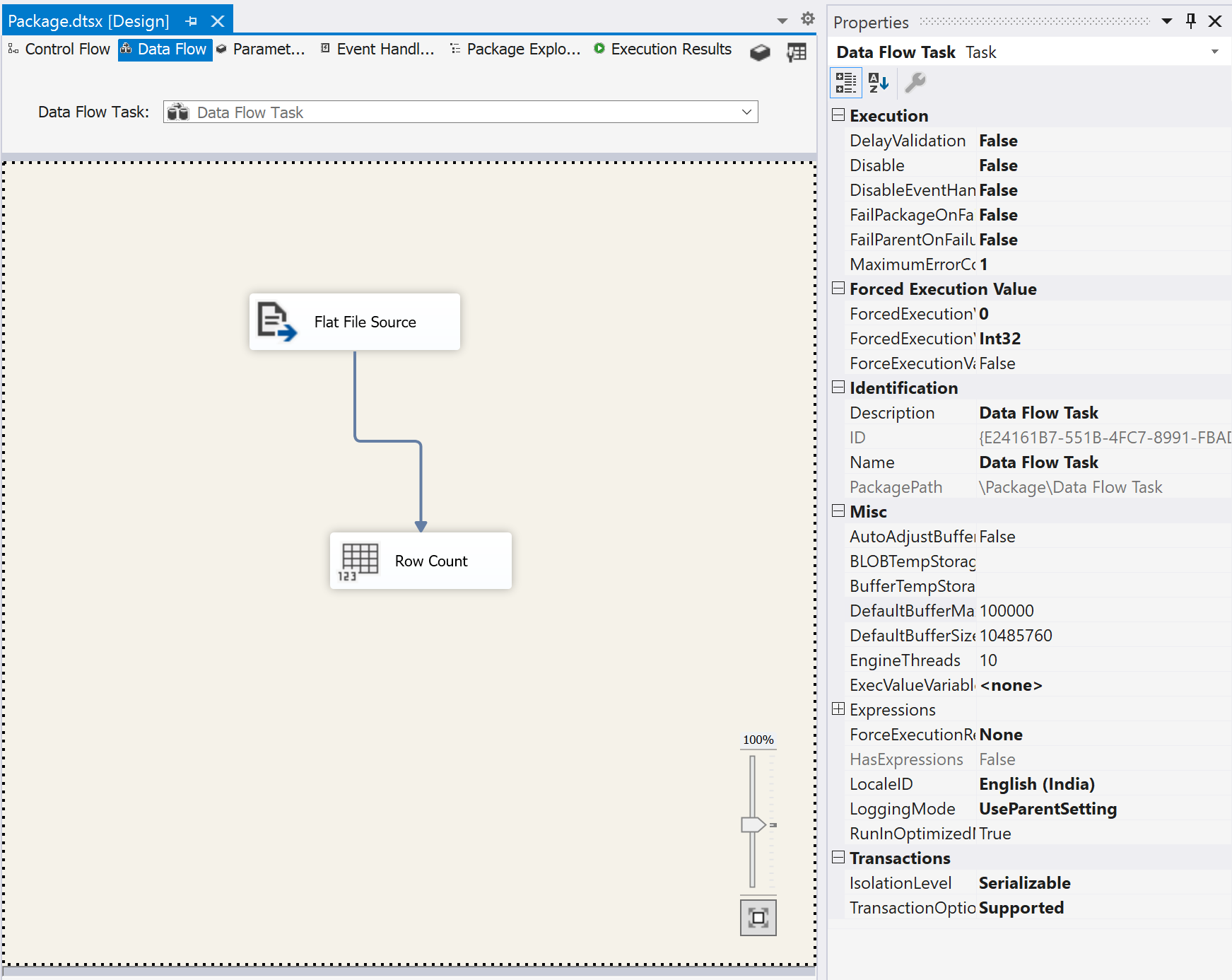

Figure 3 – Increased Default Buffer Max Rows value to 100000

Let us now execute and see the results.

Figure 4 – Package executed in 2.8 seconds

This is a huge performance benefit as you can see that the execution time has been drastically reduced to 2.8 seconds which was above 10 seconds in the previous case.

This explains that since the number of maximum rows in the buffer has been increased, a greater number of records can be fit in the buffer now and in total, the SSIS engine has to create fewer buffers while processing the task. However, there is a consideration that comes into the picture when you increase the buffer size and that is the memory. This means that now you should have more allocated memory on your server so that the SSIS engine can fit all that data into the memory while processing.

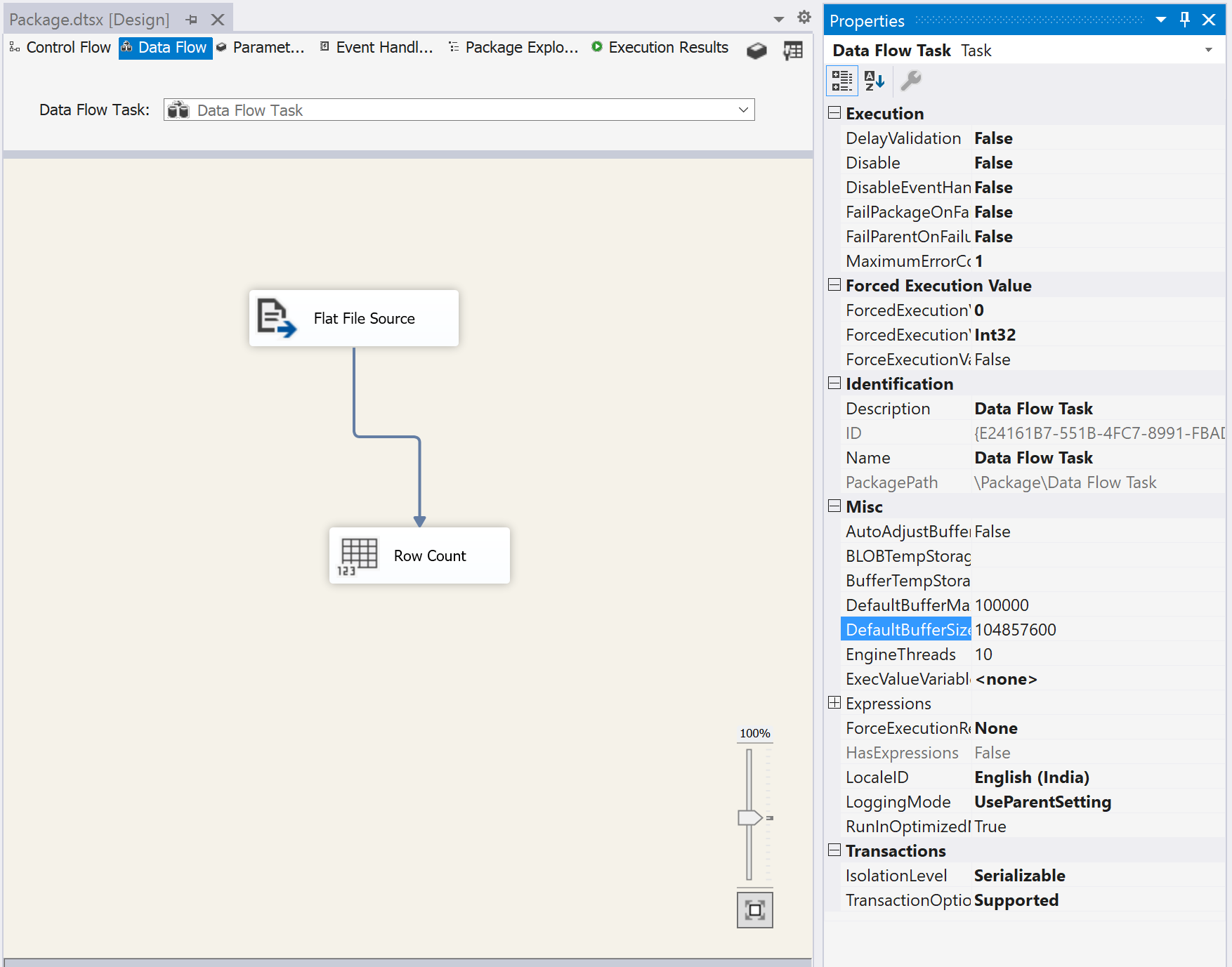

Now let us try to go a step ahead and modify the DefaultBufferSize to 104857600, i.e. increasing it by ten times and then execute the package again.

Figure 5 – Increased DefaultBufferSize

This helps us to further decrease the execution time to 1.8 seconds which is less than the previous example that we have tried in.

Figure 6 – Package executed in 1.8 seconds

From this, we can infer that we need to tweak these settings while designing our SSIS packages so that we can get the best out of the SSIS memory usage.

Conclusion

In this article, we have understood the concept of SSIS memory usage using the Data Flow Task component. I have explained the various execution patterns by tweaking the buffer that is available within SSIS. There is no hard and fast rule to define how much memory is optimal for SSIS. It always depends on the volume of data that is being moved across different systems.

Usually, higher memory helps to achieve faster transformation as much more data can be loaded into memory while processing and thus helps in completing the job faster. As you grow as a developer, with your experience, you will be able to determine the correct required memory for an SSIS instance and configure the environment accordingly.

- Getting started with PostgreSQL on Docker - August 12, 2022

- Getting started with Spatial Data in PostgreSQL - January 13, 2022

- An overview of Power BI Incremental Refresh - December 6, 2021