Using Python SQL scripts is a powerful technical combination to help developers and database administrators to do data analytics activities. Python provides many useful modules to perform data computation and processing of data efficiently. We can run Python scripts starting from SQL Server 2017. We can create the ETL solutions to extract data from various sources and insert into SQL Server.

Suppose we are getting data in a flat file in a compressed format, we can use the ETL process to import these as well. It requires files to be extracted first using the tool to extract these files such as 7z.

I have seen the developers using an external SSIS tool CozyRoc. It includes a Zip task to compress and decompress the files in various formats such as Zip, GZip, and BZip2 etc. In my previous article, we imported the compressed CSV file using the 7z compression utility. Python can also play an important role in importing data into SQL Server from the compressed files.

In this article, I am using the SQL Server 2019 CTP 2.0

You should install the Machine Learning Services (Python) to run the Python SQL scripts. If you do have installed it before, you can start the SQL Server installer. We need to add a feature in the existing SQL Server installation. In the Feature selection page, put a check on the Machine Learning Services and Python as shown in the following image:

It installs the SQL Server Launchpad service to run the Machine Learning Service. In the next page, you can set the service account it.

In the next page, we need to provide Consent to install the Python. Once you click on Accept, it downloads the required software and does the installation.

You can review the SQL Server 2019 feature before installation in the summary page. You can see we are going to install the Python with the Machine Learning Services.

In the SQL Server Configuration Manager, SQL Server service and the SQL Server Launchpad service should be running to use the Python scripts in the SQL Server.

Connect to the SQL Server. We need to enable parameter external scripts enabled using the sp_configure command to run the Python SQL scripts

|

1 2 |

EXEC sp_configure 'external scripts enabled', 1 RECONFIGURE WITH OVERRIDE |

In the below screenshot, we can see the external script enabled is configured successfully.

Once You have enabled the external scripts, you need to restart both the services. You might get the following error if the SQL Services and the Launchpad service is not started after we run the sp_configure command mentioned above.

We can run the following to test if the Python SQL script will process correctly in SQL Server.

|

1 2 3 4 5 6 7 8 9 |

execute sp_execute_external_script @language = N'Python', @script = N' a = 9 b = 3 c = a/b d = a*b print(c, d) ' |

For this example, let us prepare data using the below query in the WideWorldImporters database.

|

1 2 3 4 5 6 7 |

SELECT [PersonID] ,[FullName] ,[PreferredName] ,[SearchName] ,[IsPermittedToLogon] ,[ValidFrom] FROM [WideWorldImporters].[Application].[People] |

Save the output (1111 rows) in the CSV format in a designated directory.

Now right click on this CSV file and go to 7-Zip and click Add to archive.

We need to create a bzip2 compressed archive file.

Click Ok and it creates a compressed file Employee.csv.bzip2.

Connect to SQL Server instance and run the following Python SQL script

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 |

EXEC sp_execute_external_script @language = N'Python', @script = N' import pandas as pd import datetime as datetime OutputDataSet = pd.read_csv("C:\sqlshack\Draft articles\Data\person.csv.bz2", names = ["PersonID", "FullName", "PreferredName", "SearchName", "IsPermittedToLogon", "ValidFrom"], header = 0, compression = "bz2") ' ,@input_data_1 = N'' ,@input_data_1_name = N'' WITH RESULT SETS ( ( PersonID INT, FullName VARCHAR(512), PreferredName VARCHAR(512), SearchName VARCHAR(512), IsPermittedToLogon bit, ValidFrom nvarchar(500) ) ) ) |

You get data from the compressed CSV file as a output in SSMS. SQL Server uses Python code to interact with the compressed file and extract data using Python modules.

Let us understand this query in the Python language.

Part 1: Import Python Module: We can use Pandas module in Python to extract data from the compressed file. Python does not have a data type for the date; therefore we also need to import a module datetime.

|

1 2 3 4 5 6 7 |

EXEC sp_execute_external_script @language = N'Python', @script = N' import pandas as pd import datetime as datetime |

Part 2: Define the CSV columns and compression format: In this part, we need to define the following parameters.

|

1 |

OutputDataSet = pd.read_csv("C:\sqlshack\Draft articles\Data\person.csv.bz2", names = ["PersonID", "FullName", "PreferredName", "SearchName", "IsPermittedToLogon", "ValidFrom"],header = 0, compression = "bz2") |

- Absolute path for the compressed file in Python function pd.read.csv- In this query, we specified the CSV file path as OutputDataSet = pd.read_csv(“C:\sqlshack\Draft articles\Data\person.csv.bz2”

- Define the column names from the CSV – We can define CSV column name in the names parameter similar to names = [“PersonID”, “FullName”, “PreferredName”, “SearchName”, “IsPermittedToLogon”, “ValidFrom”]

- Header for the CSV file – In this query, we specified CSV header in the first column using header = 0

- Compression format – in this query, we specified compression format using compression = “bz2”

Part 3 – Define the output table column with data type: In this section, we need to define the table columns and their data types.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 |

,@input_data_1 = N'' ,@input_data_1_name = N'' WITH RESULT SETS ( ( PersonID INT, FullName VARCHAR(512), PreferredName VARCHAR(512), SearchName VARCHAR(512), IsPermittedToLogon bit, ValidFrom nvarchar(500) ) ) |

Now we need to import this data into the SQL Server. We can directly use Insert statement in Python query to insert in a SQL Server table. We should have a SQL Server table with the columns similar to the CSV file columns. Let us create a table in this article using the following query.

|

1 2 3 4 5 6 7 8 9 |

Create table PythonZipInsert ( PersonID INT, FullName VARCHAR(512), PreferredName VARCHAR(512), SearchName VARCHAR(512), IsPermittedToLogon bit, ValidFrom varchar(512) ) |

Once we have the SQL Server table in place, we need to insert data using Python SQL script.

|

1 2 3 4 5 6 7 8 9 10 11 12 |

insert into PythonZipInsert EXEC sp_execute_external_script @language = N'Python', @script = N' import pandas as pd import datetime as datetime OutputDataSet = pd.read_csv("C:\sqlshack\Draft articles\Data\person.csv.bz2", names = ["PersonID", "FullName", "PreferredName", "SearchName", "IsPermittedToLogon", "ValidFrom"], header = 0, compression = "bz2") ' |

We get the following output that shows the number of inserted records.

Let us understand this query to insert records directly into SQL Server table.

Part 1: Insert statement: In this step, we need to specify an insert statement into our existing SQL Server table.

|

1 |

insert into PythonZipInsert |

Part 2: Define the CSV columns and compression format: This step is precisely similar to the step we looked while viewing data in SSMS.

We do not need to specify the column and their data type Part 3- Define the output table column with data type while importing data into SQL Server tables directly.

SSIS Package to Import compressed data into SQL Server using Python SQL script

We can also use Python query in an SSIS package and import data into SQL Server tables.

Open Visual Studio 2017 and create an Integration Service Project. Specify a valid directory and solution name for this SSIS package.

It creates a solution for the PythonImport SSIS package. Drag a Data Flow task in the Control Flow area.

Double click on Data Flow Task and drag OLE DB Source in data flow task area.

In this OLE DB Source, specify the connection to SQL Server instance. We need to run Python command in this OLE DB Source to get the required data. Select data access mode as SQL Command and in SQL Command text, paste the Python code. It is same Python SQL code that we used to display data in SSMS.

Click Ok and add an OLE DB Destination. In this OLE DB Destination, specify SQL Server instance and SQL Server table in which we want to insert data from CSV using the Python SQL query.

Click on Mapping and verify the mapping between CSV columns and SQL Server table columns. We can change the mapping between these columns if column name differs in CSV and table.

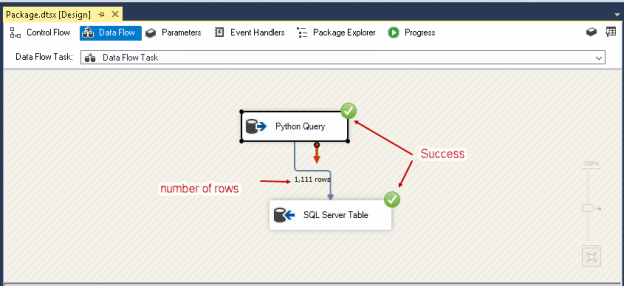

Click Ok, and you can see the SSIS package in the following screenshot. I renamed both Source and Destination as below.

- Source: Python SQL Query

- Destination: SQL Server Table

If there is any configuration error, you will get a red colour cross on the source or destination task.

We will truncate our destination SQL Server table using the following query. It ensures that table is completely empty before running the SSIS package.

|

1 |

truncate table PythonZipInsert |

Now execute this SSIS package. In the following screenshot, you can see that we inserted 1111 rows from Python SQL Query to SQL Server Table. The SSIS package execution is successful as well.

Verify records in SQL Server destination table. We have 1111 rows that are equivalent to number of rows in CSV file.

|

1 |

select count(*) as ImportusingPython from PythonZipInsert |

We can perform data transformation as well in the SSIS package based on the requirement. Python makes the process easier for us to extract data from the compressed file. We do not need to extract the compressed file first.

Conclusion:

In this article, we explored to import compressed data into SQL Server using a Python SQL command.

Table of contents

| Using Python SQL scripts for Importing Data from Compressed files |

| Importing Data into SQL Server from Compressed Files |

- Understanding PostgreSQL SUBSTRING function - September 21, 2024

- How to install PostgreSQL on Ubuntu - July 13, 2023

- How to use the CROSSTAB function in PostgreSQL - February 17, 2023